Implicating the integrated format on reading test assessment: An evaluation of relevant factors

The present study evaluates factors involved in assessing reading performance in the context of English classroom teaching. Unlike the traditional split format, in which the reading text and the test items are placed separately, the integrated format places each test item alongside the relevant part of the reading text. The study compares reading performance on the basis of four variables: test format, study subject, learning task, and pre-proficiency level. First, the findings indicate that test format influences reading performance: participants in the integrated format performed better than those in the split format. Second, there were significant interaction effects on reading test performance between test format and learning task, and between pre-proficiency level and study subject. Third, in combination with test format, task design also had an influence on reading test performance.

Trang 1

Trang 2

Trang 3

Trang 4

Trang 5

Trang 6

Trang 7

Trang 8

Trang 9

Tóm tắt nội dung tài liệu: Implicating the integrated format on reading test assessment: An evaluation of relevant factors

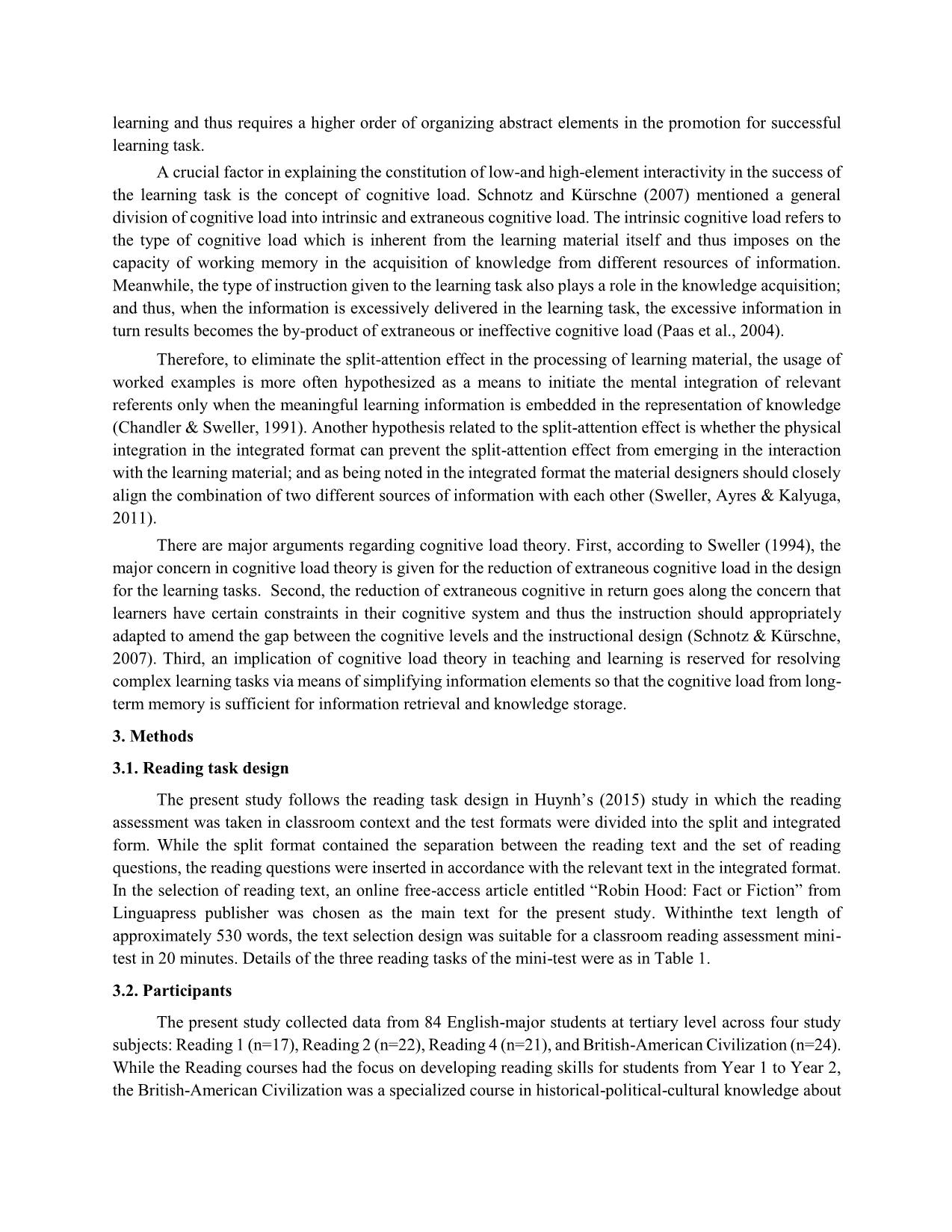

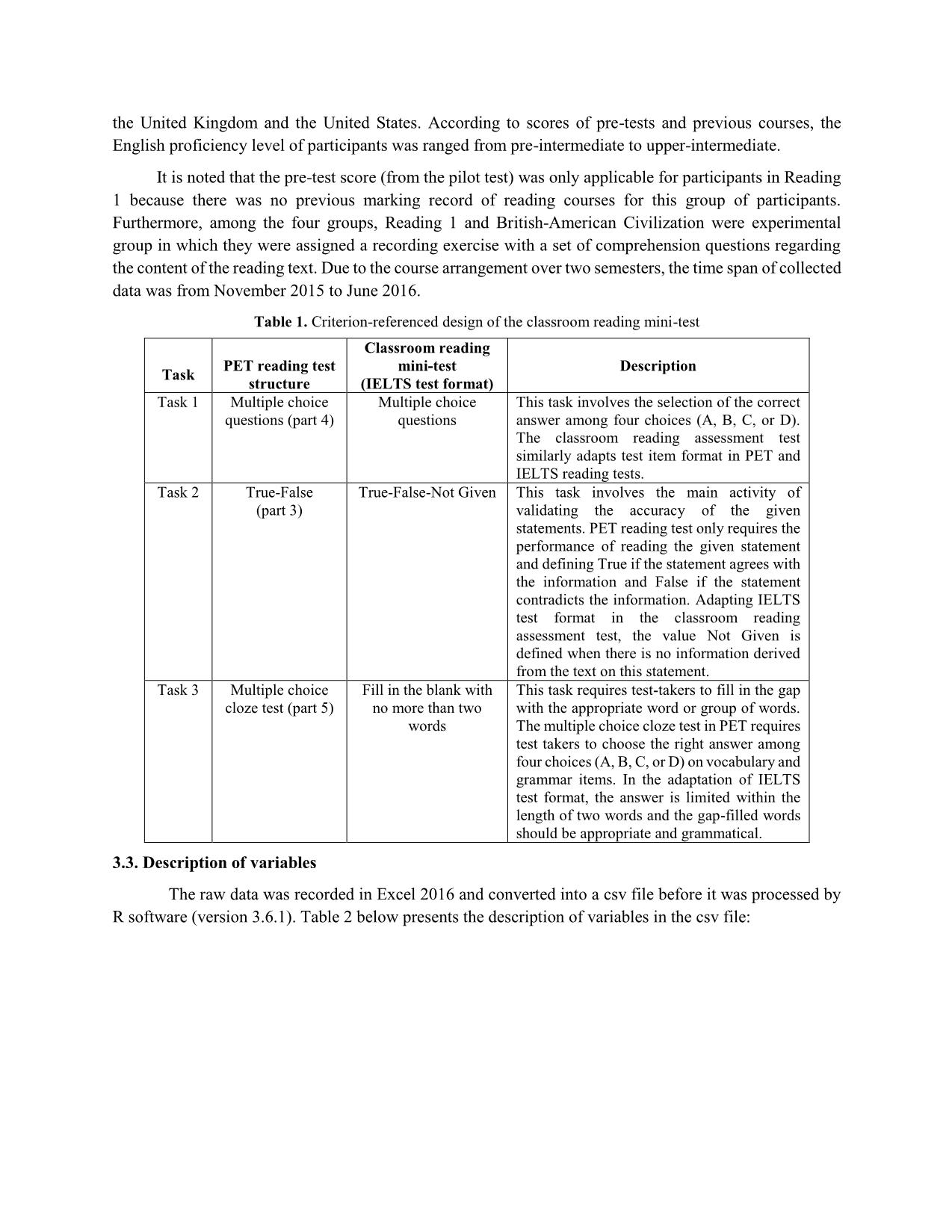

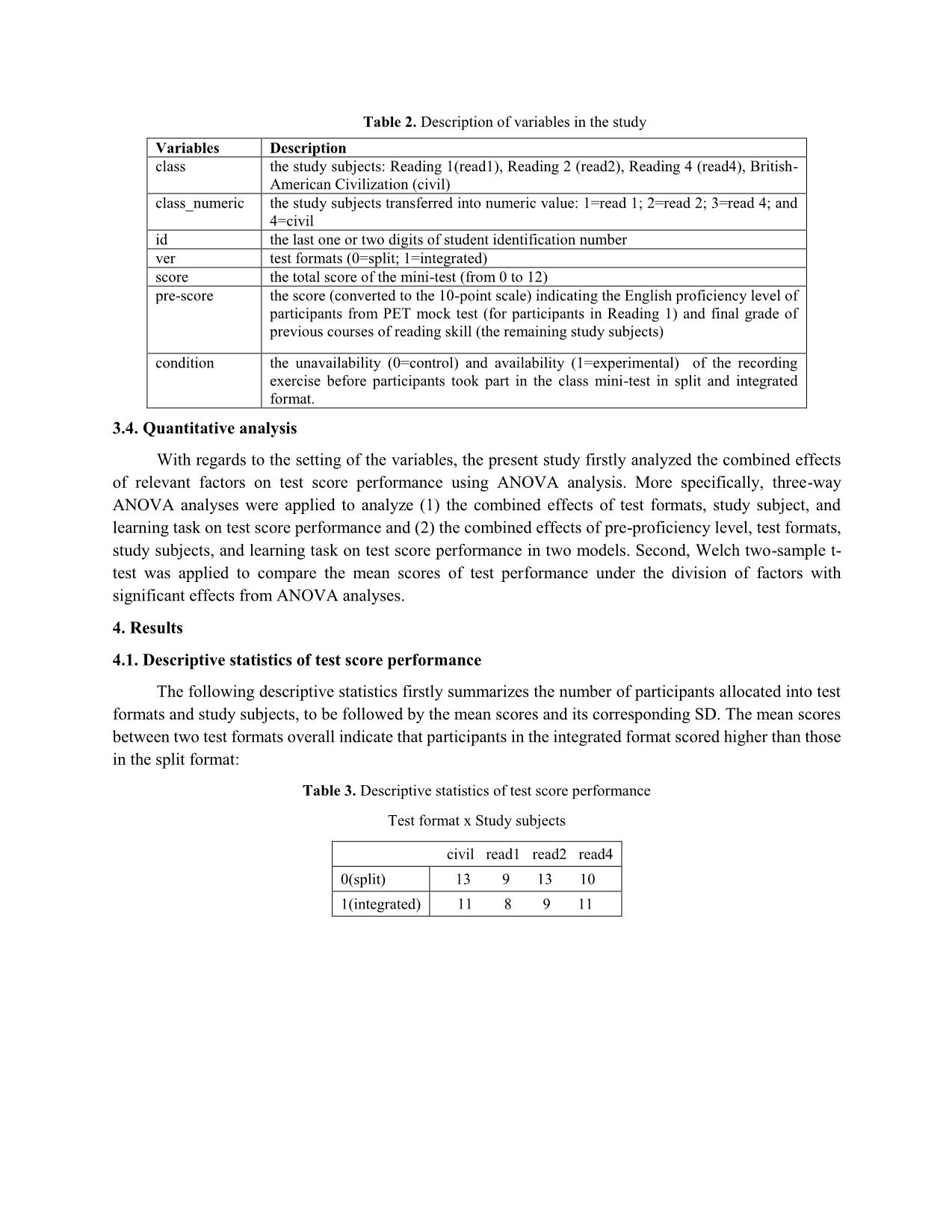

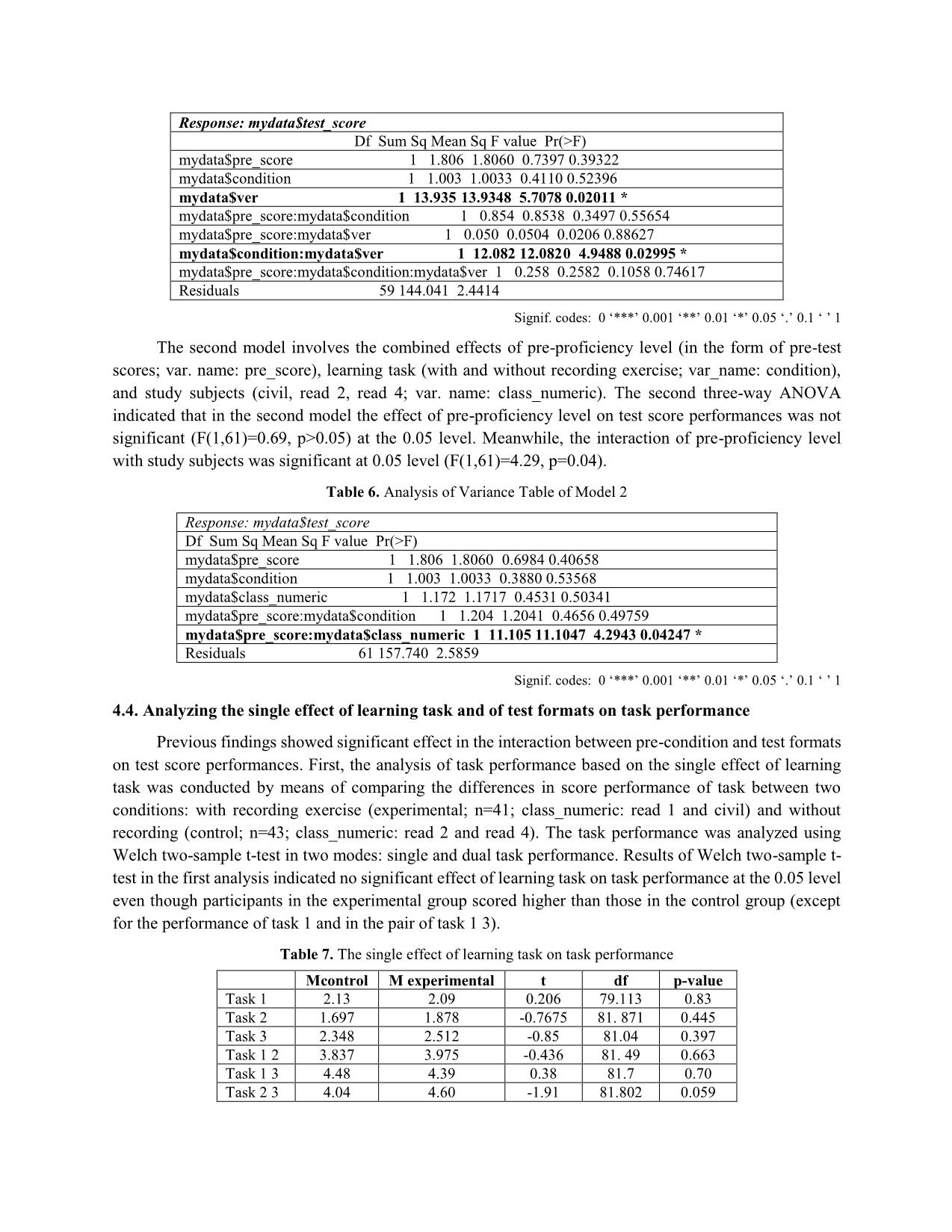

s of test performance under the division of factors with significant effects from ANOVA analyses.

4. Results

4.1. Descriptive statistics of test score performance

The descriptive statistics below first summarize the number of participants allocated to each test format and study subject, followed by the mean scores and their standard deviations (SD). Overall, participants in the integrated format scored higher on average than those in the split format, and this holds for every study subject.

Table 3. Descriptive statistics of test score performance

Number of participants (test format × study subject):

| Test format | civil | read1 | read2 | read4 |
|---|---|---|---|---|
| 0 (split) | 13 | 9 | 13 | 10 |
| 1 (integrated) | 11 | 8 | 9 | 11 |

Mean and SD:

| Test format | Subject | Mean | SD |
|---|---|---|---|
| 0 (split) | civil | 5.53 | 1.19 |
| 1 (integrated) | civil | 7.63 | 1.28 |
| 0 (split) | read1 | 5.88 | 1.36 |
| 1 (integrated) | read1 | 7.12 | 1.35 |
| 0 (split) | read2 | 6.15 | 1.57 |
| 1 (integrated) | read2 | 6.66 | 1.11 |
| 0 (split) | read4 | 5.90 | 1.79 |
| 1 (integrated) | read4 | 6.09 | 2.02 |

4.2. Analyzing the combined effects of test formats, study subject, and learning task on test score performance

A three-way between-subjects 2 × 4 × 2 ANOVA was conducted to measure the effects of test format (integrated and split; variable name: ver), study subject (civil, read1, read2, read4; variable name: class_numeric), and learning task (with and without the recording exercise; variable name: condition) on test score performance. Test format had a significant effect on test score performance at the 0.01 level (F(1, 76) = 9.38, p = 0.003). The interaction between test format and learning task was also significant at the 0.05 level (F(1, 76) = 4.30, p = 0.04).

Table 4. Analysis of Variance for test formats, study subject, learning task, and test score performance (response: test_score)

| Term | Df | Sum Sq | Mean Sq | F value | Pr(>F) |
|---|---|---|---|---|---|
| ver | 1 | 21.108 | 21.1077 | 9.3789 | 0.0030 ** |
| class_numeric | 1 | 0.047 | 0.0473 | 0.0210 | 0.885074 |
| condition | 1 | 2.037 | 2.0373 | 0.9052 | 0.344399 |
| ver:class_numeric | 1 | 2.203 | 2.2034 | 0.9790 | 0.325574 |
| ver:condition | 1 | 9.671 | 9.6708 | 4.2971 | 0.041568 * |
| class_numeric:condition | 1 | 1.683 | 1.6831 | 0.7479 | 0.389875 |
| ver:class_numeric:condition | 1 | 0.876 | 0.8756 | 0.3891 | 0.534665 |
| Residuals | 76 | 171.042 | 2.2505 | | |

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

4.3. Analyzing the combined effects of pre-proficiency level, test formats, study subjects, and learning task on test score performance

The follow-up analysis of the combined effects of four factors on test score performance (variable name: test_score) covered three groups of participants, from Reading 2 (read2), Reading 4 (read4), and British-American Civilization (civil), and was divided into two models. The first model involves the combined effects of pre-proficiency level (in the form of pre-test scores; variable name: pre_score), learning task (with and without the recording exercise; variable name: condition), and test format (integrated and split; variable name: ver). A three-way ANOVA was conducted to measure the effects of these three factors on test score performance. Pre-proficiency level had no significant effect (F(1, 59) = 0.74, p > 0.05), and neither its interaction with learning task (F(1, 59) = 0.35, p > 0.05) nor its interaction with test format (F(1, 59) = 0.02, p > 0.05) was significant at the 0.05 level. Test format again had a significant effect on test score performance (F(1, 59) = 5.71, p = 0.02), and the interaction between learning task and test format was also significant at the 0.05 level (F(1, 59) = 4.95, p = 0.03).

Table 5. Analysis of Variance Table of Model 1 (response: test_score)

| Term | Df | Sum Sq | Mean Sq | F value | Pr(>F) |
|---|---|---|---|---|---|
| pre_score | 1 | 1.806 | 1.8060 | 0.7397 | 0.39322 |
| condition | 1 | 1.003 | 1.0033 | 0.4110 | 0.52396 |
| ver | 1 | 13.935 | 13.9348 | 5.7078 | 0.02011 * |
| pre_score:condition | 1 | 0.854 | 0.8538 | 0.3497 | 0.55654 |
| pre_score:ver | 1 | 0.050 | 0.0504 | 0.0206 | 0.88627 |
| condition:ver | 1 | 12.082 | 12.0820 | 4.9488 | 0.02995 * |
| pre_score:condition:ver | 1 | 0.258 | 0.2582 | 0.1058 | 0.74617 |
| Residuals | 59 | 144.041 | 2.4414 | | |

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

The second model involves the combined effects of pre-proficiency level (in the form of pre-test scores; variable name: pre_score), learning task (with and without the recording exercise; variable name: condition), and study subject (civil, read2, read4; variable name: class_numeric). The second three-way ANOVA indicated that the effect of pre-proficiency level on test score performance was not significant at the 0.05 level (F(1, 61) = 0.70, p > 0.05). However, the interaction between pre-proficiency level and study subject was significant at the 0.05 level (F(1, 61) = 4.29, p = 0.04).

Table 6. Analysis of Variance Table of Model 2 (response: test_score)

| Term | Df | Sum Sq | Mean Sq | F value | Pr(>F) |
|---|---|---|---|---|---|
| pre_score | 1 | 1.806 | 1.8060 | 0.6984 | 0.40658 |
| condition | 1 | 1.003 | 1.0033 | 0.3880 | 0.53568 |
| class_numeric | 1 | 1.172 | 1.1717 | 0.4531 | 0.50341 |
| pre_score:condition | 1 | 1.204 | 1.2041 | 0.4656 | 0.49759 |
| pre_score:class_numeric | 1 | 11.105 | 11.1047 | 4.2943 | 0.04247 * |
| Residuals | 61 | 157.740 | 2.5859 | | |

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

4.4. Analyzing the single effect of learning task and of test formats on task performance

The analyses above showed a significant interaction between learning task (condition) and test format on test score performance. The single effects of each of these two factors on task performance were therefore examined separately.
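Both single-effect comparisons in this section rely on Welch's two-sample t-test, which compares two group means without assuming equal variances; this is why the reported degrees of freedom are non-integers (for example, df = 79.113). The Python sketch below computes the t statistic and its Welch-Satterthwaite degrees of freedom from two lists of scores. It is an illustration only, not the code used in the study: the function name `welch_t` and the sample scores are hypothetical. The sign of t follows the argument order (first mean minus second), which matches the negative t values in the split-versus-integrated comparison, where the split mean is the smaller one.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(a, b):
    """Welch's two-sample t statistic and Welch-Satterthwaite df.

    t is computed as mean(a) - mean(b), so its sign depends on argument order.
    Each sample needs at least two scores (statistics.variance requires it),
    and the two variances must not both be zero.
    """
    n1, n2 = len(a), len(b)
    # Squared standard error of each mean, using the sample variance (n - 1).
    se1 = variance(a) / n1
    se2 = variance(b) / n2
    t = (mean(a) - mean(b)) / sqrt(se1 + se2)
    # Welch-Satterthwaite approximation of the degrees of freedom;
    # in general this is not an integer.
    df = (se1 + se2) ** 2 / (se1 ** 2 / (n1 - 1) + se2 ** 2 / (n2 - 1))
    return t, df


# Hypothetical task scores for illustration, not data from the study.
split_scores = [1, 2, 2, 3]
integrated_scores = [2, 3, 3, 4]
t, df = welch_t(split_scores, integrated_scores)
print(f"t = {t:.3f}, df = {df:.2f}")  # prints "t = -1.732, df = 6.00"
```

The Welch df never exceeds n1 + n2 - 2; in this toy example, with equal group sizes and equal variances, it reaches that maximum (6). The p-value then comes from a t distribution with df degrees of freedom: R's `t.test()` applies the Welch correction by default (`var.equal = FALSE`), and SciPy offers `scipy.stats.ttest_ind(a, b, equal_var=False)`.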
First, the single effect of learning task was analyzed by comparing task scores between two conditions: with the recording exercise (experimental; n = 41; class_numeric: read1 and civil) and without it (control; n = 43; class_numeric: read2 and read4). Task performance was analyzed with Welch's two-sample t-test in two modes: single-task and dual-task performance. The t-tests indicated no significant effect of learning task on task performance at the 0.05 level, even though participants in the experimental group scored higher than those in the control group on every task except task 1 and the pair of tasks 1 and 3.

Table 7. The single effect of learning task on task performance

| Task | M control | M experimental | t | df | p-value |
|---|---|---|---|---|---|
| Task 1 | 2.13 | 2.09 | 0.206 | 79.113 | 0.83 |
| Task 2 | 1.697 | 1.878 | -0.7675 | 81.871 | 0.445 |
| Task 3 | 2.348 | 2.512 | -0.85 | 81.04 | 0.397 |
| Tasks 1 and 2 | 3.837 | 3.975 | -0.436 | 81.49 | 0.663 |
| Tasks 1 and 3 | 4.48 | 4.39 | 0.38 | 81.7 | 0.70 |
| Tasks 2 and 3 | 4.04 | 4.60 | -1.91 | 81.802 | 0.059 |

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Second, the single effect of test format was analyzed by comparing task scores between the split (n = 45) and integrated (n = 39) formats. Using the same Welch two-sample t-test, participants in the integrated format scored higher than those in the split format on every single and dual task, and the difference was significant at the 0.05 level for task 1 (t = -2.51, df = 77.9, p = 0.01), for tasks 1 and 2 combined (t = -2.94, df = 76.99, p = 0.004), and for tasks 1 and 3 combined (t = -2.64, df = 75.89, p = 0.01).

Table 8. The single effect of test formats on task performance

| Task | M split | M integrated | t | df | p-value |
|---|---|---|---|---|---|
| Task 1 | 1.88 | 2.38 | -2.51 | 77.9 | 0.01 * |
| Task 2 | 1.6 | 2.0 | -1.72 | 80.77 | 0.08 |
| Task 3 | 2.37 | 2.48 | -0.559 | 76.597 | 0.577 |
| Tasks 1 and 2 | 3.48 | 4.38 | -2.94 | 76.99 | 0.004 ** |
| Tasks 1 and 3 | 4.13 | 4.79 | -2.638 | 75.89 | 0.01 * |
| Tasks 2 and 3 | 4.11 | 4.56 | -1.52 | 81.072 | 0.13 |

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

5. Discussion and implications

The purpose of the present study is to investigate the effects of test format, study subject, learning task, and pre-proficiency level on test score performance, evaluating both their combined and their single effects. First, test format had a significant effect on test score performance: participants in the integrated format performed better than those in the split format. Although it differs in research scope, Tindall-Ford et al. (2015), investigating the influence of material design on geometry test performance, similarly found that participants in the integrated format earned a higher mean score than those in the split format. As in their study, one possible explanation is that learners used self-management strategies to reduce the split-attention effect; in addition, participants in the present study may have understood how to respond to the test items by integrating the questions with the relevant text. Furthermore, the present study showed a significant interaction between test format and learning task, a finding that can be linked with the role of worked examples in lowering cognitive load when learners must relate isolated pieces of information in the reading material. In a study on material design for an introductory accounting course, Sithole (2017) likewise found that participants performed better in the integrated format on recall and transfer tests, and suggested using an integrated textbook as an effective worked example for learning.
Regarding the use of the recording task before the reading performance test for the experimental group, the present study draws on Pouw et al.'s (2019) study of the cognitive basis for split-attention effects to suggest the recording exercise as a means of lowering the extraneous cognitive load involved in maintaining and searching for information in working memory. In other words, the recording exercise used as the learning task in this study may reduce the time learners spend maintaining memory traces while they perform the reading task in the classroom assessment phase. However, the evaluation of the single effect of learning task (the recording exercise) showed no significant difference in task scores between the experimental and control groups. Given the task requirements of the MCQs in the split and integrated formats, this finding raises further practical questions about how individual differences shape learners' reading performance on MCQs, considering that the MCQ format may magnify the influence of reading self-efficacy (Solheim, 2011).

6. Conclusion

This study analyzed factors that are likely to affect reading test performance in the language classroom. Focusing on the split and integrated test formats, its findings generally support reducing the split-attention effect by integrating the relevant parts of the text with their questions in the reading task. The study also sheds light on contributors to reading test performance, since these contributors are likely to promote or hinder the process of reading comprehension. Several suggestions follow for future research with a similar scope.
To manipulate conditions that can reduce split-attention effects in reading assessment in the language classroom, future studies could consider integrating hypertext glosses as technological aids that explain word meanings during reading assessment (Chen, 2014). The assessment of reading comprehension in different formats could also be adapted to a longitudinal design of extensive reading, to evaluate its effectiveness for the development of reading proficiency and related variables (Jeon & Day, 2016).

References

Chandler, P., & Sweller, J. (1991). Cognitive load theory and the format of instruction. Cognition and Instruction, 8(4), 293-332.

Chen, I.J. (2014). Hypertext glosses for foreign language reading comprehension and vocabulary acquisition: Effects of assessment methods. Computer Assisted Language Learning, 29(2), 413-426.

Huynh, C.M.H. (2015). Split-attention in reading comprehension: A case of English as a foreign/second language. 6th International Conference on TESOL (pp. 1-12). SEAMEO. Ho Chi Minh City, Vietnam.

Jeon, E.Y., & Day, R.R. (2016). The effectiveness of ER on reading proficiency: A meta-analysis. Reading in a Foreign Language, 28(2), 246-265.

Paas, F., Renkl, A., & Sweller, J. (2004). Cognitive load theory: Instructional implications of the interaction between information structures and cognitive architecture. Instructional Science, 32(1), 1-8.

Pouw, W., Rop, G., De Koning, B., & Paas, F. (2019). The cognitive basis for the split-attention effect. Journal of Experimental Psychology: General, 148(11), 2058-2075.

Schnotz, W., & Kürschner, C. (2007). A reconsideration of cognitive load theory. Educational Psychology Review, 19(4), 469-508.

Sithole, S.T. (2017). Enhancing students understanding of introductory accounting by integrating split-attention instructional material. Accounting Research Journal, 30(3), 283-300.

Solheim, O.J. (2011). The impact of reading self-efficacy and task value on reading comprehension scores in different item formats. Reading Psychology, 32(1), 1-27.

Sweller, J. (1994). Cognitive load theory, learning difficulty, and instructional design. Learning and Instruction, 4(4), 295-312.

Sweller, J., Ayres, P., & Kalyuga, S. (2011). Cognitive load theory. New York, NY: Springer.

Tindall-Ford, S., Agostinho, S., Bokosmaty, S., Paas, F., & Chandler, P. (2015). Computer-based learning of geometry from integrated and split-attention worked examples: The power of self-management. Journal of Educational Technology & Society, 18(4), 89-99.

A STUDY OF FACTORS IN AN EXPERIMENT APPLYING THE INTEGRATED TEST FORMAT TO ENGLISH READING COMPREHENSION ASSESSMENT

Abstract: This experimental study was conducted to evaluate the factors involved in assessing English reading comprehension skills in the classroom. Based on a comparison of reading assessment results between two test formats, the split format and the integrated format, the study analyzes factors including test format, related study subject, learning task, and reading proficiency level. The experimental results show that participants who took the integrated format scored higher than those who took the split format. In addition, there were interaction effects between test format and learning task, as well as between reading proficiency level and the related study subject. The study also shows that, together with test format, the design of the assessment tasks affects assessment results.

Keywords: Split-attention, integrated format, reading assessment, reading performance
