Lecture: Computer Architecture - Chapter 7: Computer Memory - Nguyễn Kim Khánh

7.1. Memory System Overview

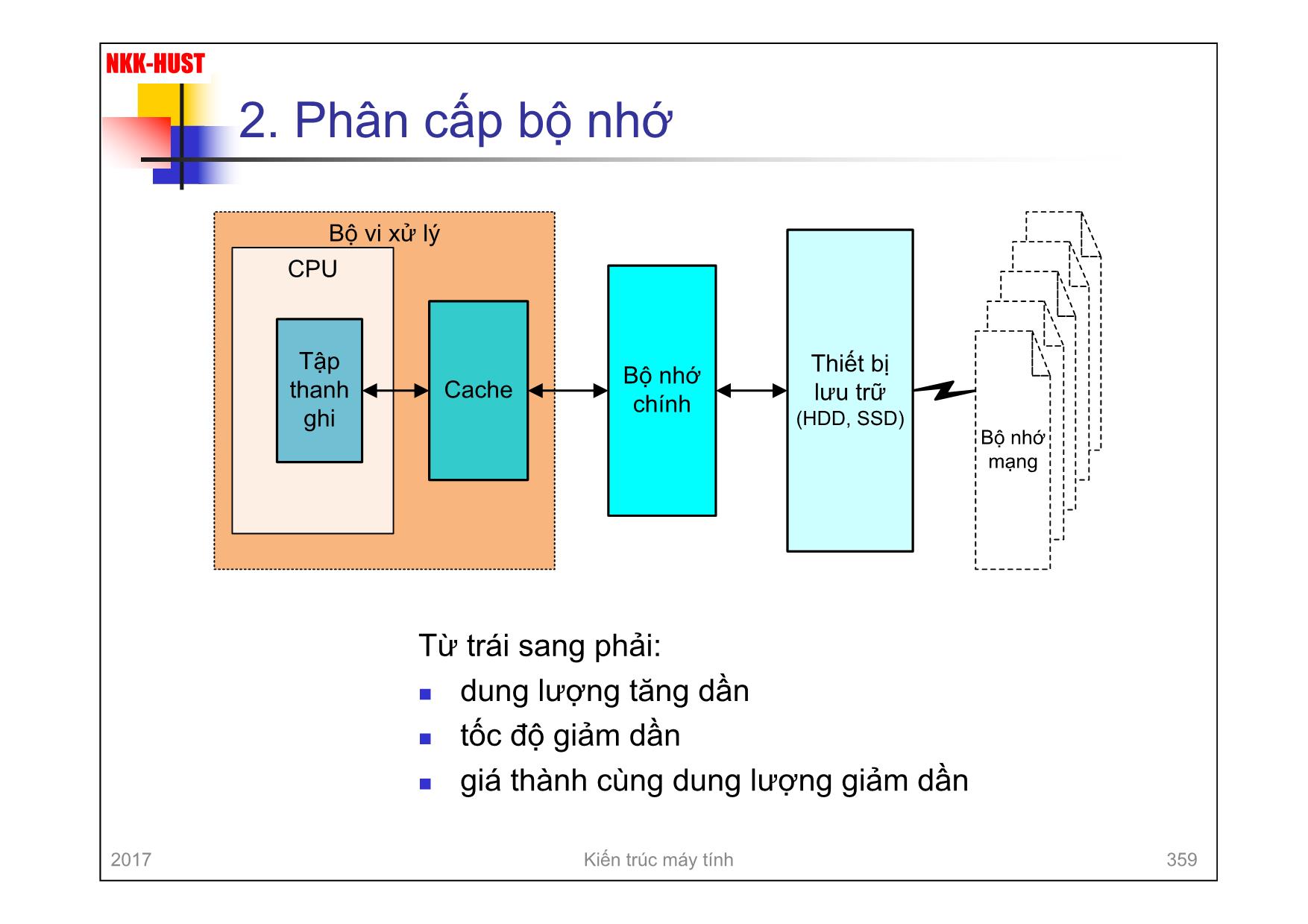

- Location
  - Inside the CPU:
    - register set
  - Internal memory:
    - main memory
    - cache memory
  - External memory:
    - storage devices
- Capacity
  - Word length (in bits)
  - Number of words
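Capacity is determined by the two parameters listed above, so it is simply their product. The short Python sketch below illustrates the arithmetic. The function name `capacity_bits` and the example sizes (2^20 words of 32 bits) are hypothetical and were chosen only for illustration.

```python
def capacity_bits(word_length_bits: int, num_words: int) -> int:
    """Memory capacity = word length (bits) x number of words."""
    if word_length_bits <= 0 or num_words < 0:
        raise ValueError("word length must be positive and word count non-negative")
    return word_length_bits * num_words


if __name__ == "__main__":
    # Hypothetical memory: 2**20 words, each 32 bits long
    bits = capacity_bits(32, 2**20)
    print(bits, "bits =", bits // 8, "bytes =", bits // 8 // 2**20, "MiB")
```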


… disk array: The data are striped across the available disks. This is best understood by considering Figure 6.9. All of the user and system data are viewed as being stored on a logical disk. The logical disk is divided into strips; these strips may be physical blocks, sectors, or some other unit. The strips are mapped round robin to consecutive physical disks in the RAID array. A set of logically consecutive strips that maps exactly one strip to each array member is referred to as a stripe. In an n-disk array, the first n logical strips are physically stored as the first strip on each of the n disks, forming the first stripe; the second n strips are distributed as the second strips on each disk; and so on. The advantage of this layout is that if a single I/O request consists of multiple logically contiguous strips, then up to n strips for that request can be handled in parallel, greatly reducing the I/O transfer time. Figure 6.9 indicates the use of array management software to map between logical and physical disk space. This software may execute either in the disk subsystem or in a host computer.

[Figure 6.8 RAID Levels: (a) RAID 0 (Nonredundant), (b) RAID 1 (Mirrored), (c) RAID 2 (Redundancy through Hamming code)]

2017, Computer Architecture, NKK-HUST

RAID 3 & 4
[Figure 6.8 RAID Levels (continued): (d) RAID 3 (Bit-interleaved parity), (e) RAID 4 (Block-level parity)]

RAID 5 & 6

[Figure 6.8 RAID Levels (continued): (f) RAID 5 (Block-level distributed parity), (g) RAID 6 (Dual redundancy)]
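The parity blocks in RAID 3, 4, and 5 (P(b), P(0-3), and so on) are the bitwise exclusive-OR of the corresponding data, so any single lost block can be recomputed from the survivors. The Python sketch below illustrates this, under the assumption that blocks are equal-length byte strings. It also shows one way RAID 5 can rotate the parity block across disks. That rotation order (parity for stripe 0 on the last disk, moving one disk to the left per stripe) is an assumption that resembles the layout in Figure 6.8(f); other orders are also used. The second check block Q used by RAID 6 relies on a different algorithm and is not shown. All function names are hypothetical.

```python
from functools import reduce


def xor_parity(blocks: list[bytes]) -> bytes:
    """Bytewise XOR of equal-length blocks, e.g. P(0-3) = block0 ^ block1 ^ block2 ^ block3."""
    if not blocks or any(len(b) != len(blocks[0]) for b in blocks):
        raise ValueError("need one or more blocks of equal length")
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def rebuild_lost_block(surviving: list[bytes], parity: bytes) -> bytes:
    """XOR of the parity block with every surviving data block yields the missing block."""
    return xor_parity(surviving + [parity])


def raid5_parity_disk(stripe: int, n_disks: int) -> int:
    """Disk holding the parity of `stripe`, rotating one disk to the left per stripe.

    Assumption: parity of stripe 0 sits on the last disk; other rotation orders exist.
    """
    return (n_disks - 1 - stripe) % n_disks


if __name__ == "__main__":
    data = [b"\x01\x02", b"\x10\x20", b"\xff\x00", b"\x0f\xf0"]  # hypothetical blocks 0..3
    p = xor_parity(data)  # P(0-3)
    # Pretend the disk holding block 2 failed and rebuild it from the others plus parity
    recovered = rebuild_lost_block([data[0], data[1], data[3]], p)
    print(recovered == data[2])
    print([raid5_parity_disk(s, 5) for s in range(6)])
```

Because XOR is its own inverse, the same routine that computes the parity also performs the reconstruction.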
RAID 0 data mapping

RAID 0 FOR HIGH DATA TRANSFER CAPACITY

The performance of any of the RAID levels depends critically on the request patterns of the host system and on the layout of the data. These issues can be most clearly addressed in RAID 0, where the impact of redundancy does not interfere with the analysis. First, let us consider the use of RAID 0 to achieve a high data transfer rate. For applications to experience a high transfer rate, two requirements must be met. First, a high transfer capacity must exist along the entire path between host memory and the individual disk drives. This includes internal controller buses, host system I/O buses, I/O adapters, and host memory buses. The second requirement is that the application must make I/O requests that drive the disk array efficiently. This requirement is met if the typical request is for large amounts of logically contiguous data, compared to the size of a strip. In this case, a single I/O request involves the parallel transfer of data from multiple disks, increasing the effective transfer rate compared to a single-disk transfer.
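The round-robin strip mapping and its effect on large requests can be sketched in a few lines of Python. This sketch assumes that both strips and disks are numbered from 0. Logical strip k then lands on disk k mod n as that disk's (k div n)-th strip. A request for many contiguous strips splits into per-disk work lists that can proceed in parallel, while a one-strip request occupies a single disk. The function names are hypothetical.

```python
from collections import defaultdict


def raid0_location(logical_strip: int, n_disks: int) -> tuple[int, int]:
    """Round-robin RAID 0 mapping: strip k -> (disk k mod n, position k div n on that disk)."""
    if n_disks <= 0 or logical_strip < 0:
        raise ValueError("need n_disks > 0 and logical_strip >= 0")
    stripe, disk = divmod(logical_strip, n_disks)
    return disk, stripe


def split_request(first_strip: int, count: int, n_disks: int) -> dict[int, list[int]]:
    """Break a request for `count` logically contiguous strips into per-disk work lists.

    Each list holds the strip positions one disk must transfer; the disks can work in parallel.
    """
    per_disk: dict[int, list[int]] = defaultdict(list)
    for k in range(first_strip, first_strip + count):
        disk, position = raid0_location(k, n_disks)
        per_disk[disk].append(position)
    return dict(per_disk)


if __name__ == "__main__":
    # Layout of a 4-disk array holding logical strips 0..15 (cf. Figure 6.9)
    for d in range(4):
        print(f"physical disk {d}:", [k for k in range(16) if raid0_location(k, 4)[0] == d])
    # A large request (8 contiguous strips) keeps all 4 disks busy, 2 strips each
    print(split_request(2, 8, 4))
    # A small request (1 strip) touches a single disk
    print(split_request(5, 1, 4))
```

In this model, the 8-strip request finishes in roughly the time needed to transfer 2 strips per disk, because the work is spread across 4 disks. Seek time and rotational latency are ignored here.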
RAID 0 FOR HIGH I/O REQUEST RATE

In a transaction-oriented environment, the user is typically more concerned with response time than with transfer rate. For an individual I/O request for a small amount of data, the I/O time is dominated by the motion of the disk heads (seek time) and the movement of the disk (rotational latency). In a transaction environment, there may be hundreds of I/O requests per second. A disk array can provide high I/O execution rates by balancing the I/O load across multiple disks. Effective load balancing is achieved only if there are typically …

[Figure 6.9 Data Mapping for a RAID Level 0 Array: array management software maps logical-disk strips 0 to 15 round robin onto physical disks 0 to 3]

7.5. Virtual Memory

- Concept of virtual memory: it consists of main memory and external memory, which the CPU treats as a single memory (main memory).
- Techniques for implementing virtual memory:
  - Paging: the memory address space is divided into pages of equal size that lie contiguously. Common page size: 4 KiB.
  - Segmentation: the memory space is divided into segments of variable size; segments may overlap.

Paging

- Memory is divided into equal-size parts called page frames
- The program (process) is divided into pages
- The required page frame numbers are allocated to the process
- The OS maintains a list of free page frames
- A process does not require contiguous page frames
- A page table is used to manage the mapping

Allocation of page frames

… But these addresses are not fixed.
They will change each time a process is swapped in. To solve this problem, a distinction is made between logical addresses and physical addresses. A logical address is expressed as a location relative to the beginning of the program. Instructions in the program contain only logical addresses. A physical address is an actual location in main memory. When the processor executes a process, it automatically converts from logical to physical address by adding the current starting location of the process, called its base address, to each logical address. This is another example of a processor hardware feature designed to meet an OS requirement. The exact nature of this hardware feature depends on the memory management strategy in use. We will see several examples later in this chapter.

Paging

Both unequal fixed-size and variable-size partitions are inefficient in the use of memory. Suppose, however, that memory is partitioned into equal fixed-size chunks that are relatively small, and that each process is also divided into small fixed-size chunks of some size. Then the chunks of a program, known as pages, could be assigned to available chunks of memory, known as frames, or page frames. At most, then, the wasted space in memory for that process is a fraction of the last page. Figure 8.15 shows an example of the use of pages and frames. At a given point in time, some of the frames in memory are in use and some are free. The list of free frames is maintained by the OS. Process A, stored on disk, consists of four pages.
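The following Python sketch illustrates the paging scheme described here. The OS takes frames from its free-frame list to build a page table, and each logical address is split into a (page number, relative address) pair and mapped to a (frame number, relative address) pair. Several parts are assumptions for illustration: the 4 KiB page size, the free-frame numbers, taking frames from the front of the list, and the names `load_process` and `translate`. A real OS may use a different allocation policy.

```python
PAGE_SIZE = 4096  # assumed 4 KiB pages, the common size noted in section 7.5


def load_process(num_pages: int, free_frames: list[int]) -> list[int]:
    """Give each page of a process a free frame and return the page table.

    The page table is a list indexed by page number whose values are frame numbers.
    The frames need not be contiguous. Taking them from the front of the free-frame
    list is an illustrative choice, not a prescribed OS policy.
    """
    if num_pages > len(free_frames):
        raise MemoryError("not enough free frames to load the process")
    page_table = free_frames[:num_pages]
    del free_frames[:num_pages]  # these frames are now in use
    return page_table


def translate(page_table: list[int], logical_address: int) -> int:
    """Map a logical address (page number, relative address) to a physical address."""
    if logical_address < 0:
        raise ValueError("logical address must be non-negative")
    page, offset = divmod(logical_address, PAGE_SIZE)
    if page >= len(page_table):
        raise IndexError(f"page {page} does not belong to this process")
    return page_table[page] * PAGE_SIZE + offset


if __name__ == "__main__":
    free_list = [13, 14, 15, 18, 20]  # hypothetical free frames
    table = load_process(4, free_list)  # process A has four pages
    print(table, free_list)
    print(translate(table, 1 * PAGE_SIZE + 30))  # page 1, offset 30 -> frame 14, offset 30
```

Because the page size is a power of two, `divmod` by `PAGE_SIZE` is equivalent to taking the low 12 bits as the offset and the remaining high bits as the page number.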
[Figure 8.15 Allocation of Free Frames: (a) Before, free frame list 13, 14, 15, 18, 20; (b) After, the four pages of process A are loaded, the Process A page table is set up, and the free frame list is 20]

Logical and physical addresses in paging

When it comes time to load this process, the OS finds four free frames and loads the four pages of process A into the four frames. Now suppose, as in this example, that there are not sufficient unused contiguous frames to hold the process. Does this prevent the OS from loading A? The answer is no, because we can once again use the concept of logical address. A simple base address will no longer suffice. Rather, the OS maintains a page table for each process. The page table shows the frame location for each page of the process. Within the program, each logical address consists of a page number and a relative address within the page. Recall that in the case of simple partitioning, a logical address is the location of a word relative to the beginning of the program; the processor translates that into a physical address. With paging, the logical-to-physical address translation is still done by processor hardware. The processor must know how to access the page table of the current process. Presented with a logical address (page number, relative address), the processor uses the page table to produce a physical address (frame number, relative address). An example is shown in Figure 8.16.

This approach solves the problems raised earlier. Main memory is divided into many small equal-size frames. Each process is divided into frame-size pages: smaller processes require fewer pages, larger processes require more.
When a process is brought in, its pages are loaded into available frames, and a page table is set up.

[Figure 8.16 Logical and Physical Addresses: a logical address (page number, relative address within page) is mapped through the Process A page table to a physical address (frame number, relative address within frame)]

Operating principle of paged virtual memory

- Demand paging
  - Not all pages of a process need to be in memory
  - Only the pages that are requested are loaded into memory
- Page fault
  - The requested page is not in memory
  - The OS must swap the requested page in
  - It may have to swap some other page out to make room
  - A page must be chosen for eviction

Thrashing

- Too many processes in too little memory
- The OS spends all its time swapping
- Little or no real work gets done
- The disk light is on all the time
- Solutions:
  - Page replacement algorithms
  - Reduce the number of running processes
  - Add more memory

Benefits

- A process does not need to be entirely in memory to run
- Requested pages can be swapped in
- Processes larger than the total available memory can therefore be run
- Main memory is called real memory
- The user perceives a memory larger than the real memory

Page table structure

… inverted page table for each real memory page frame rather than one per virtual page. Thus a fixed proportion of real memory is required for the tables regardless of the number of processes or virtual pages supported. Because more than one virtual address may map into the same hash table entry, a chaining technique is used for managing the overflow. The hashing technique results in chains that are typically short, between one and two entries.
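The following Python sketch shows a hashed inverted page table with chaining. The table has one entry per physical frame, so an entry's index is its frame number and the table's size depends only on physical memory. A lookup hashes (process ID, page number) and follows the chain until it finds a matching entry. If no entry matches, the result is a page fault. This sketch differs from Figure 8.17, which hashes directly into the table: so that any free frame can hold any page, it adds a separate hash anchor array that points to the first frame of each chain. The hash function, the class, and its method names are all hypothetical. Control bits are omitted.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Entry:
    pid: int                    # process that owns the page held in this frame
    page: int                   # virtual page number held in this frame
    next: Optional[int] = None  # next frame in the same hash chain


class InvertedPageTable:
    """One entry per physical frame, so entry index == frame number."""

    def __init__(self, num_frames: int) -> None:
        self.frames: list[Optional[Entry]] = [None] * num_frames
        # Assumed hash anchor array: hash value -> first frame of its chain
        self.anchor: list[Optional[int]] = [None] * num_frames

    def _hash(self, pid: int, page: int) -> int:
        # Hypothetical hash function, chosen only for illustration
        return (pid * 31 + page) % len(self.anchor)

    def map(self, pid: int, page: int, frame: int) -> None:
        """Record that (pid, page) now lives in `frame`, linked at the head of its chain."""
        if self.frames[frame] is not None:
            raise ValueError(f"frame {frame} is already in use")
        h = self._hash(pid, page)
        self.frames[frame] = Entry(pid, page, self.anchor[h])
        self.anchor[h] = frame

    def lookup(self, pid: int, page: int) -> Optional[int]:
        """Return the frame number holding (pid, page), or None to signal a page fault."""
        frame = self.anchor[self._hash(pid, page)]
        while frame is not None:
            entry = self.frames[frame]
            if entry.pid == pid and entry.page == page:
                return frame
            frame = entry.next
        return None


if __name__ == "__main__":
    ipt = InvertedPageTable(num_frames=8)
    ipt.map(pid=1, page=0, frame=5)
    ipt.map(pid=1, page=8, frame=2)  # same hash value as (1, 0) with this hash: chained
    print(ipt.lookup(1, 8), ipt.lookup(1, 0), ipt.lookup(2, 0))
```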
The page table’s structure is called inverted because it indexes page table entries by frame number rather than by virtual page number.

[Figure 8.17 Inverted Page Table Structure: virtual address (n-bit page #, offset) → hash function → inverted page table with one entry for each physical memory frame (entries 0 to 2^m - 1, each holding page #, process ID, control bits, chain) → real address (m-bit frame #, offset)]

Translation Lookaside Buffer

In principle, then, every virtual memory reference can cause two physical memory accesses: one to fetch the appropriate page table entry, and one to fetch the desired data. Thus, a straightforward virtual memory scheme would have the effect of doubling the memory access time. To overcome this problem, most virtual memory schemes make use of a special cache for page table entries, usually called a translation lookaside buffer (TLB). This cache functions in the same way as a memory cache and contains those page table entries that have been most recently used. Figure 8.18 is a flowchart that shows the use of the TLB. By the principle of locality, most virtual memory references will be to locations in recently used pages. Therefore, most references will involve page table entries in the cache. Studies of the VAX TLB have shown that this scheme can significantly improve performance [CLAR85, SATY81].

Memory in a PC

- Cache memory, integrated on the processor chip:
  - L1: instruction cache and data cache
  - L2, L3
- Main memory: exists in the form of RAM modules

Memory in a PC (continued)

- The ROM BIOS contains the following programs:
  - POST (Power On Self Test)
  - CMOS Setup
  - Bootstrap loader
  - Basic input/output drivers (BIOS)
- CMOS RAM:
  - Holds system configuration information
  - System clock
  - Has its own battery
- Video RAM: holds the display (screen) information
- Various types of external memory

End of Chapter 7
