A boosting classification approach based on SOM

The self-organizing map (SOM) is well known for its ability to visualize data and reduce its dimensionality, and it has been a useful unsupervised tool for clustering problems for years. In this paper, a new classification framework based on SOM is introduced. In this approach, SOM is combined with learning vector quantization (LVQ) to form a modified version of the SOM classifier, SOM-LVQ. The classification system is further improved by applying an adaptive boosting algorithm with SOM-LVQ classifiers as base learners. Two decision fusion strategies are adopted in the boosting algorithm: majority voting and weighted voting. Experimental results on a real dataset show that the proposed classification approach outperforms the traditional supervised SOM. The results also suggest that this model can be applied to real classification problems.
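The following minimal Python sketch shows one SOM-LVQ base learner. It illustrates the idea under our own assumptions and is not the author's code. The class and method names (`SomLvq`, `predict_with_weight`) are hypothetical, as are the initialization from random training samples, the Gaussian neighborhood with linearly decaying learning rate and radius, the use of the basic LVQ1 update rule, and every default value. The structure follows the paper's description:

- unsupervised SOM training;
- majority-vote labeling of the nodes;
- LVQ fine-tuning;
- a per-node count of how often the node is the BMU, set to a very small value if the node never wins. The weighted voting strategy uses this count as the node weight.

Counting wins only during the LVQ phase is a further assumption.

```python
import math
import random
from collections import Counter


def _sqdist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((p - q) ** 2 for p, q in zip(a, b))


class SomLvq:
    """Hypothetical SOM-LVQ base learner (SOM ordering, node labelling, LVQ1 tuning)."""

    def __init__(self, rows=10, cols=10, seed=0):
        self.rows, self.cols = rows, cols
        self.rng = random.Random(seed)
        self.nodes, self.labels, self.hits = [], [], []

    def bmu(self, x):
        """Index of the best-matching unit, i.e. the node closest to x."""
        return min(range(len(self.nodes)), key=lambda j: _sqdist(self.nodes[j], x))

    def fit(self, X, y, som_epochs=20, lvq_epochs=10, lr0=0.5, lvq_lr0=0.05):
        n = self.rows * self.cols
        grid = [(j // self.cols, j % self.cols) for j in range(n)]
        # Initialise node weight vectors from randomly chosen training samples (assumption).
        self.nodes = [list(self.rng.choice(X)) for _ in range(n)]
        sigma0 = max(self.rows, self.cols) / 2.0
        steps, t = som_epochs * len(X), 0

        # Phase 1: unsupervised SOM training; class labels are not used here.
        for _ in range(som_epochs):
            for i in self.rng.sample(range(len(X)), len(X)):
                frac = t / steps
                lr = lr0 * (1 - frac)
                sigma = max(sigma0 * (1 - frac), 0.5)
                w = self.bmu(X[i])
                for j in range(n):
                    d2 = (grid[j][0] - grid[w][0]) ** 2 + (grid[j][1] - grid[w][1]) ** 2
                    h = math.exp(-d2 / (2 * sigma ** 2))  # Gaussian neighbourhood
                    self.nodes[j] = [v + lr * h * (xv - v) for v, xv in zip(self.nodes[j], X[i])]
                t += 1

        # Phase 2: label each node by majority vote of the training samples it wins.
        votes = [Counter() for _ in range(n)]
        for xi, yi in zip(X, y):
            votes[self.bmu(xi)][yi] += 1
        default = Counter(y).most_common(1)[0][0]  # used for nodes that win nothing
        self.labels = [v.most_common(1)[0][0] if v else default for v in votes]

        # Phase 3: LVQ1, pulling the BMU toward same-class samples and pushing it away otherwise.
        self.hits = [0] * n
        for epoch in range(lvq_epochs):
            lr = lvq_lr0 * (1 - epoch / lvq_epochs)
            for i in self.rng.sample(range(len(X)), len(X)):
                w = self.bmu(X[i])
                self.hits[w] += 1  # BMU win count, later used as the node weight
                sign = 1.0 if self.labels[w] == y[i] else -1.0
                self.nodes[w] = [v + sign * lr * (xv - v) for v, xv in zip(self.nodes[w], X[i])]
        # Nodes that never won receive a very small weight instead of zero.
        self.hits = [c if c > 0 else 1e-6 for c in self.hits]
        return self

    def predict_with_weight(self, x):
        """Return (label of the BMU, BMU weight) for one sample."""
        w = self.bmu(x)
        return self.labels[w], self.hits[w]

    def predict(self, x):
        """Return only the label of the BMU."""
        return self.predict_with_weight(x)[0]
```

For the experimental setting reported in the paper, one would presumably call `SomLvq(rows=10, cols=10).fit(X_train, y_train)`. Here `X_train` is a list of feature vectors and `y_train` holds the matching class labels; both names are hypothetical. The pure-Python distance loops keep the sketch dependency-free, but they are slow. A NumPy version would vectorize the BMU search.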


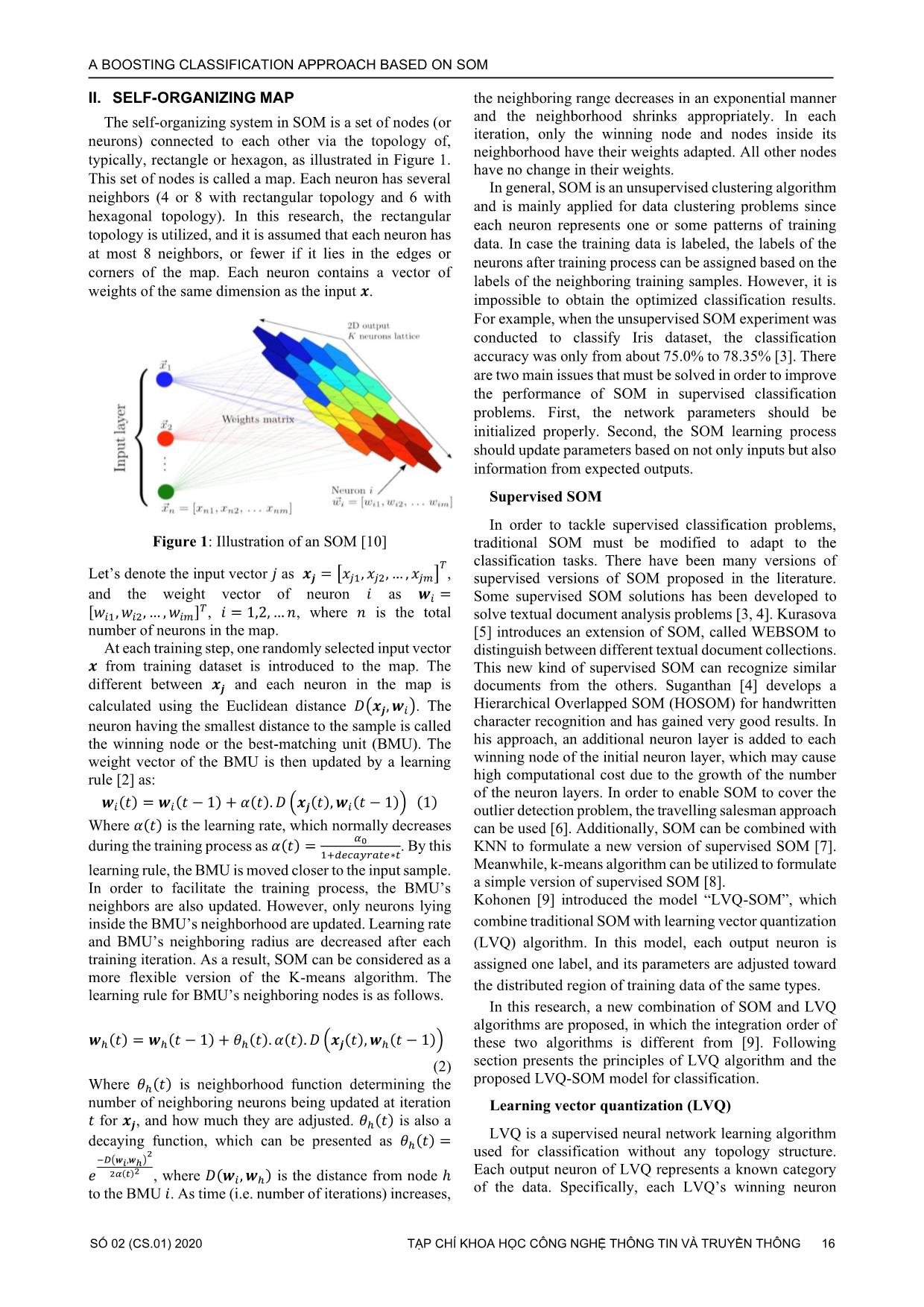

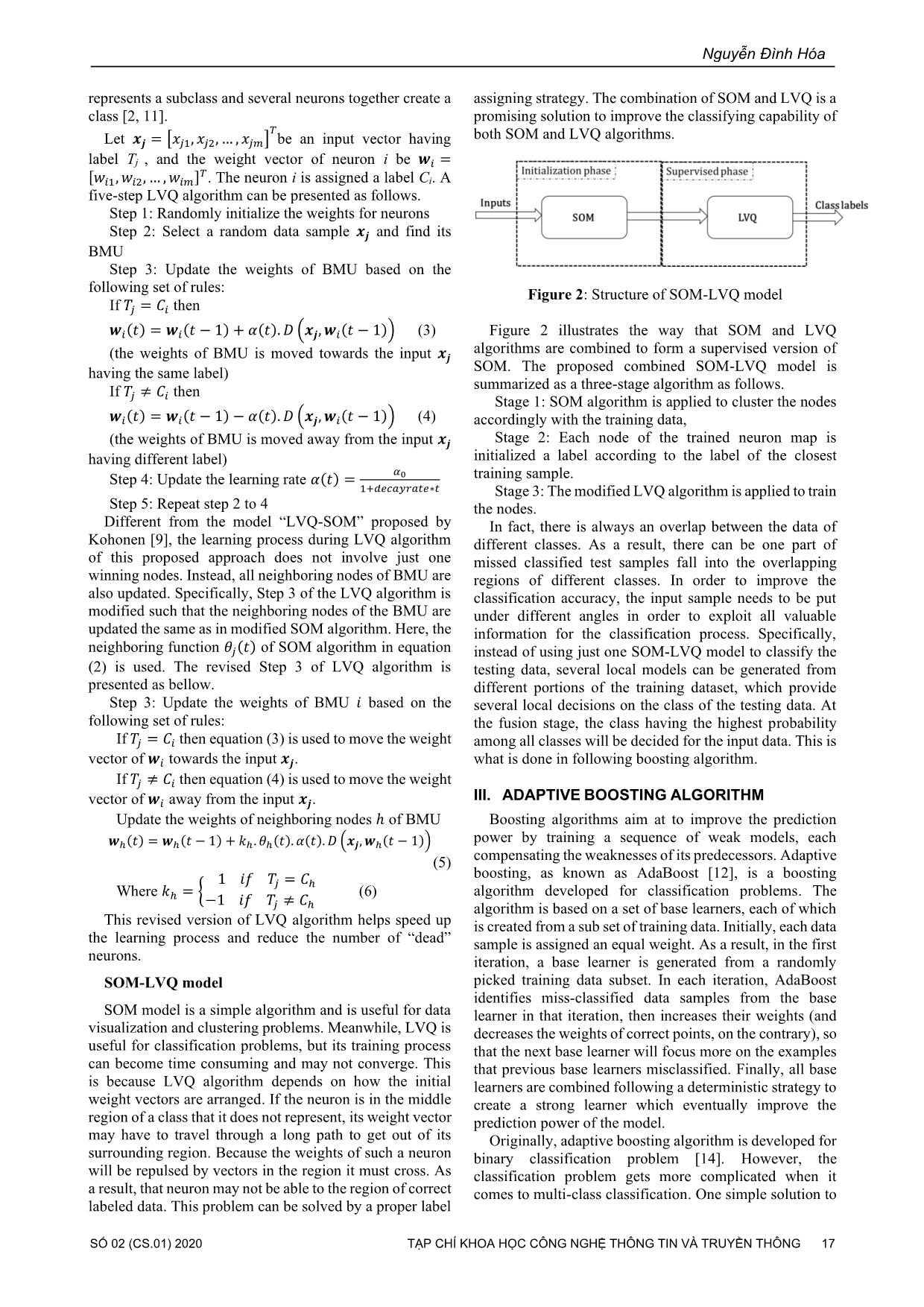

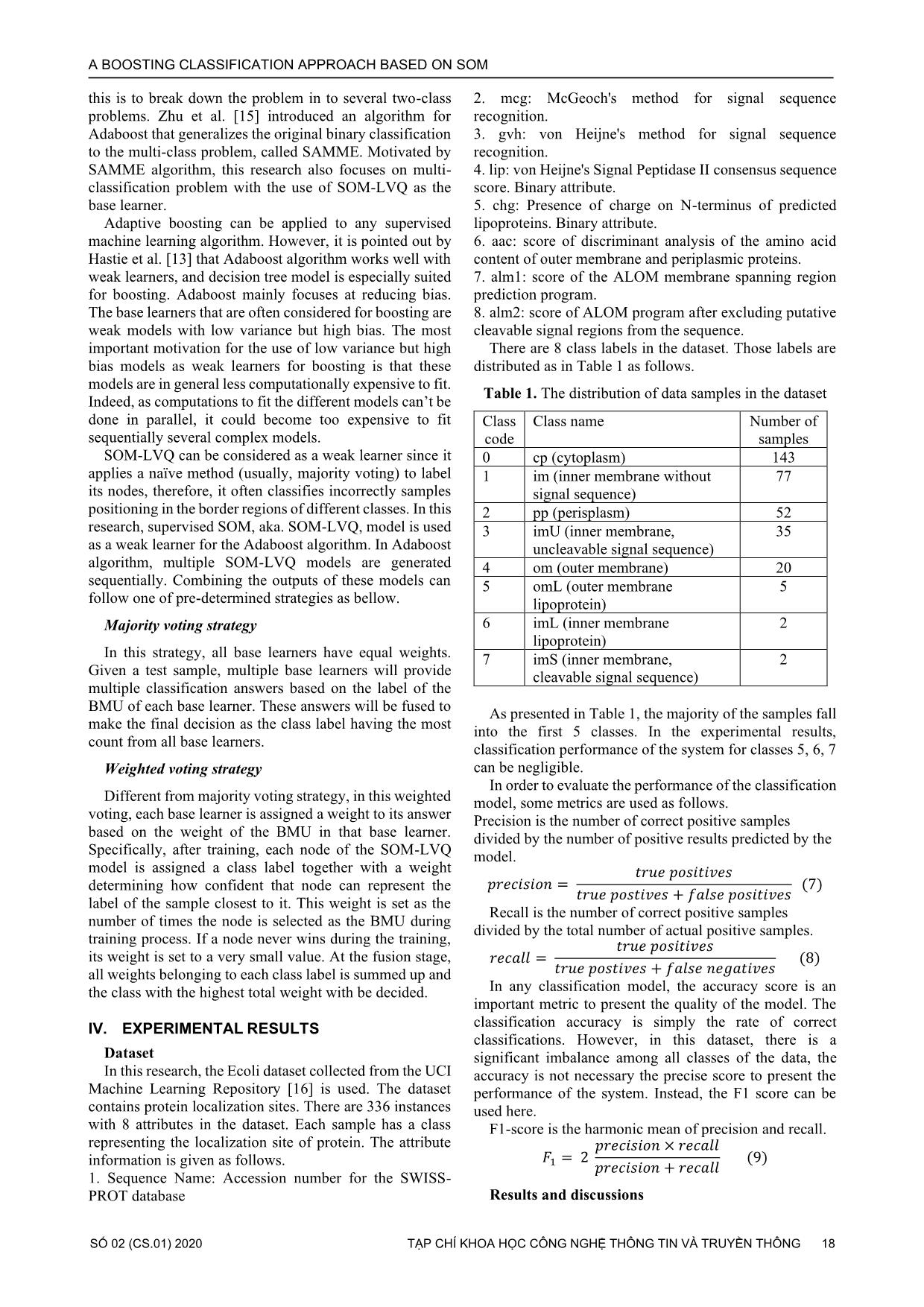

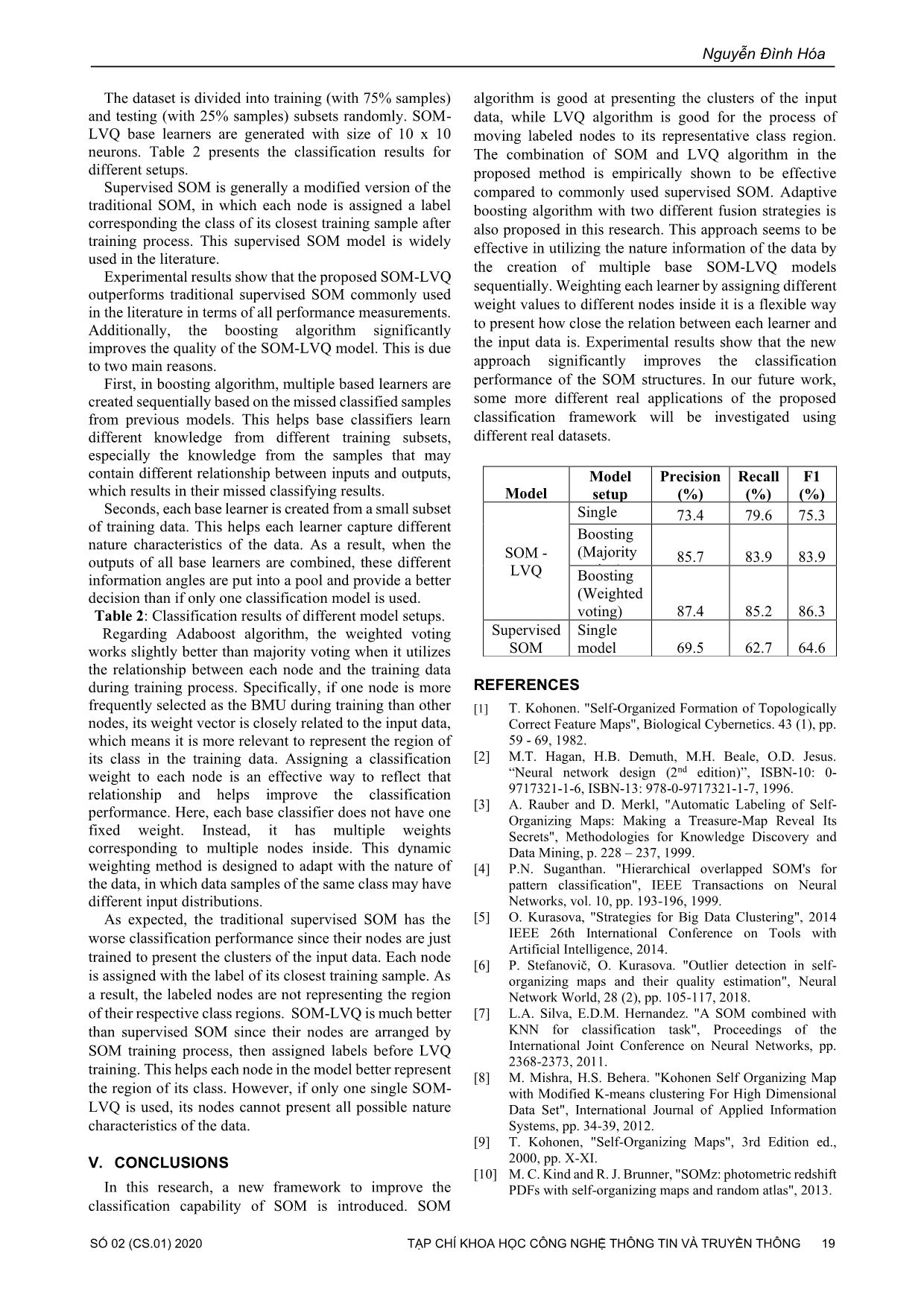
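The boosting loop and the two fusion rules can be sketched in the same hedged spirit. The function names are hypothetical. A base learner is represented by any callable that returns `(label, bmu_weight)` for a sample, for instance the BMU label and BMU win count of a SOM-LVQ model.

The paper does not state its exact reweighting rule. We therefore assume the SAMME update of Zhu et al. [15], `alpha = log((1 - err) / err) + log(K - 1)`, together with weighted resampling of a fixed-size training subset for each new learner. As in the paper's two fusion strategies, the learner weight `alpha` only drives sample reweighting here and is not used at voting time.

```python
import math
import random
from collections import Counter, defaultdict


def majority_vote(answers):
    """Equal-weight fusion: every (label, bmu_weight) answer counts as one vote."""
    return Counter(label for label, _ in answers).most_common(1)[0][0]


def weighted_vote(answers):
    """Sum BMU weights per class label and return the class with the largest total."""
    totals = defaultdict(float)
    for label, bmu_weight in answers:
        totals[label] += bmu_weight
    return max(totals, key=totals.get)


def samme_update(sample_weights, missed, n_classes):
    """One SAMME reweighting step; returns (normalised weights, learner alpha)."""
    err = sum(w for w, m in zip(sample_weights, missed) if m) / sum(sample_weights)
    err = min(max(err, 1e-10), 1 - 1e-10)  # keep log() finite for perfect/useless learners
    alpha = math.log((1 - err) / err) + math.log(n_classes - 1)
    boosted = [w * math.exp(alpha) if m else w for w, m in zip(sample_weights, missed)]
    total = sum(boosted)
    return [w / total for w in boosted], alpha


def boost(X, y, fit_base, n_learners, subset_size, n_classes, seed=0):
    """Train base learners one after another on weighted resamples of (X, y).

    fit_base(X_sub, y_sub) is a hypothetical hook that must return a callable
    mapping a sample to a (label, bmu_weight) pair.
    """
    rng = random.Random(seed)
    weights = [1.0 / len(X)] * len(X)
    learners = []
    for _ in range(n_learners):
        # Misclassified samples carry more weight, so they are drawn more often.
        idx = rng.choices(range(len(X)), weights=weights, k=subset_size)
        predict = fit_base([X[i] for i in idx], [y[i] for i in idx])
        learners.append(predict)
        missed = [predict(xi)[0] != yi for xi, yi in zip(X, y)]
        weights, _alpha = samme_update(weights, missed, n_classes)
    return learners


def classify(learners, x, strategy=weighted_vote):
    """Fuse the answers of all base learners for one sample."""
    return strategy([predict(x) for predict in learners])
```

The two strategies can disagree. `majority_vote([("cp", 1.0), ("im", 5.0), ("cp", 2.0)])` returns `"cp"`, whereas `weighted_vote` on the same answers returns `"im"`, because the single "im" vote comes from a node that won far more often during training.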

[…] the region of a class that it does not represent, its weight vector may have to travel a long path to get out of its surrounding region, because the weights of such a neuron will be repelled by the vectors in the region it must cross. As a result, that neuron may not be able to reach the region of correctly labeled data. This problem can be solved by a proper label […]

[…] in the middle create a strong learner, which eventually improves the prediction power of the model.

Originally, the adaptive boosting algorithm was developed for binary classification problems [14]. However, the classification problem becomes more complicated in the multi-class case. One simple solution is to break the problem down into several two-class problems. Zhu et al. [15] introduced an AdaBoost algorithm called SAMME, which generalizes the original binary classification to the multi-class problem. Motivated by SAMME, this research also focuses on the multi-class classification problem, using SOM-LVQ as the base learner.

Adaptive boosting can be applied to any supervised machine learning algorithm. However, Hastie et al. [13] point out that AdaBoost works well with weak learners, and that decision trees are especially well suited for boosting. AdaBoost mainly focuses on reducing bias. Therefore, the base learners usually considered for boosting are weak models with low variance but high bias. The most important motivation for using such models as weak learners is that they are, in general, less computationally expensive to fit. Indeed, because the models cannot be fitted in parallel, fitting several complex models in sequence could become too expensive.

SOM-LVQ can be considered a weak learner. It applies a naïve method (usually majority voting) to label its nodes, so it often misclassifies samples located in the border regions between classes. In this research, the supervised SOM-LVQ model is used as the weak learner for the AdaBoost algorithm. In AdaBoost, multiple SOM-LVQ models are generated sequentially. Their outputs can be combined using one of the following predetermined strategies.

Majority voting strategy

In this strategy, all base learners have equal weights. Given a test sample, each base learner provides a classification answer: the label of its best-matching unit (BMU). These answers are fused into the final decision, which is the class label with the highest count across all base learners.

Weighted voting strategy

Unlike majority voting, weighted voting weights each base learner's answer by the weight of its BMU. Specifically, after training, each node of the SOM-LVQ model is assigned a class label together with a weight. This weight reflects how confidently the node represents the label of the samples closest to it. It is set to the number of times the node is selected as the BMU during training. If a node never wins during training, its weight is set to a very small value. At the fusion stage, the weights for each class label are summed, and the class with the highest total weight is chosen.

IV. EXPERIMENTAL RESULTS

Dataset

In this research, the Ecoli dataset from the UCI Machine Learning Repository [16] is used. The dataset describes protein localization sites. It contains 336 instances with 8 attributes. Each sample has a class representing the localization site of the protein. The attributes are as follows.

1. Sequence Name: accession number for the SWISS-PROT database.
2. mcg: McGeoch's method for signal sequence recognition.
3. gvh: von Heijne's method for signal sequence recognition.
4. lip: von Heijne's Signal Peptidase II consensus sequence score. Binary attribute.
5. chg: presence of charge on the N-terminus of predicted lipoproteins. Binary attribute.
6. aac: score of discriminant analysis of the amino acid content of outer membrane and periplasmic proteins.
7. alm1: score of the ALOM membrane-spanning region prediction program.
8. alm2: score of the ALOM program after excluding putative cleavable signal regions from the sequence.

There are 8 class labels in the dataset, distributed as shown in Table 1.

Table 1. The distribution of data samples in the dataset

| Class code | Class name | Number of samples |
|---|---|---|
| 0 | cp (cytoplasm) | 143 |
| 1 | im (inner membrane without signal sequence) | 77 |
| 2 | pp (periplasm) | 52 |
| 3 | imU (inner membrane, uncleavable signal sequence) | 35 |
| 4 | om (outer membrane) | 20 |
| 5 | omL (outer membrane lipoprotein) | 5 |
| 6 | imL (inner membrane lipoprotein) | 2 |
| 7 | imS (inner membrane, cleavable signal sequence) | 2 |

As Table 1 shows, most samples fall into the first five classes (codes 0 to 4). The system's classification performance for classes 5, 6 and 7 can therefore be neglected in the experimental results.

The following metrics are used to evaluate the performance of the classification model.

Precision is the number of correctly predicted positive samples divided by the number of positive results predicted by the model:

$$\text{Precision} = \frac{\text{true positives}}{\text{true positives} + \text{false positives}} \tag{7}$$

Recall is the number of correctly predicted positive samples divided by the total number of actual positive samples:

$$\text{Recall} = \frac{\text{true positives}}{\text{true positives} + \text{false negatives}} \tag{8}$$

In any classification model, accuracy (the rate of correct classifications) is an important quality metric. However, this dataset is significantly imbalanced across classes, so accuracy is not necessarily a precise measure of the system's performance. The F1 score is used instead. The F1 score is the harmonic mean of precision and recall:

$$F_1 = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{9}$$

Results and discussions

The dataset is randomly divided into a training subset (75% of the samples) and a testing subset (25% of the samples). SOM-LVQ base learners are generated with a size of 10 × 10 neurons. Table 2 presents the classification results for the different setups.

The supervised SOM is generally a modified version of the traditional SOM. After training, each node is assigned the class label of its closest training sample. This supervised SOM model is widely used in the literature.

Table 2: Classification results of different model setups.

| Model | Setup | Precision (%) | Recall (%) | F1 (%) |
|---|---|---|---|---|
| SOM-LVQ | Single model | 73.4 | 79.6 | 75.3 |
| SOM-LVQ | Boosting (majority voting) | 85.7 | 83.9 | 83.9 |
| SOM-LVQ | Boosting (weighted voting) | 87.4 | 85.2 | 86.3 |
| Supervised SOM | Single model | 69.5 | 62.7 | 64.6 |

Experimental results show that the proposed SOM-LVQ outperforms the traditional supervised SOM commonly used in the literature on all performance measures. Additionally, the boosting algorithm significantly improves the quality of the SOM-LVQ model, for two main reasons.

First, in the boosting algorithm, multiple base learners are created sequentially based on the samples misclassified by previous models. This helps the base classifiers learn from different training subsets. In particular, they learn from samples whose input-output relationships differ from the rest, which is why those samples were misclassified. Second, each base learner is created from a small subset of the training data, which helps each learner capture different characteristics of the data. As a result, combining the outputs of all base learners pools these different perspectives and yields a better decision than a single classification model.

Within the AdaBoost algorithm, weighted voting works slightly better than majority voting because it exploits the relationship between each node and the training data during training. Specifically, a node that is selected as the BMU more often during training has a weight vector more closely related to the input data. It is therefore more representative of its class region in the training data. Assigning a classification weight to each node is an effective way to reflect that relationship and helps improve classification performance. Here, each base classifier does not have one fixed weight. Instead, it has multiple weights corresponding to its nodes. This dynamic weighting method is designed to adapt to the nature of the data, in which samples of the same class may have different input distributions.

As expected, the traditional supervised SOM has the worst classification performance. Its nodes are only trained to represent the clusters of the input data, and each node is then assigned the label of its closest training sample. As a result, the labeled nodes do not represent their respective class regions. SOM-LVQ is much better than supervised SOM because its nodes are first arranged by the SOM training process and then assigned labels before LVQ training. This helps each node better represent the region of its class. However, a single SOM-LVQ model's nodes cannot capture all the characteristics of the data.

V. CONCLUSIONS

In this research, a new framework to improve the classification capability of SOM is introduced. The SOM algorithm is good at representing the clusters of the input data, while the LVQ algorithm is good at moving labeled nodes to their representative class regions. The combination of SOM and LVQ in the proposed method is empirically shown to be effective compared to the commonly used supervised SOM. This research also proposes an adaptive boosting algorithm with two different fusion strategies. This approach appears to exploit the information in the data effectively by creating multiple base SOM-LVQ models sequentially. Weighting each learner by assigning different weight values to the different nodes inside it is a flexible way to represent how closely each learner relates to the input data. Experimental results show that the new approach significantly improves the classification performance of SOM structures. In future work, further real applications of the proposed classification framework will be investigated using different real datasets.

REFERENCES

[1] T. Kohonen, "Self-organized formation of topologically correct feature maps," Biological Cybernetics, vol. 43, no. 1, pp. 59-69, 1982.
[2] M. T. Hagan, H. B. Demuth, M. H. Beale, and O. D. Jesus, Neural Network Design, 2nd ed., ISBN-10: 0-9717321-1-6, ISBN-13: 978-0-9717321-1-7, 1996.
[3] A. Rauber and D. Merkl, "Automatic labeling of self-organizing maps: Making a treasure-map reveal its secrets," Methodologies for Knowledge Discovery and Data Mining, pp. 228-237, 1999.
[4] P. N. Suganthan, "Hierarchical overlapped SOM's for pattern classification," IEEE Transactions on Neural Networks, vol. 10, pp. 193-196, 1999.
[5] O. Kurasova, "Strategies for big data clustering," 2014 IEEE 26th International Conference on Tools with Artificial Intelligence, 2014.
[6] P. Stefanovič and O. Kurasova, "Outlier detection in self-organizing maps and their quality estimation," Neural Network World, vol. 28, no. 2, pp. 105-117, 2018.
[7] L. A. Silva and E. D. M. Hernandez, "A SOM combined with KNN for classification task," Proceedings of the International Joint Conference on Neural Networks, pp. 2368-2373, 2011.
[8] M. Mishra and H. S. Behera, "Kohonen self organizing map with modified K-means clustering for high dimensional data set," International Journal of Applied Information Systems, pp. 34-39, 2012.
[9] T. Kohonen, Self-Organizing Maps, 3rd ed., 2000, pp. X-XI.
[10] M. C. Kind and R. J. Brunner, "SOMz: photometric redshift PDFs with self-organizing maps and random atlas," 2013.
[11] E. D. Bodt, M. Cottrell, P. Letrémy, and M. Verleysen, "On the use of self-organizing maps to accelerate vector quantization," Neurocomputing, vol. 56, pp. 187-203, 2004.
[12] R. E. Schapire and Y. Freund, "A decision-theoretic generalization of on-line learning and an application to boosting," Journal of Computer and System Sciences, pp. 119-139, 1996.
[13] T. Hastie, R. Tibshirani, and J. Friedman, The Elements of Statistical Learning, 2nd ed., Stanford, California: Springer, 2008, p. 340.
[14] P. Dangeti, Statistics for Machine Learning, Birmingham: Packt Publishing, July 2017.
[15] J. Zhu, H. Zou, S. Rosset, and T. Hastie, "Multi-class AdaBoost," Statistics and Its Interface, vol. 2, pp. 349-360, 2009.
[16] "UCI Machine Learning Repository," [Online]. Available: https://archive.ics.uci.edu/ml/index.php.
[17] T. Kohonen and P. Somervuo, "How to make large self-organizing maps for nonvectorial data," Neural Networks, vol. 15, no. 8-9, pp. 945-952, 2002.

A METHOD FOR IMPROVING THE DATA CLASSIFICATION CAPABILITY OF SOM USING THE BOOSTING ALGORITHM

Abstract: The self-organizing map (SOM) is known as an effective tool for visualizing data and reducing its dimensionality. SOM is an unsupervised learning tool and is very useful for clustering problems. This paper presents a new SOM-based approach to classification. In this method, SOM is combined with the learning vector quantization (LVQ) training algorithm to form a new model, SOM-LVQ. The SOM-LVQ data classification model is further improved by applying the adaptive boosting algorithm (AdaBoost) with SOM-LVQ as the base classifiers. Two techniques are applied to combine the results of the base classifiers: majority voting and weighted voting. Experimental results on a real dataset show that the new classification method proposed in this research to improve SOM outperforms the traditional SOM model. The results also show that the practical applicability of this model is very promising.

Keywords: self-organizing map, learning vector quantization, boosting algorithm, weighted fusion.

Hoa Dinh Nguyen (Nguyễn Đình Hóa) received bachelor's and master's degrees in science from Hanoi University of Technology in 2000 and 2002, respectively. He received his PhD degree in electrical and computer engineering from Oklahoma State University in 2013. He is now a lecturer in information technology at PTIT. His research interests include dynamic systems, data mining, and machine learning.
