An efficient algorithm for quality of service assessment

Abstract: In environmental monitoring systems, services are developed both in sensors and

the server (base station) to provide interfaces where users can interact to systems to retrieve

required information. Because of network’s resource limitation, some services may not keep

their quality level stable over long period which could lead to the violation of quality of

service agreements. In order to keep quality level stable, risk factors must be realized and

monitored as soon as possible. Based on information retrieved through the monitoring

process, an algorithm is built for estimating the quality of services. In this paper, we introduce

how this algorithm is built and how it is applied to estimate the quality of internet services.

Trang 1

Trang 2

Trang 3

Trang 4

Trang 5

Trang 6

Trang 7

Trang 8

Trang 9

Trang 10

Tóm tắt nội dung tài liệu: An efficient algorithm for quality of service assessment

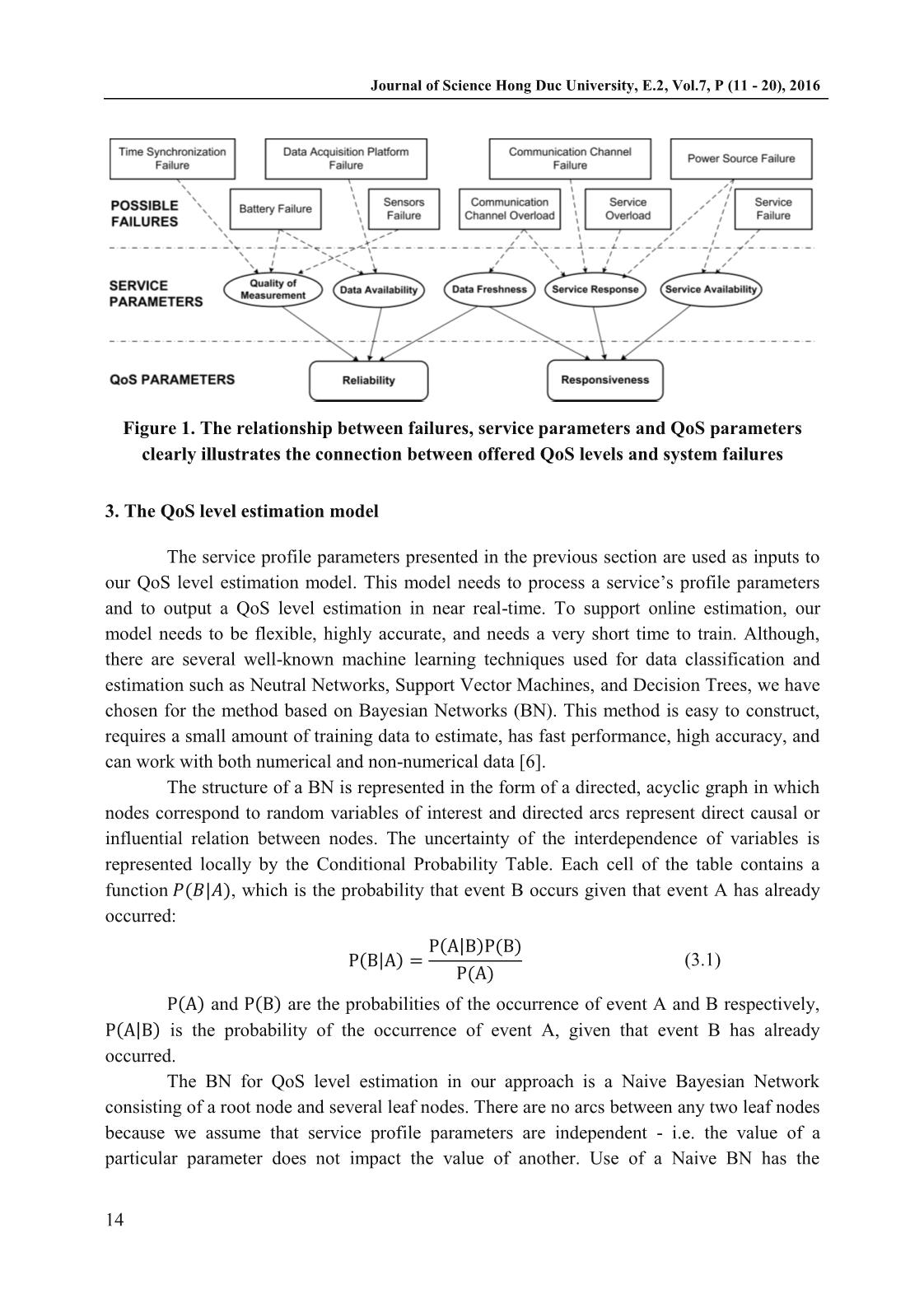

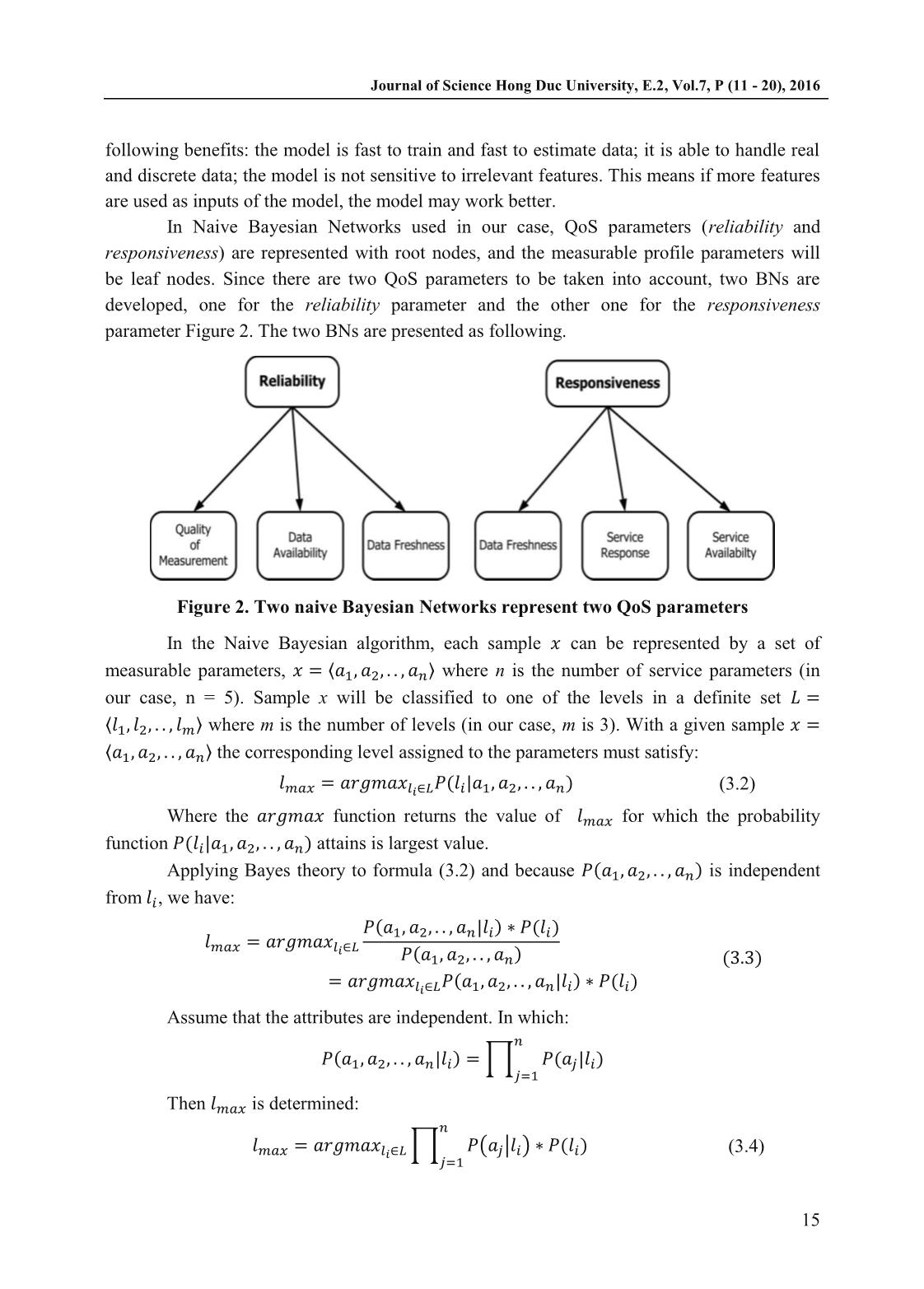

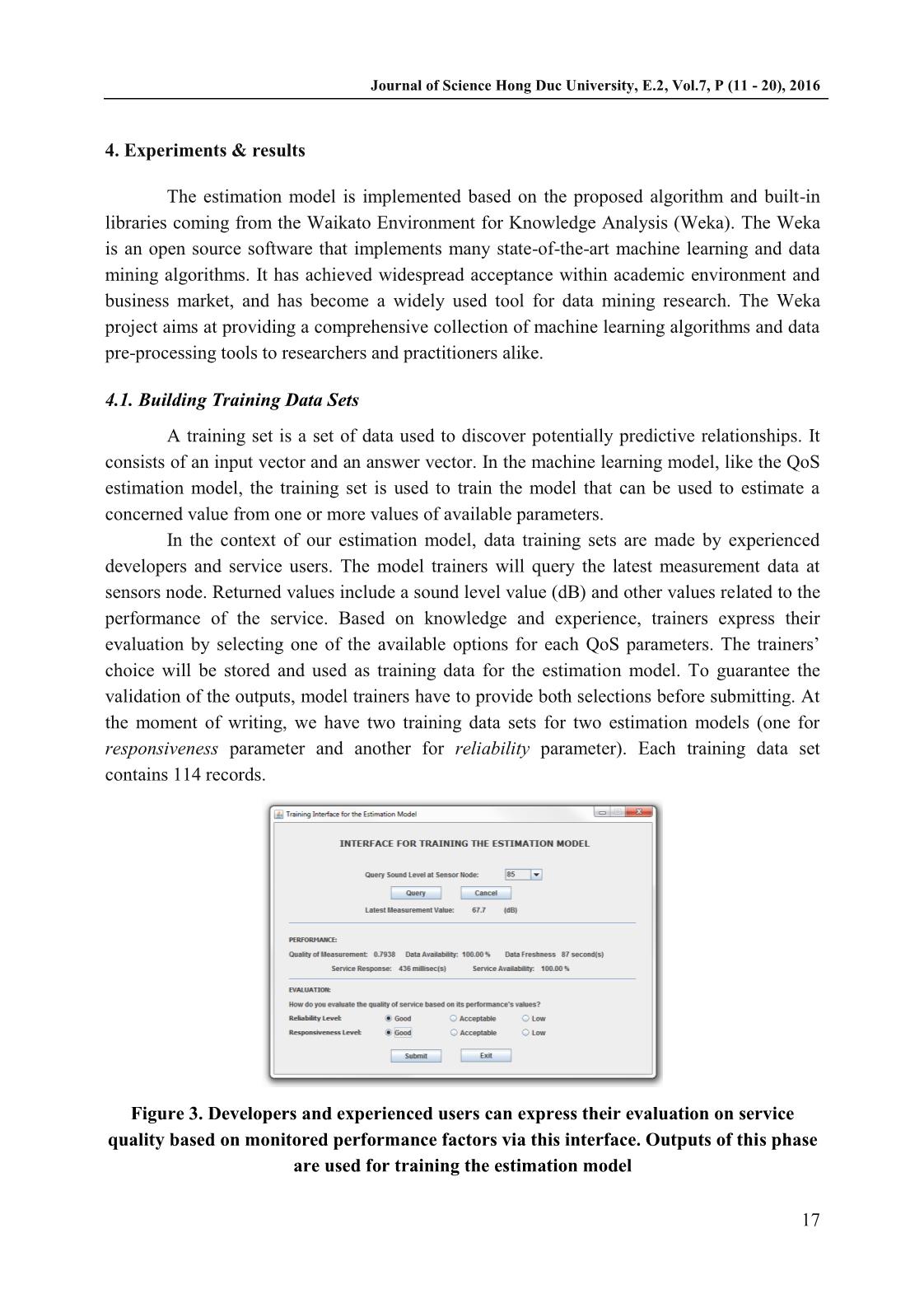

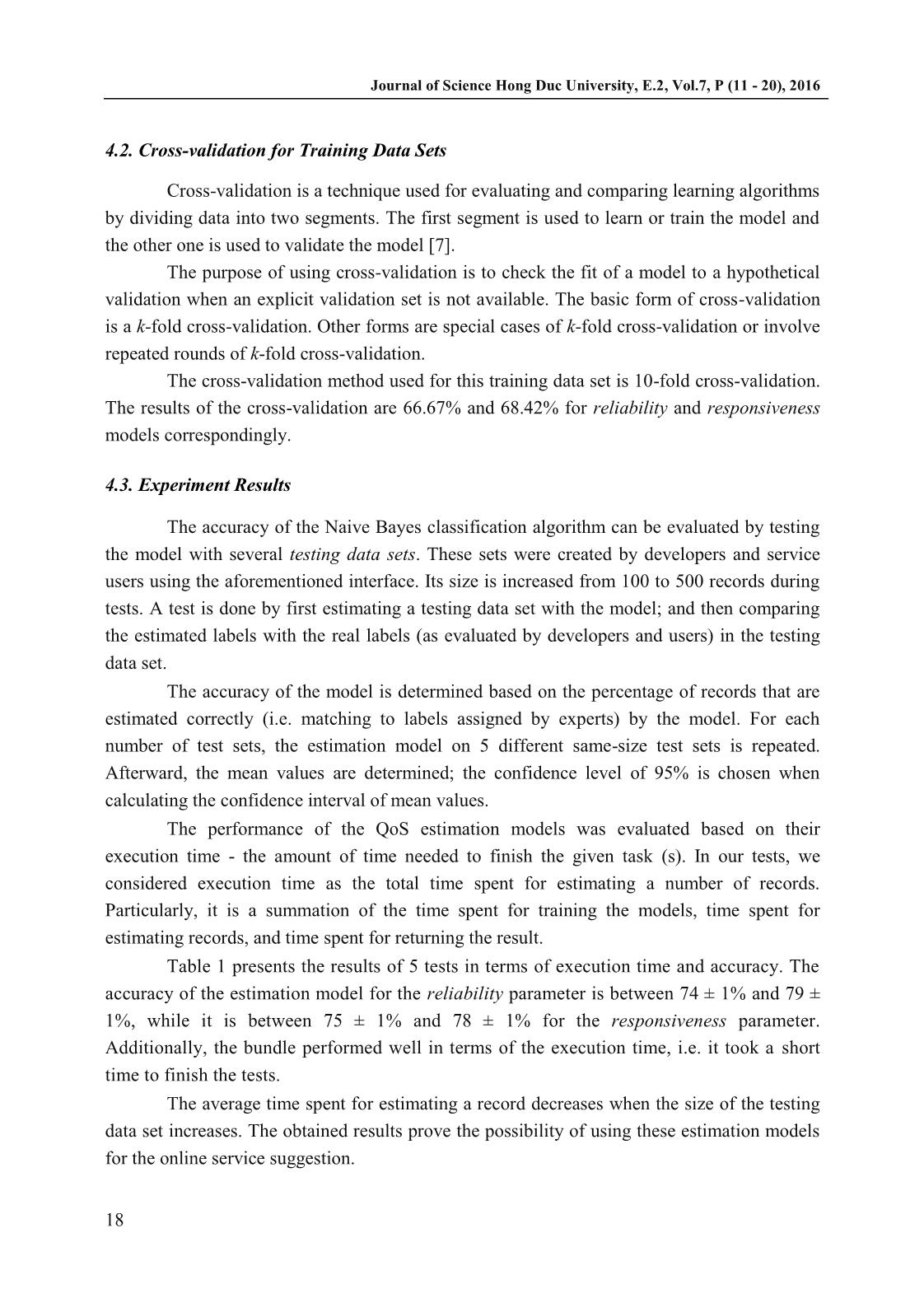

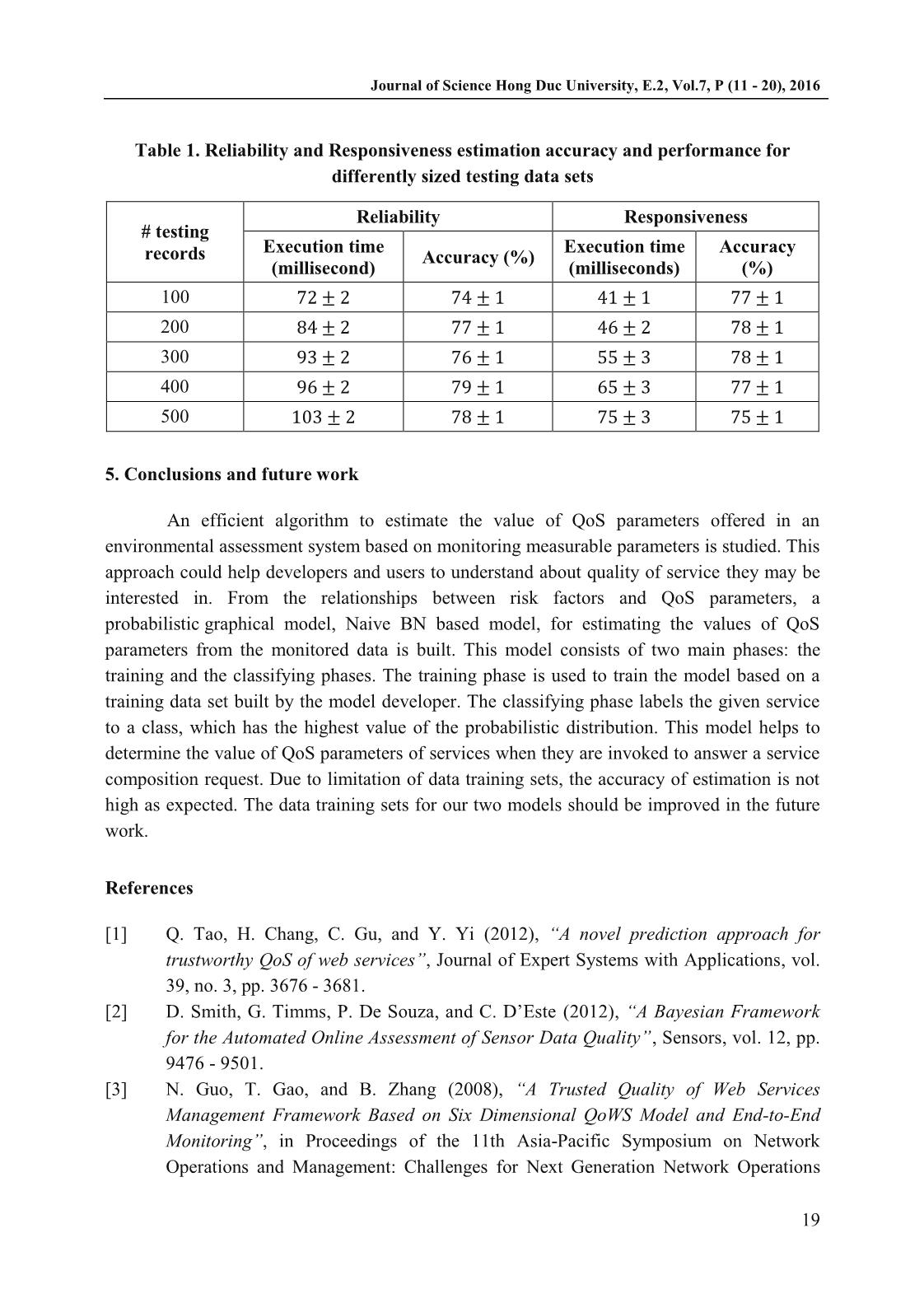

to given requests from users As depicted in Figure 1, there are dependencies between potential failures and aforementioned service parameters. Journal of Science Hong Duc University, E.2, Vol.7, P (11 - 20), 2016 14 Figure 1. The relationship between failures, service parameters and QoS parameters clearly illustrates the connection between offered QoS levels and system failures 3. The QoS level estimation model The service profile parameters presented in the previous section are used as inputs to our QoS level estimation model. This model needs to process a service’s profile parameters and to output a QoS level estimation in near real-time. To support online estimation, our model needs to be flexible, highly accurate, and needs a very short time to train. Although, there are several well-known machine learning techniques used for data classification and estimation such as Neutral Networks, Support Vector Machines, and Decision Trees, we have chosen for the method based on Bayesian Networks (BN). This method is easy to construct, requires a small amount of training data to estimate, has fast performance, high accuracy, and can work with both numerical and non-numerical data [6]. The structure of a BN is represented in the form of a directed, acyclic graph in which nodes correspond to random variables of interest and directed arcs represent direct causal or influential relation between nodes. The uncertainty of the interdependence of variables is represented locally by the Conditional Probability Table. Each cell of the table contains a function 𝑃(𝐵|𝐴), which is the probability that event B occurs given that event A has already occurred: P(B|A) = P(A|B)P(B) P(A) (3.1) P(A) and P(B) are the probabilities of the occurrence of event A and B respectively, P(A|B) is the probability of the occurrence of event A, given that event B has already occurred. The BN for QoS level estimation in our approach is a Naive Bayesian Network consisting of a root node and several leaf nodes. There are no arcs between any two leaf nodes because we assume that service profile parameters are independent - i.e. the value of a particular parameter does not impact the value of another. Use of a Naive BN has the Journal of Science Hong Duc University, E.2, Vol.7, P (11 - 20), 2016 15 following benefits: the model is fast to train and fast to estimate data; it is able to handle real and discrete data; the model is not sensitive to irrelevant features. This means if more features are used as inputs of the model, the model may work better. In Naive Bayesian Networks used in our case, QoS parameters (reliability and responsiveness) are represented with root nodes, and the measurable profile parameters will be leaf nodes. Since there are two QoS parameters to be taken into account, two BNs are developed, one for the reliability parameter and the other one for the responsiveness parameter Figure 2. The two BNs are presented as following. Figure 2. Two naive Bayesian Networks represent two QoS parameters In the Naive Bayesian algorithm, each sample 𝑥 can be represented by a set of measurable parameters, 𝑥 = 〈𝑎1, 𝑎2, . . , 𝑎𝑛〉 where n is the number of service parameters (in our case, n = 5). Sample x will be classified to one of the levels in a definite set 𝐿 = 〈𝑙1, 𝑙2, . . , 𝑙𝑚〉 where m is the number of levels (in our case, m is 3). With a given sample 𝑥 = 〈𝑎1, 𝑎2, . . , 𝑎𝑛〉 the corresponding level assigned to the parameters must satisfy: 𝑙𝑚𝑎𝑥 = 𝑎𝑟𝑔𝑚𝑎𝑥𝑙𝑖∈𝐿𝑃(𝑙𝑖|𝑎1, 𝑎2, . . , 𝑎𝑛) (3.2) Where the 𝑎𝑟𝑔𝑚𝑎𝑥 function returns the value of 𝑙𝑚𝑎𝑥 for which the probability function 𝑃(𝑙𝑖|𝑎1, 𝑎2, . . , 𝑎𝑛) attains is largest value. Applying Bayes theory to formula (3.2) and because 𝑃(𝑎1, 𝑎2, . . , 𝑎𝑛) is independent from 𝑙𝑖, we have: 𝑙𝑚𝑎𝑥 = 𝑎𝑟𝑔𝑚𝑎𝑥𝑙𝑖∈𝐿 𝑃(𝑎1, 𝑎2, . . , 𝑎𝑛|𝑙𝑖) ∗ 𝑃(𝑙𝑖) 𝑃(𝑎1, 𝑎2, . . , 𝑎𝑛) = 𝑎𝑟𝑔𝑚𝑎𝑥𝑙𝑖∈𝐿𝑃(𝑎1, 𝑎2, . . , 𝑎𝑛|𝑙𝑖) ∗ 𝑃(𝑙𝑖) (3.3) Assume that the attributes are independent. In which: 𝑃(𝑎1, 𝑎2, . . , 𝑎𝑛|𝑙𝑖) = ∏ 𝑃(𝑎𝑗|𝑙𝑖) 𝑛 𝑗=1 Then 𝑙𝑚𝑎𝑥 is determined: 𝑙𝑚𝑎𝑥 = 𝑎𝑟𝑔𝑚𝑎𝑥𝑙𝑖∈𝐿 ∏ 𝑃(𝑎𝑗|𝑙𝑖) ∗ 𝑃(𝑙𝑖) 𝑛 𝑗=1 (3.4) Journal of Science Hong Duc University, E.2, Vol.7, P (11 - 20), 2016 16 Based on Equation (3.4), an algorithm (see Algorithm 1) is developed to estimate the QoS level of the offered services. The algorithm consists of two phases: training phase and classification phase. Training phase: Compute the probability of the value of service parameters and the value of labels in the training data set. Classification phase: Assign the sample to the label name which gets the highest probability. The algorithm is applied to two QoS parameters to estimate their values before the data service is consumed. Because the algorithm uses a training data set, which is created based on historical observations; the value of QoS level based on the probability theorem could be estimated. After being trained, the estimation models can be tested with other data sets to check its accuracy. These data sets were also created by experienced experts. The number of records in the training data set is finite, so the algorithm is finished after a limited time. Algorithm 1: QoS level estimation Algorithm 𝑰𝒏𝒑𝒖𝒕: 𝑊𝑒𝑏𝑠𝑒𝑟𝑣𝑖𝑐𝑒𝑥 = 〈𝑎1, 𝑎2, . . , 𝑎𝑛〉 ≫ 𝑎𝑗: 𝑝𝑎𝑟𝑎𝑚𝑒𝑡𝑒𝑟 𝑣𝑎𝑙𝑢𝑒𝑠 𝑆𝑒𝑡 𝑜𝑓 𝑙𝑒𝑣𝑒𝑙𝑠 𝐿 = 〈𝑙1, 𝑙2, . . , 𝑙𝑚〉 ≫ 𝑙𝑖 : 𝑎 𝑛𝑎𝑚𝑒 𝑜𝑓 𝑎 𝑙𝑒𝑣𝑒𝑙 𝑩𝒆𝒈𝒊𝒏 𝑓𝑚𝑎𝑥 ← 0 ≫ 𝑈𝑠𝑒𝑑 𝑡𝑜 𝑠𝑡𝑜𝑟𝑒 𝑡ℎ𝑒 ℎ𝑖𝑔ℎ𝑒𝑠𝑡 𝑝𝑟𝑜𝑏𝑎𝑏𝑖𝑙𝑖𝑡𝑦 𝑣𝑎𝑙𝑢𝑒 𝑙𝑚𝑎𝑥 ← 𝑛𝑢𝑙𝑙 ≫ 𝑈𝑠𝑒𝑑 𝑡𝑜 𝑠𝑡𝑜𝑟𝑒 𝑡ℎ𝑒 𝑙𝑒𝑣𝑒𝑙 𝑤𝑖𝑡ℎ 𝑡ℎ𝑒 ℎ𝑖𝑔ℎ𝑒𝑠𝑡 𝑣𝑎𝑙𝑢𝑒 𝑭𝒐𝒓𝒆𝒂𝒄𝒉 𝑙𝑖 ∈ 𝐿 𝒅𝒐 𝑩𝒆𝒈𝒊𝒏 𝑃(𝑙𝑖) ← 𝑃𝑟𝑜𝑏𝑎𝑏𝑖𝑙𝑖𝑡𝑦 𝑜𝑓 𝑙𝑖 𝑖𝑛 𝑡ℎ𝑒 𝑡𝑟𝑎𝑖𝑛𝑖𝑛𝑔 𝑑𝑎𝑡𝑎 𝑠𝑒𝑡 𝑓(𝑙𝑖) ← 𝑃(𝑙𝑖) ≫ 𝐼𝑛𝑖𝑡𝑖𝑎𝑙𝑖𝑧𝑒 𝑡ℎ𝑒 𝑓(𝑙𝑖) 𝑭𝒐𝒓𝒆𝒂𝒄𝒉 𝑎𝑗 ∈ 𝑥𝒅𝒐 𝑩𝒆𝒈𝒊𝒏 𝑃(𝑎𝑗|𝑙𝑖) ← 𝑃𝑟𝑜𝑏𝑎𝑏𝑖𝑙𝑖𝑡𝑦 𝑜𝑓 𝑎𝑗 𝑔𝑖𝑣𝑒𝑛 𝑙𝑖 𝑖𝑛 𝑡ℎ𝑒 𝑡𝑟𝑎𝑖𝑛𝑖𝑛𝑔 𝑑𝑎𝑡𝑎 𝑠𝑒𝑡 𝑓(𝑙𝑖) ← 𝑓(𝑙𝑖) ∗ 𝑃(𝑎𝑗|𝑙𝑖) ≫ 𝐶𝑎𝑙𝑐𝑢𝑙𝑎𝑡𝑒 𝑡ℎ𝑒 𝑎𝑐𝑐𝑢𝑙𝑢𝑚𝑎𝑡𝑖𝑣𝑒 𝑣𝑎𝑙𝑢𝑒 𝑬𝒏𝒅 𝑰𝒇 𝑓(𝑙𝑖) ≥ 𝑓𝑚𝑎𝑥 𝒕𝒉𝒆𝒏 ≫ 𝐶ℎ𝑒𝑐𝑘 𝑤ℎ𝑒𝑡ℎ𝑒𝑟 𝑡ℎ𝑒 𝑛𝑒𝑤 𝑣𝑎𝑙𝑢𝑒 𝑖𝑠 ℎ𝑖𝑔ℎ𝑒𝑟 𝑡ℎ𝑎𝑛 𝑓𝑚𝑎𝑥 𝑩𝒆𝒈𝒊𝒏 𝑓𝑚𝑎𝑥 ← 𝑓(𝑙𝑖) ≫ 𝐴𝑠𝑠𝑖𝑔𝑛 𝑓𝑚𝑎𝑥 𝑤𝑖𝑡ℎ 𝑎 𝑛𝑒𝑤 𝑣𝑎𝑙𝑢𝑒 𝑙𝑚𝑎𝑥 ← 𝑙𝑖 ≫ 𝐴𝑠𝑠𝑖𝑔𝑛 𝑙𝑚𝑎𝑥 𝑤𝑖𝑡ℎ 𝑎 𝑛𝑒𝑤 𝑣𝑎𝑙𝑢𝑒 𝑬𝒏𝒅 𝑬𝒏𝒅 𝑬𝒏𝒅 𝑶𝒖𝒕𝒑𝒖𝒕: 𝑊𝑒𝑏 𝑠𝑒𝑟𝑣𝑖𝑐𝑒 𝑥 = 〈𝑎1, 𝑎2, . . , 𝑎𝑛〉 𝑖𝑠 𝑎𝑠𝑠𝑖𝑔𝑛𝑒𝑑 𝑡𝑜 𝑡ℎ𝑒 𝑐𝑙𝑎𝑠𝑠 𝑙𝑚𝑎𝑥 Journal of Science Hong Duc University, E.2, Vol.7, P (11 - 20), 2016 17 4. Experiments & results The estimation model is implemented based on the proposed algorithm and built-in libraries coming from the Waikato Environment for Knowledge Analysis (Weka). The Weka is an open source software that implements many state-of-the-art machine learning and data mining algorithms. It has achieved widespread acceptance within academic environment and business market, and has become a widely used tool for data mining research. The Weka project aims at providing a comprehensive collection of machine learning algorithms and data pre-processing tools to researchers and practitioners alike. 4.1. Building Training Data Sets A training set is a set of data used to discover potentially predictive relationships. It consists of an input vector and an answer vector. In the machine learning model, like the QoS estimation model, the training set is used to train the model that can be used to estimate a concerned value from one or more values of available parameters. In the context of our estimation model, data training sets are made by experienced developers and service users. The model trainers will query the latest measurement data at sensors node. Returned values include a sound level value (dB) and other values related to the performance of the service. Based on knowledge and experience, trainers express their evaluation by selecting one of the available options for each QoS parameters. The trainers’ choice will be stored and used as training data for the estimation model. To guarantee the validation of the outputs, model trainers have to provide both selections before submitting. At the moment of writing, we have two training data sets for two estimation models (one for responsiveness parameter and another for reliability parameter). Each training data set contains 114 records. Figure 3. Developers and experienced users can express their evaluation on service quality based on monitored performance factors via this interface. Outputs of this phase are used for training the estimation model Journal of Science Hong Duc University, E.2, Vol.7, P (11 - 20), 2016 18 4.2. Cross-validation for Training Data Sets Cross-validation is a technique used for evaluating and comparing learning algorithms by dividing data into two segments. The first segment is used to learn or train the model and the other one is used to validate the model [7]. The purpose of using cross-validation is to check the fit of a model to a hypothetical validation when an explicit validation set is not available. The basic form of cross-validation is a k-fold cross-validation. Other forms are special cases of k-fold cross-validation or involve repeated rounds of k-fold cross-validation. The cross-validation method used for this training data set is 10-fold cross-validation. The results of the cross-validation are 66.67% and 68.42% for reliability and responsiveness models correspondingly. 4.3. Experiment Results The accuracy of the Naive Bayes classification algorithm can be evaluated by testing the model with several testing data sets. These sets were created by developers and service users using the aforementioned interface. Its size is increased from 100 to 500 records during tests. A test is done by first estimating a testing data set with the model; and then comparing the estimated labels with the real labels (as evaluated by developers and users) in the testing data set. The accuracy of the model is determined based on the percentage of records that are estimated correctly (i.e. matching to labels assigned by experts) by the model. For each number of test sets, the estimation model on 5 different same-size test sets is repeated. Afterward, the mean values are determined; the confidence level of 95% is chosen when calculating the confidence interval of mean values. The performance of the QoS estimation models was evaluated based on their execution time - the amount of time needed to finish the given task (s). In our tests, we considered execution time as the total time spent for estimating a number of records. Particularly, it is a summation of the time spent for training the models, time spent for estimating records, and time spent for returning the result. Table 1 presents the results of 5 tests in terms of execution time and accuracy. The accuracy of the estimation model for the reliability parameter is between 74 ± 1% and 79 ± 1%, while it is between 75 ± 1% and 78 ± 1% for the responsiveness parameter. Additionally, the bundle performed well in terms of the execution time, i.e. it took a short time to finish the tests. The average time spent for estimating a record decreases when the size of the testing data set increases. The obtained results prove the possibility of using these estimation models for the online service suggestion. Journal of Science Hong Duc University, E.2, Vol.7, P (11 - 20), 2016 19 Table 1. Reliability and Responsiveness estimation accuracy and performance for differently sized testing data sets # testing records Reliability Responsiveness Execution time (millisecond) Accuracy (%) Execution time (milliseconds) Accuracy (%) 100 72 ± 2 74 ± 1 41 ± 1 77 ± 1 200 84 ± 2 77 ± 1 46 ± 2 78 ± 1 300 93 ± 2 76 ± 1 55 ± 3 78 ± 1 400 96 ± 2 79 ± 1 65 ± 3 77 ± 1 500 103 ± 2 78 ± 1 75 ± 3 75 ± 1 5. Conclusions and future work An efficient algorithm to estimate the value of QoS parameters offered in an environmental assessment system based on monitoring measurable parameters is studied. This approach could help developers and users to understand about quality of service they may be interested in. From the relationships between risk factors and QoS parameters, a probabilistic graphical model, Naive BN based model, for estimating the values of QoS parameters from the monitored data is built. This model consists of two main phases: the training and the classifying phases. The training phase is used to train the model based on a training data set built by the model developer. The classifying phase labels the given service to a class, which has the highest value of the probabilistic distribution. This model helps to determine the value of QoS parameters of services when they are invoked to answer a service composition request. Due to limitation of data training sets, the accuracy of estimation is not high as expected. The data training sets for our two models should be improved in the future work. References [1] Q. Tao, H. Chang, C. Gu, and Y. Yi (2012), “A novel prediction approach for trustworthy QoS of web services”, Journal of Expert Systems with Applications, vol. 39, no. 3, pp. 3676 - 3681. [2] D. Smith, G. Timms, P. De Souza, and C. D’Este (2012), “A Bayesian Framework for the Automated Online Assessment of Sensor Data Quality”, Sensors, vol. 12, pp. 9476 - 9501. [3] N. Guo, T. Gao, and B. Zhang (2008), “A Trusted Quality of Web Services Management Framework Based on Six Dimensional QoWS Model and End-to-End Monitoring”, in Proceedings of the 11th Asia-Pacific Symposium on Network Operations and Management: Challenges for Next Generation Network Operations Journal of Science Hong Duc University, E.2, Vol.7, P (11 - 20), 2016 20 and Service Management, pp. 437 - 440. [4] A. Rahman, D. Smith, and G. Timms (2014), “A Novel Machine Learning Approach towards Quality Assessment of Sensor Data”, IEEE Sensors Journal, vol. 14, no. 4, pp. 1035 - 1047. [5] L. Zeng, H. Lei, and H. Chang (2007), “Monitoring the QoS for Web Services”, in Service-Oriented Computing – ICSOC 2007, vol. 4749, Springer Berlin / Heidelberg, pp. 132 - 144. [6] P. Bhargavi and S. Jyothi (2009), “Applying Naive Bayes data mining technique for classification of agricultural land soils”, International Journal of Computer Science and Network Security, vol. 9, no. 8, pp. 117 - 122. [7] M. W. Browne (2000), “Cross-Validation Methods”, Journal of Mathematical Psychology, vol. 44, no. 1, pp. 108 - 132, Mar.

File đính kèm:

an_efficient_algorithm_for_quality_of_service_assessment.pdf

an_efficient_algorithm_for_quality_of_service_assessment.pdf