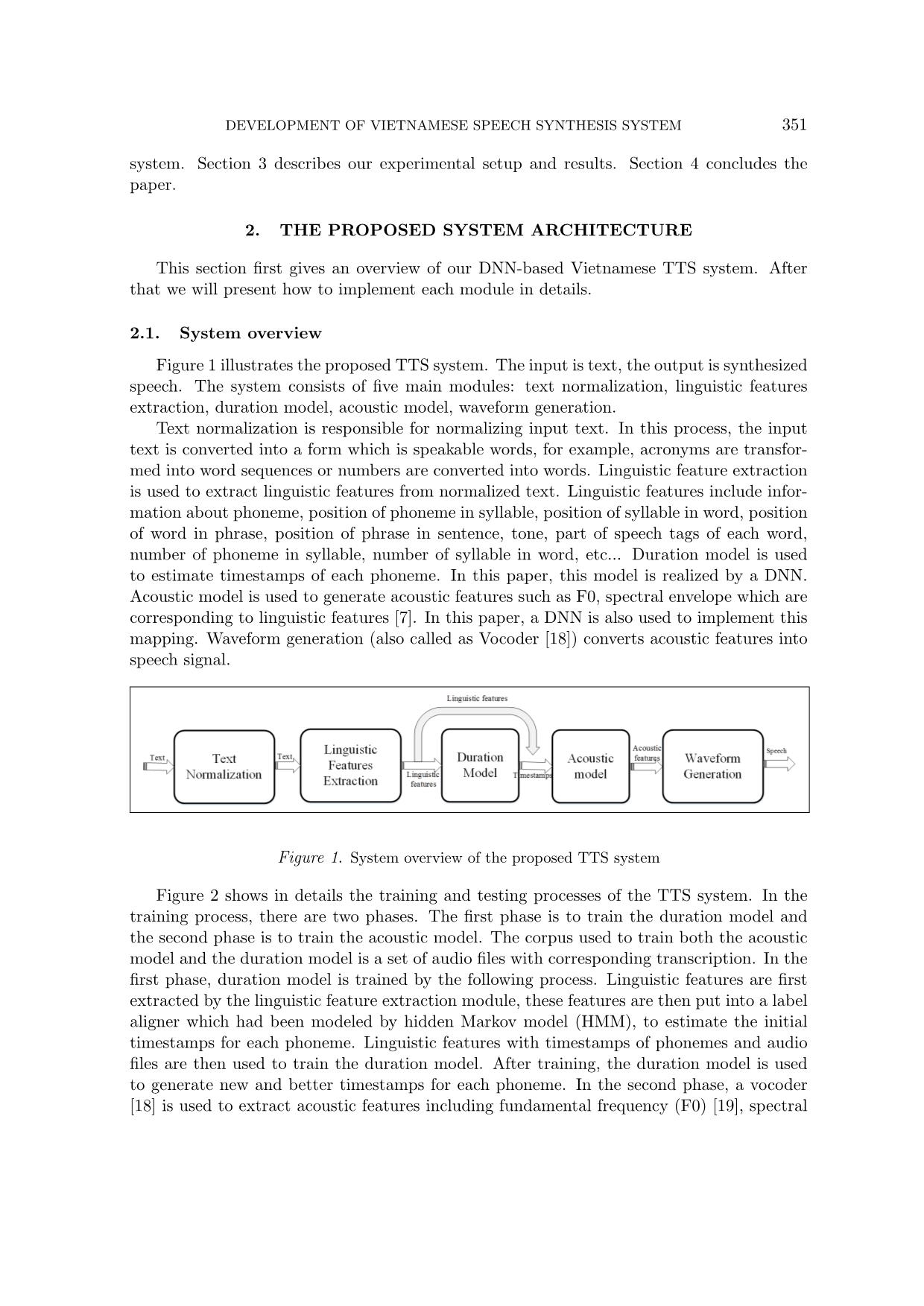

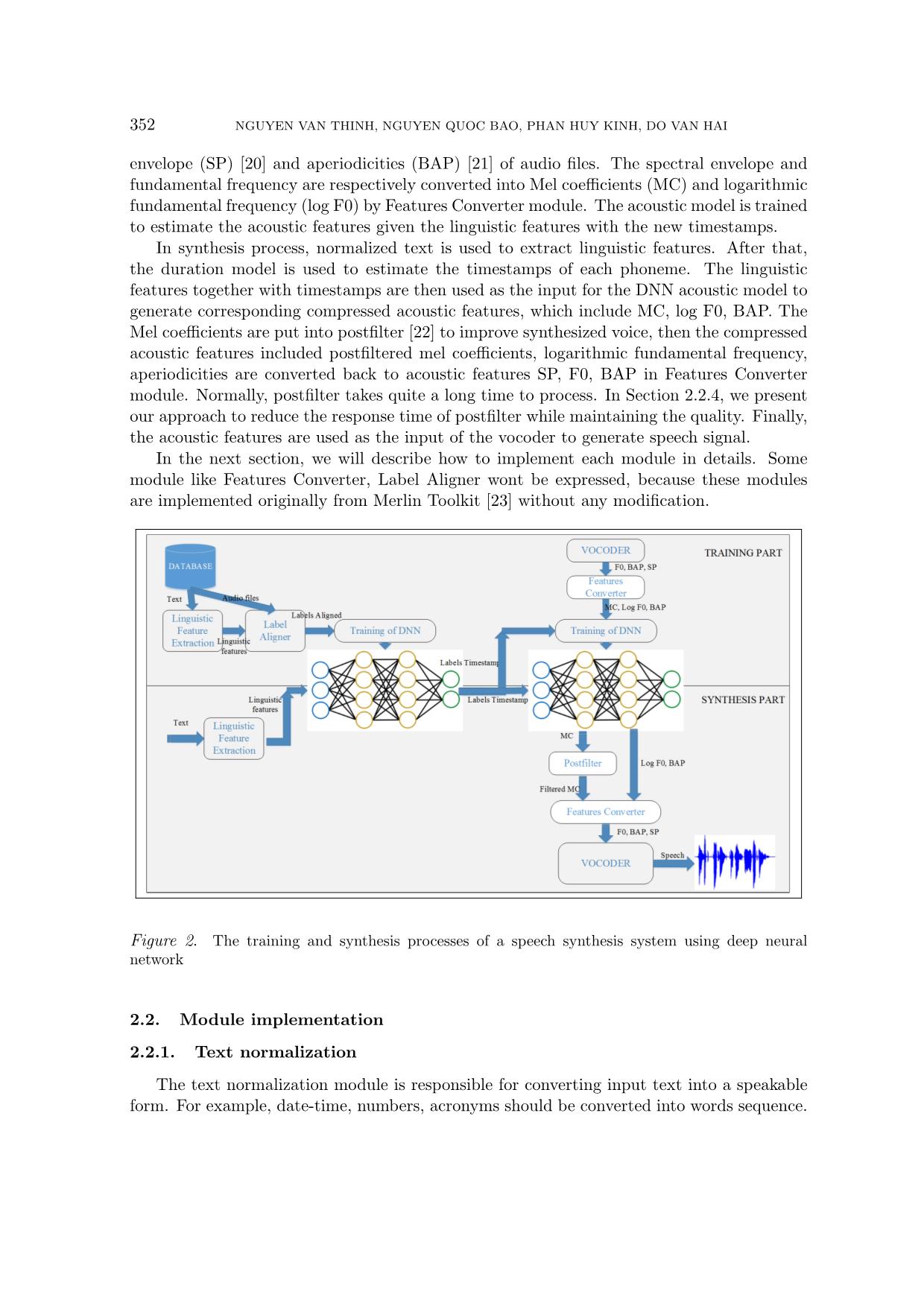

Development of Vietnamese speech synthesis system using deep neural networks

In this paper, we present our first Vietnamese speech synthesis system based on deep neural networks. To improve the quality of the training data collected from the Internet, we propose a data cleaning method. The experimental results indicate that deeper architectures achieve better TTS performance than shallow architectures such as hidden Markov models. We also present the effect of using different amounts of data to train the TTS systems. In the VLSP TTS challenge 2018, our proposed DNN-based speech synthesis system won first place in all three subjects: naturalness, intelligibility, and MOS.


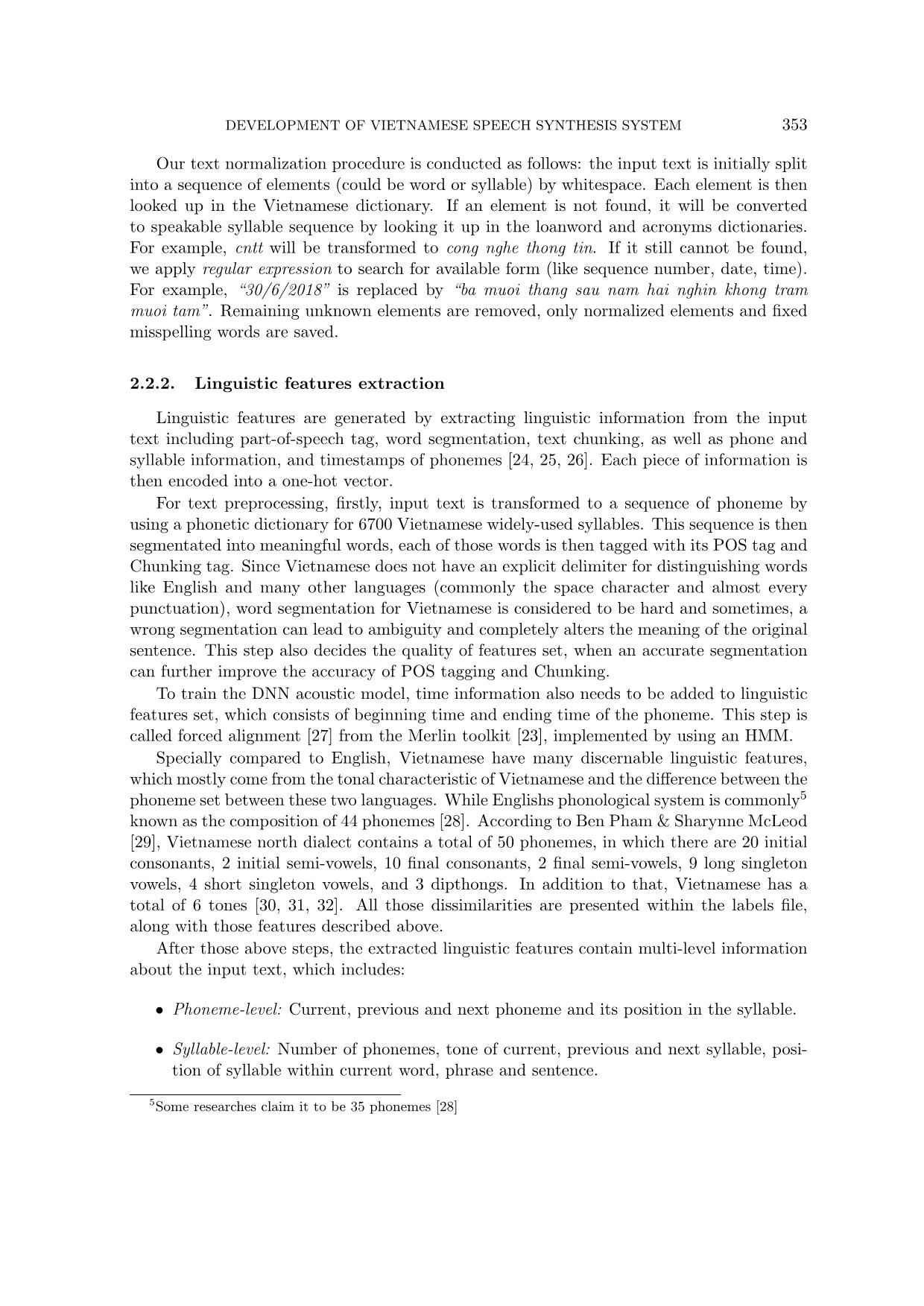

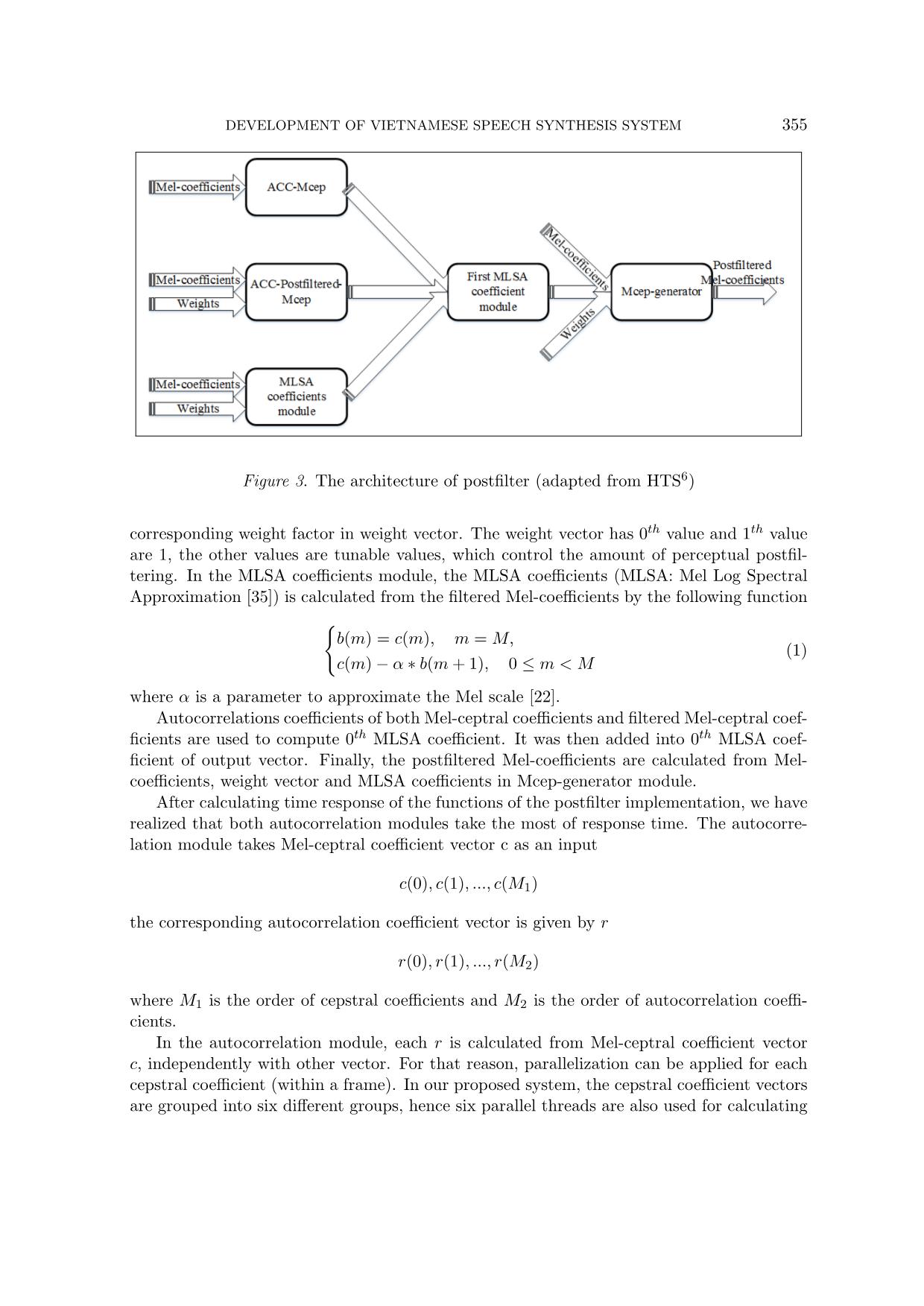

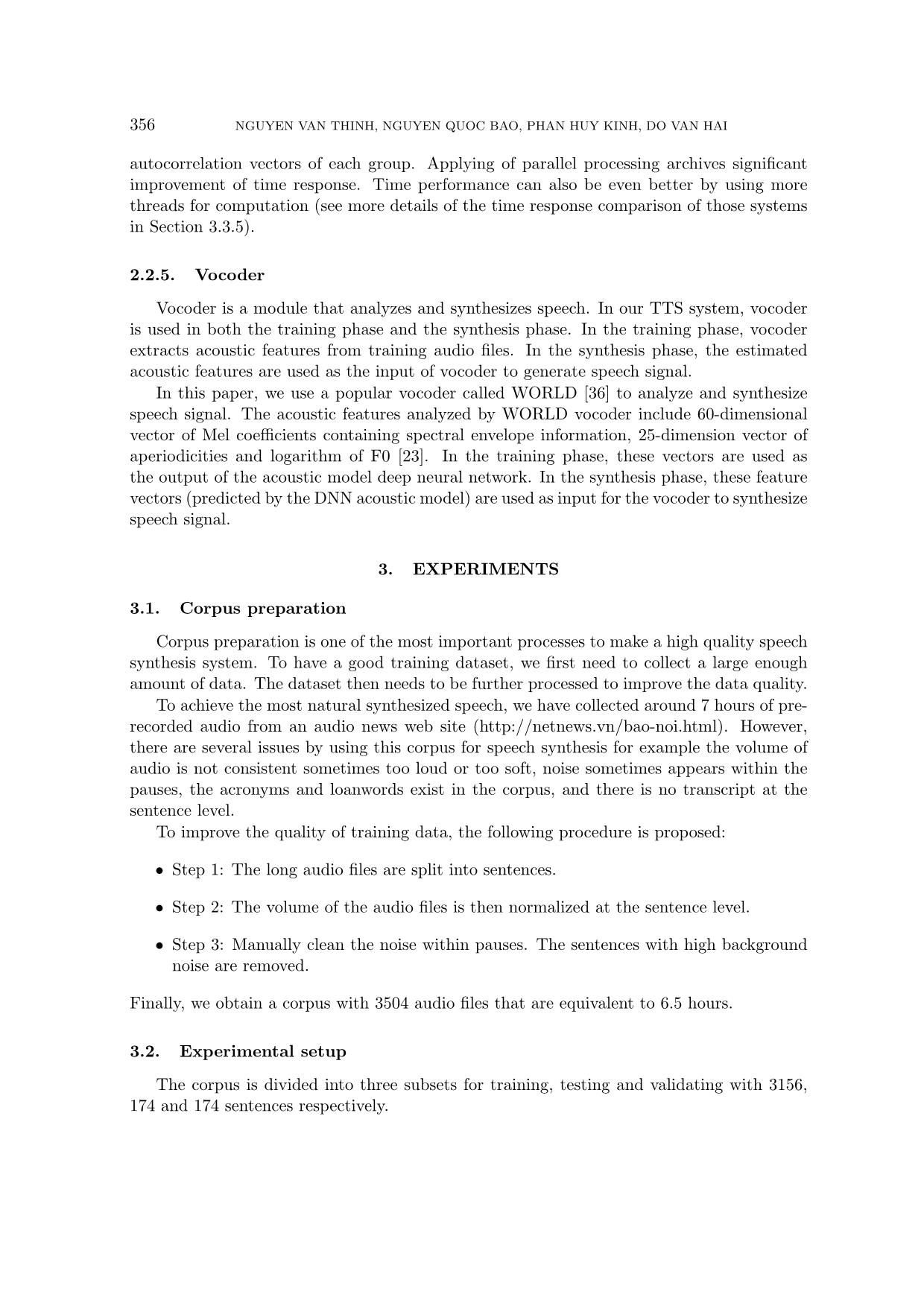

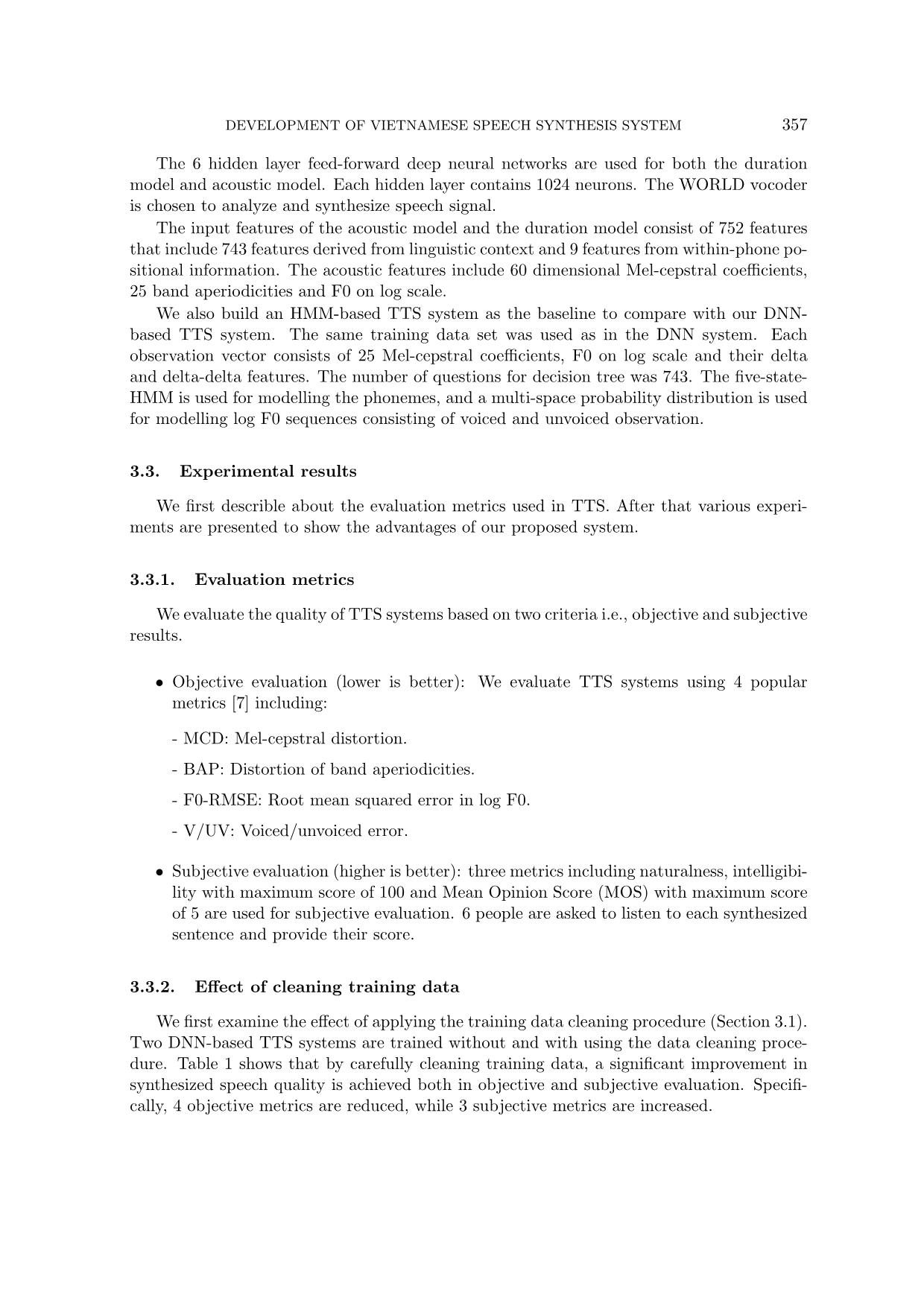

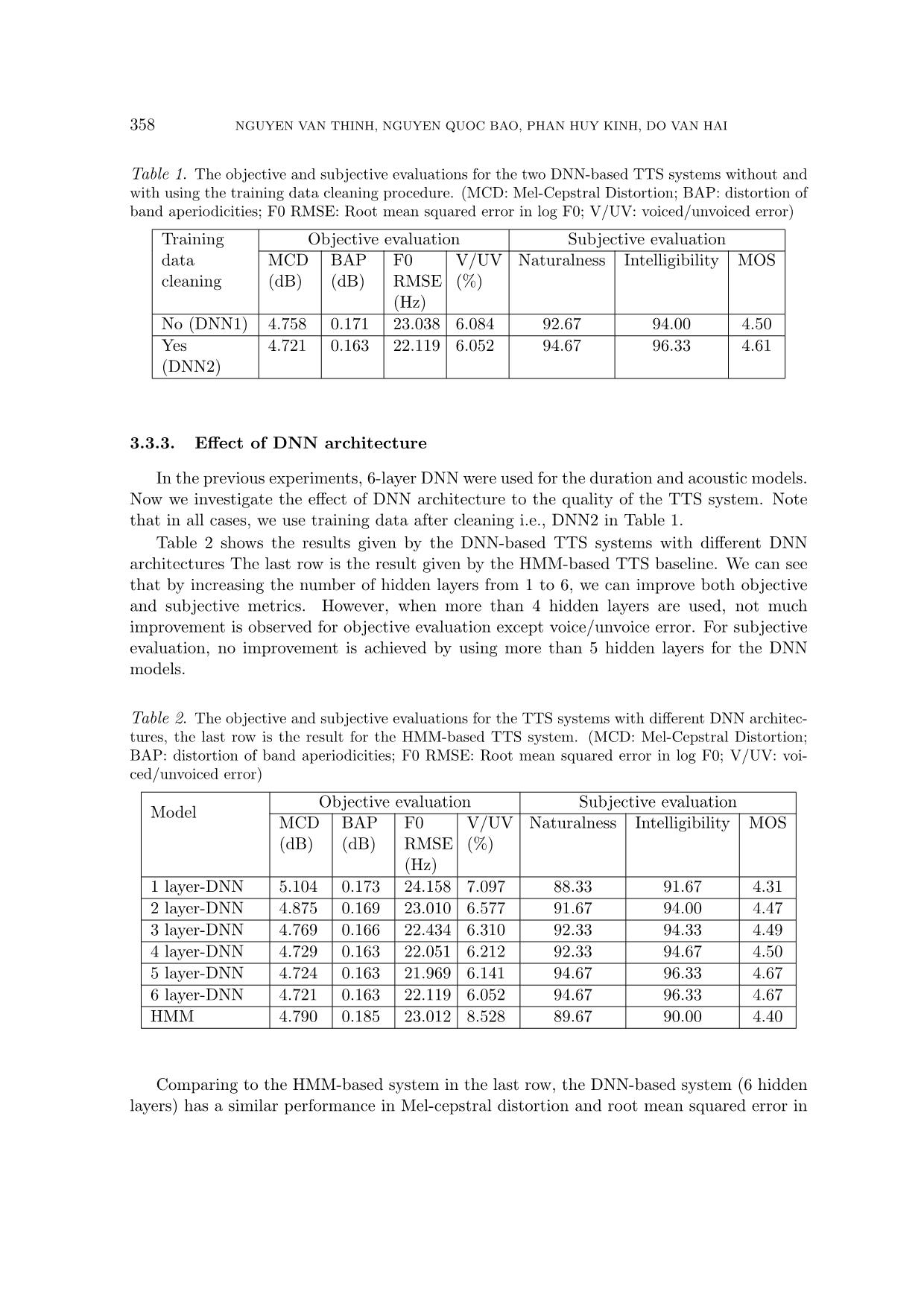

[...] Section 3.1). Two DNN-based TTS systems are trained, one without and one with the data cleaning procedure. Table 1 shows that carefully cleaning the training data yields a significant improvement in synthesized speech quality in both objective and subjective evaluation. Specifically, all 4 objective metrics are reduced, while all 3 subjective metrics are increased.

358 NGUYEN VAN THINH, NGUYEN QUOC BAO, PHAN HUY KINH, DO VAN HAI

Table 1. The objective and subjective evaluations for the two DNN-based TTS systems without and with using the training data cleaning procedure. (MCD: Mel-Cepstral Distortion; BAP: distortion of band aperiodicities; F0 RMSE: Root mean squared error in log F0; V/UV: voiced/unvoiced error.) The first four metric columns are the objective evaluation; the last three are the subjective evaluation.

| Training data cleaning | MCD (dB) | BAP (dB) | F0 RMSE (Hz) | V/UV (%) | Naturalness | Intelligibility | MOS |
|---|---|---|---|---|---|---|---|
| No (DNN1) | 4.758 | 0.171 | 23.038 | 6.084 | 92.67 | 94.00 | 4.50 |
| Yes (DNN2) | 4.721 | 0.163 | 22.119 | 6.052 | 94.67 | 96.33 | 4.61 |

3.3.3. Effect of DNN architecture

In the previous experiments, 6-layer DNNs were used for the duration and acoustic models. Now we investigate the effect of the DNN architecture on the quality of the TTS system. Note that in all cases, we use the training data after cleaning, i.e., DNN2 in Table 1.

Table 2 shows the results given by the DNN-based TTS systems with different DNN architectures. The last row is the result given by the HMM-based TTS baseline. We can see that increasing the number of hidden layers from 1 to 6 improves both objective and subjective metrics. However, when more than 4 hidden layers are used, not much improvement is observed in the objective evaluation, except for the voiced/unvoiced error. For the subjective evaluation, no improvement is achieved by using more than 5 hidden layers for the DNN models.

Table 2. The objective and subjective evaluations for the TTS systems with different DNN architectures; the last row is the result for the HMM-based TTS system. (MCD: Mel-Cepstral Distortion; BAP: distortion of band aperiodicities; F0 RMSE: Root mean squared error in log F0; V/UV: voiced/unvoiced error.) The first four metric columns are the objective evaluation; the last three are the subjective evaluation.

| Model | MCD (dB) | BAP (dB) | F0 RMSE (Hz) | V/UV (%) | Naturalness | Intelligibility | MOS |
|---|---|---|---|---|---|---|---|
| 1-layer DNN | 5.104 | 0.173 | 24.158 | 7.097 | 88.33 | 91.67 | 4.31 |
| 2-layer DNN | 4.875 | 0.169 | 23.010 | 6.577 | 91.67 | 94.00 | 4.47 |
| 3-layer DNN | 4.769 | 0.166 | 22.434 | 6.310 | 92.33 | 94.33 | 4.49 |
| 4-layer DNN | 4.729 | 0.163 | 22.051 | 6.212 | 92.33 | 94.67 | 4.50 |
| 5-layer DNN | 4.724 | 0.163 | 21.969 | 6.141 | 94.67 | 96.33 | 4.67 |
| 6-layer DNN | 4.721 | 0.163 | 22.119 | 6.052 | 94.67 | 96.33 | 4.67 |
| HMM | 4.790 | 0.185 | 23.012 | 8.528 | 89.67 | 90.00 | 4.40 |

Compared to the HMM-based system in the last row, the DNN-based system (6 hidden layers) has similar performance in Mel-cepstral distortion (4.721 vs. 4.790 dB) and root mean squared error in log F0 (22.119 vs. 23.012 Hz). However, the DNN system is significantly better than the HMM system in distortion of band aperiodicities (0.163 vs. 0.185 dB) and voiced/unvoiced error (6.052% vs. 8.528%). In the subjective evaluation, the DNN system consistently outperforms the HMM system in all three metrics: naturalness, intelligibility and MOS. This shows that deeper architectures achieve better TTS performance than shallow architectures such as an HMM or a neural network with 1 hidden layer.

3.3.4. Effect of training data size

Figure 4. Subjective evaluation for both the DNN-based and HMM-based TTS systems with different amounts of training data

Now, we investigate the effect of training data size on TTS performance. We randomly sample the full training set (3156 sentences) into smaller subsets of 1600, 800, and 400 sentences. Figure 4 shows the subjective evaluation given by the DNN-based system (with 6 hidden layers) and the HMM-based system with different amounts of training data. Performance degrades for both the DNN and HMM systems when less training data is used.
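
The random subsampling described above can be sketched as follows. This is a minimal illustration, not the authors' procedure: the utterance IDs, the function name `sample_subsets`, and the fixed seed are all hypothetical, and the text does not say whether the smaller subsets were nested inside the larger ones, so each subset is drawn independently here.

```python
import random


def sample_subsets(utterance_ids, sizes, seed=0):
    """Draw one random subset per requested size, without replacement.

    Assumption: subsets are sampled independently of each other; the
    source does not state whether they were nested.
    """
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    return {size: rng.sample(utterance_ids, size) for size in sizes}


# Hypothetical utterance IDs standing in for the 3156 training sentences.
all_ids = [f"utt_{i:04d}" for i in range(3156)]
subsets = sample_subsets(all_ids, sizes=(1600, 800, 400))

for size, ids in subsets.items():
    # Each subset has the requested size and contains no duplicates.
    print(size, len(ids), len(set(ids)))
```

Fixing the seed keeps the comparison between the DNN and HMM systems fair, since both can then be trained on exactly the same subset at each size.
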
The DNN system achieved significantly better performance than the HMM system in all aspects: naturalness, intelligibility and MOS.

3.3.5. Effect of applying postfilter

In this section, we discuss the effect of applying a postfilter on synthesized quality. Two DNN-based systems with 6 hidden layers are compared: the first system is configured with a postfilter and the second is a normal system without a postfilter. The subjective evaluation is shown in Table 3. The DNN-based system with a postfilter achieves better results in naturalness, MOS and intelligibility.

Table 3. Subjective evaluation for both the DNN-based TTS with applying postfilter and DNN-based TTS without applying postfilter

| Apply postfilter | MOS | Naturalness | Intelligibility |
|---|---|---|---|
| No | 4.39 | 83.73 | 92.05 |
| Yes | 4.67 | 94.67 | 96.33 |

3.3.6. Effect of applying parallel processing to postfilter

The results of the previous section show that applying a postfilter to the DNN-based speech synthesis system notably improves synthesized quality. In this section, we compare the response time of three DNN-based text-to-speech systems with 6 hidden layers: the Original Postfilter system (with the original postfilter from HTS), the No Postfilter system (without a postfilter) and the Parallel Postfilter system (with a parallelized postfilter).

We ran a performance test to compare the response time of these three systems. The test corpus is a set of sentences of varying length (such as 4, 5, 6, and 10 words). For each length, three sentences were used for testing. The average response time of each system for each length group is shown in Figure 5. With parallel processing, the system responds faster, and the difference in response time becomes more significant as the length of the sentence increases.

Figure 5. The response time comparison of three systems: No Postfilter is the speech synthesis system without postfiltering, Original Postfilter is the system with the postfilter originating from HTS, and Parallel Postfilter is the system with the postfilter implemented using parallel processing

3.3.7. Performance in the VLSP TTS challenge 2018

Our proposed TTS system was also submitted to the VLSP TTS challenge 2018. The test set consists of 30 sentences in the news domain. Each team needs to submit 30 corresponding synthesized audio files. 20 people, including males and females, speakers of different dialects, phoneticians and non-phoneticians, were asked to score naturalness, intelligibility and MOS. As shown in Table 4, our TTS system (Viettel) won first place and significantly outperformed the other TTS systems in all subjects: naturalness, intelligibility, and MOS.

Table 4. The scores given by 3 teams in the VLSP TTS challenge 2018

| Team | Naturalness | Intelligibility | MOS |
|---|---|---|---|
| VAIS | 65.50 | 72.54 | 3.48 |
| MICA | 72.69 | 76.94 | 3.79 |
| Our system (Viettel) | 90.54 | 93.02 | 4.66 |

4. CONCLUSIONS

In this paper, we presented our effort to build the first DNN-based Vietnamese TTS system. To reduce the synthesis time, a method of using a parallel processing postfilter was proposed. Experimental results showed that using cleaned data improves the quality of synthesized speech given by the TTS system. We also showed that deeper architectures achieve better synthesized speech quality than shallow architectures such as an HMM or a neural network with 1 hidden layer. The results also indicated that less training data reduces speech quality. Generally speaking, in all cases, the DNN system outperforms the HMM system. Our TTS system also won first place in the VLSP TTS challenge 2018 in all three subjects: naturalness, intelligibility, and MOS.
Our future work is to optimize the TTS systems for different dialects in Vietnam.

Received on October 04, 2018. Revised on December 28, 2018.
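
Three of the objective metrics reported in Tables 1 and 2 (MCD, F0 RMSE and V/UV error) can be illustrated with a minimal sketch using their common textbook definitions. The paper does not spell out its exact formulas, so everything here is an assumption: the function names are hypothetical, frames are taken to be already time-aligned, the 0th (energy) cepstral coefficient is excluded from MCD, an F0 value of 0 marks an unvoiced frame, and F0 RMSE is computed in Hz over frames voiced in both tracks.

```python
import math


def mel_cepstral_distortion(ref_frames, syn_frames):
    """Mean MCD in dB over time-aligned mel-cepstral frames.

    Assumption: the 0th coefficient (energy) is excluded, a common
    convention that the source does not confirm.
    """
    if len(ref_frames) != len(syn_frames) or not ref_frames:
        raise ValueError("need two non-empty, aligned sequences of equal length")
    scale = 10.0 / math.log(10.0) * math.sqrt(2.0)
    total = 0.0
    for ref, syn in zip(ref_frames, syn_frames):
        squared = sum((r - s) ** 2 for r, s in zip(ref[1:], syn[1:]))
        total += scale * math.sqrt(squared)
    return total / len(ref_frames)


def f0_rmse_hz(ref_f0, syn_f0):
    """RMSE in Hz over frames that are voiced (F0 > 0) in both tracks."""
    diffs = [(r - s) ** 2 for r, s in zip(ref_f0, syn_f0) if r > 0 and s > 0]
    if not diffs:
        raise ValueError("no frame is voiced in both tracks")
    return math.sqrt(sum(diffs) / len(diffs))


def vuv_error_percent(ref_f0, syn_f0):
    """Percentage of frames whose voiced/unvoiced decision differs."""
    if len(ref_f0) != len(syn_f0) or not ref_f0:
        raise ValueError("need two non-empty F0 tracks of equal length")
    mismatches = sum((r > 0) != (s > 0) for r, s in zip(ref_f0, syn_f0))
    return 100.0 * mismatches / len(ref_f0)


# Toy data for illustration only; these are not values from the paper.
ref_mcep = [[1.0, 0.50, 0.20], [1.1, 0.40, 0.10]]
syn_mcep = [[0.9, 0.45, 0.25], [1.0, 0.42, 0.05]]
ref_f0 = [0.0, 100.0, 110.0, 0.0]
syn_f0 = [0.0, 104.0, 0.0, 120.0]

print("MCD (dB):", mel_cepstral_distortion(ref_mcep, syn_mcep))
print("F0 RMSE (Hz):", f0_rmse_hz(ref_f0, syn_f0))
print("V/UV error (%):", vuv_error_percent(ref_f0, syn_f0))
```

Lower values are better for all three, which is why the text describes the objective metrics as "reduced" after data cleaning.
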
