Kinect based character navigation in VR game

In the last two years, the game industry in Vietnam has attracted a great deal of investment and research. According to [1], Vietnam is the largest game market in Southeast Asia. However, limitations still exist, and solutions to them are still awaited. An important factor that makes a game attractive is the way its characters move. To control character movement, players usually have to touch and operate specialized devices such as a gamepad, a keyboard or a Wiimote. With advances in technology, however, players of virtual reality games no longer need to touch a game device: body actions or speech are captured by special devices such as Kinect or Virtuix Omni and used to control the character's movement.


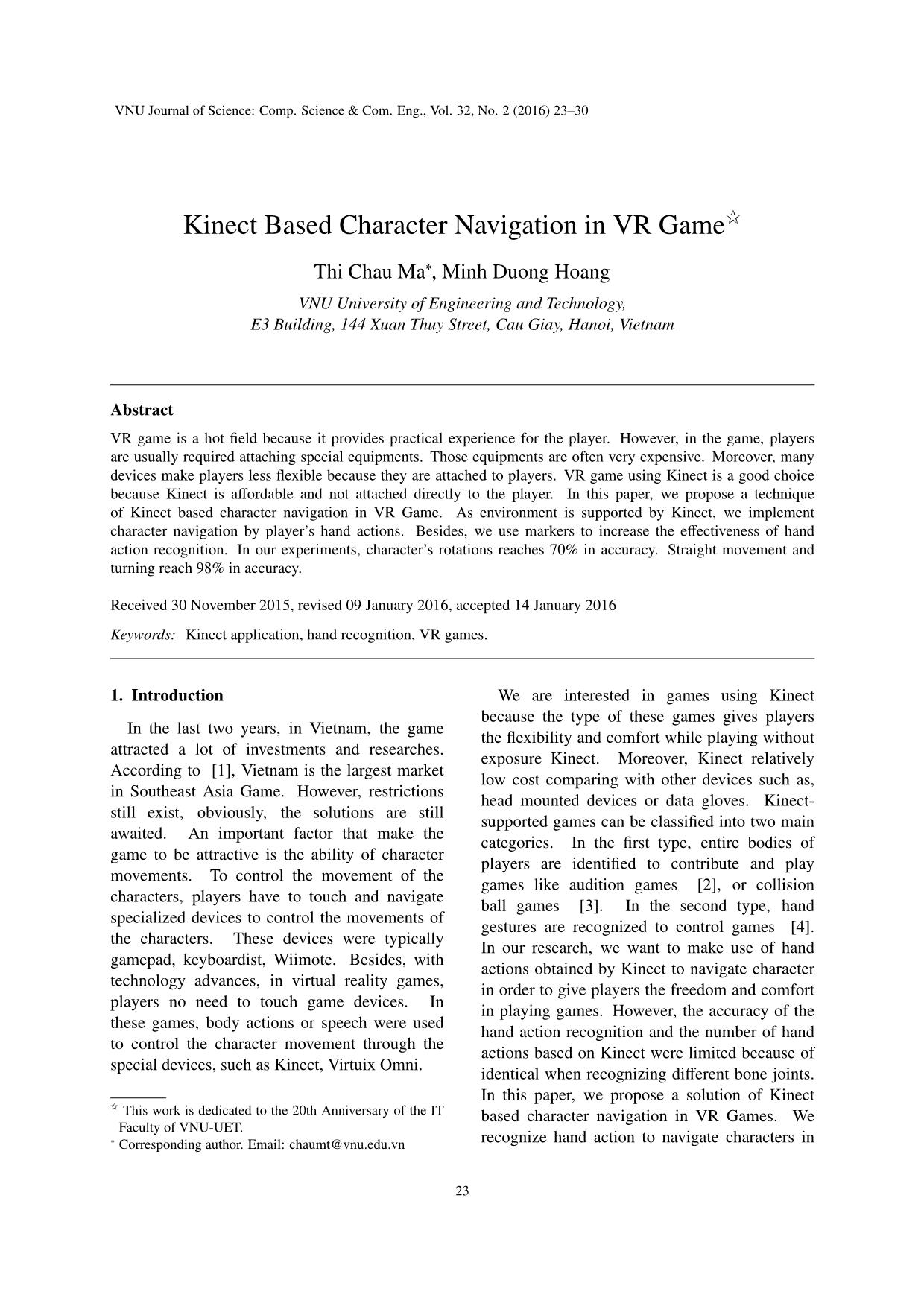

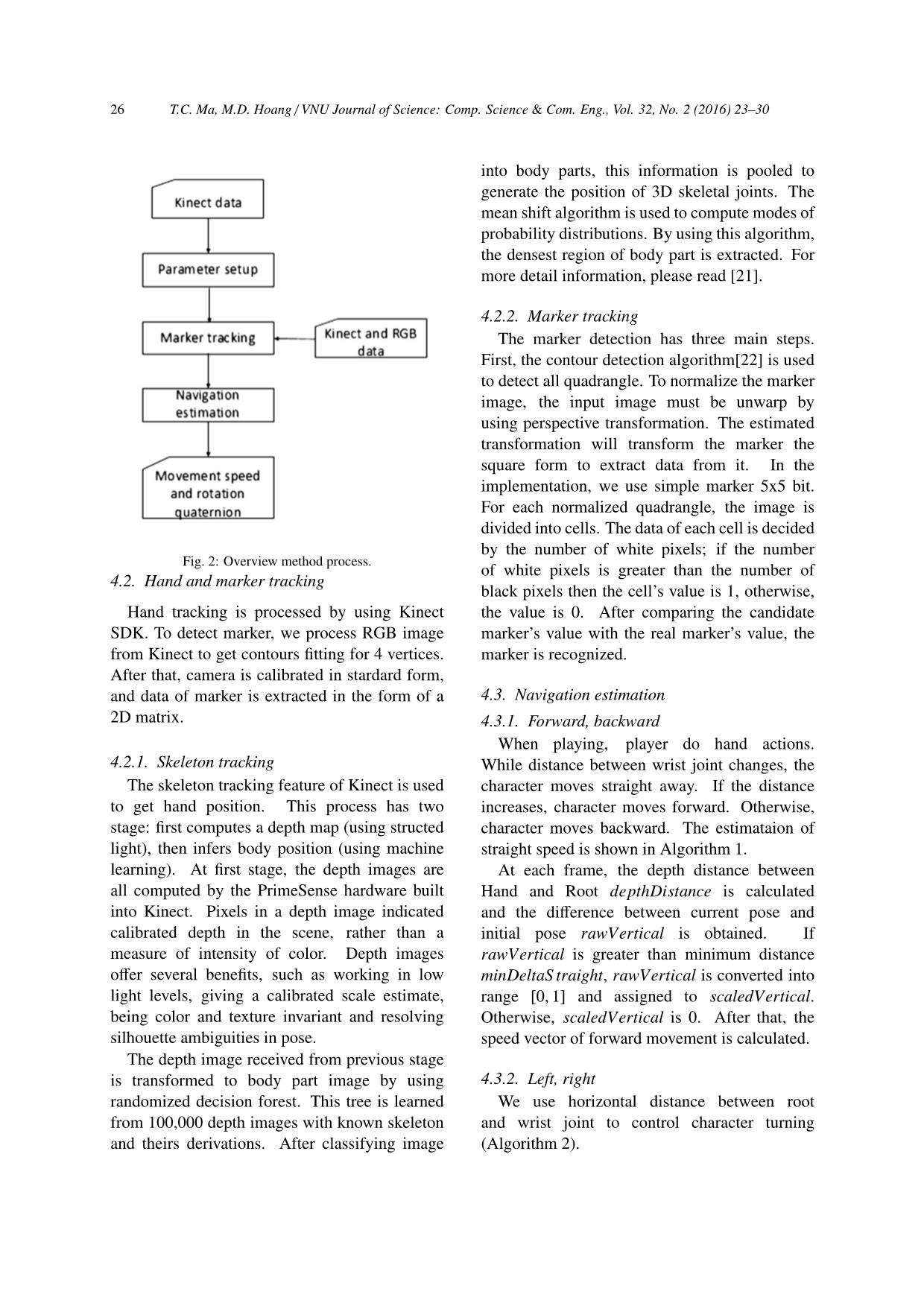

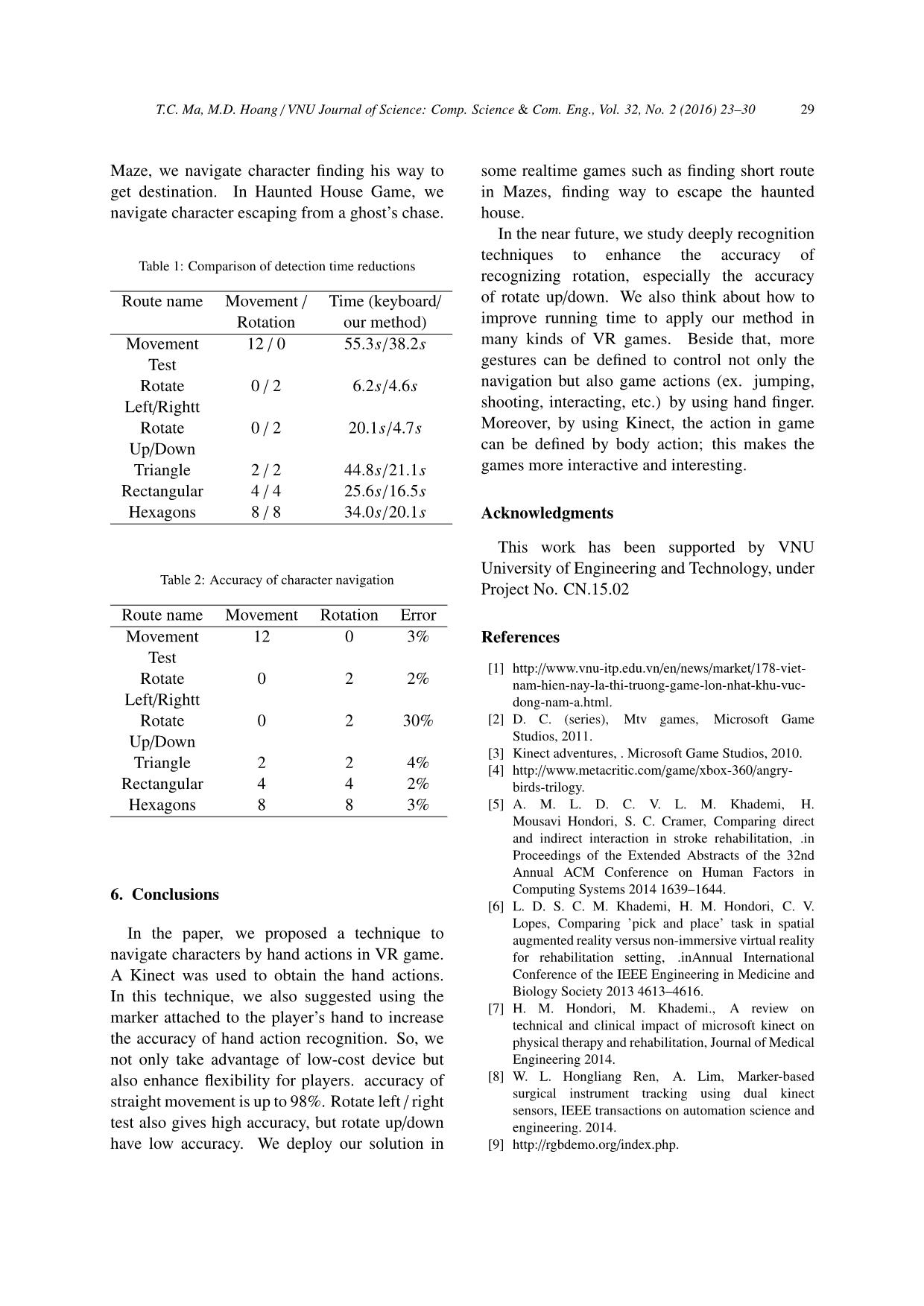

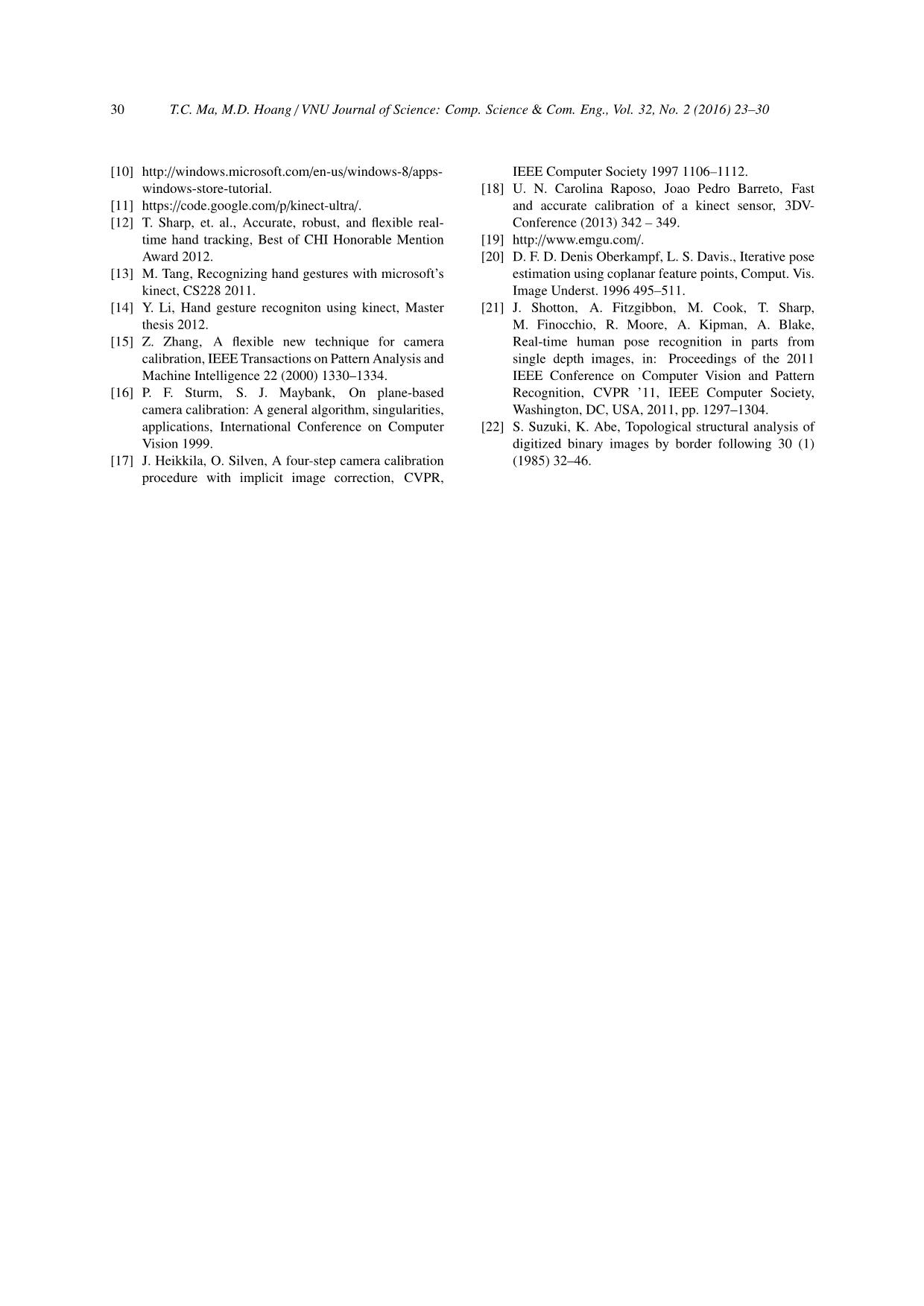

26 T.C. Ma, M.D. Hoang / VNU Journal of Science: Comp. Science & Com. Eng., Vol. 32, No. 2 (2016) 23–30

…the wrist towards or away from the root. The change in the distance from the wrist to the root decides the direction (forward/backward) and the speed of movement.
• Turning: move the wrist to the left or right while the distance between the wrist and the root does not change.
• Rotation: rotate the wrist. The change in angle decides the speed of rotation.

These actions are determined by comparing the position of the wrist with that of the root, and by the rotation of a marker glued on the thumb. In detail, the navigation technique includes three main steps (Fig. 2): parameter setup, hand and marker tracking, and navigation estimation. In parameter setup, players are asked to stand or sit in front of the Kinect.

At the beginning of the process, the player is required to stand in front of the Kinect so that initial data about the root and wrist joint positions can be collected. The player simply needs to hold a pose in which the Kinect can see both joints. The player wears a marker on the front of the fist; to make rotation easy to track, the marker should have the form of a checkerboard. We define the following reference distances from the initial depth data:
• InitDistStraight = RootDepth − HandDepth
• InitDistOrth = HandX − RootX
• HandRangeStraight = Max(HandDepth) − Min(HandDepth)
• HandRangeOrth = Max(HandX) − Min(HandX)
InitDistStraight uses the same sign convention as depthDistance in Algorithm 1, so it is positive when the hand is held in front of the body (closer to the sensor).
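The parameter-setup step can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' code: the sample layout `(hand_depth, hand_x, root_depth, root_x)`, the names `NavParams` and `setup_parameters`, and the choice of the first sample as the neutral pose are all hypothetical. InitDistStraight is computed as RootDepth − HandDepth, matching depthDistance in Algorithm 1, so the two can be subtracted directly.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple

# Hypothetical sample layout: (hand_depth, hand_x, root_depth, root_x),
# in whatever units the skeleton tracker reports.
Sample = Tuple[float, float, float, float]


@dataclass
class NavParams:
    init_dist_straight: float   # RootDepth - HandDepth in the neutral pose
    init_dist_orth: float       # HandX - RootX in the neutral pose
    hand_range_straight: float  # Max(HandDepth) - Min(HandDepth)
    hand_range_orth: float      # Max(HandX) - Min(HandX)


def setup_parameters(samples: Sequence[Sample]) -> NavParams:
    """Compute the reference distances from calibration samples.

    ASSUMPTION: samples[0] is the neutral pose, and the whole sequence
    covers the range of hand positions the player can comfortably reach.
    """
    if not samples:
        raise ValueError("need at least one calibration sample")
    hand_depth0, hand_x0, root_depth0, root_x0 = samples[0]
    hand_depths = [s[0] for s in samples]
    hand_xs = [s[1] for s in samples]
    return NavParams(
        # Same sign as depthDistance in Algorithm 1 (assumed convention).
        init_dist_straight=root_depth0 - hand_depth0,
        init_dist_orth=hand_x0 - root_x0,
        hand_range_straight=max(hand_depths) - min(hand_depths),
        hand_range_orth=max(hand_xs) - min(hand_xs),
    )
```

In practice the samples would be collected over a short calibration period while the player moves the hand through its comfortable range.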
Fig. 2: Overview of the method.

4.2. Hand and marker tracking

Hand tracking is performed with the Kinect SDK. To detect the marker, we process the RGB image from the Kinect to find contours that fit four vertices. After that, the camera is calibrated in standard form, and the marker data is extracted in the form of a 2D matrix.

4.2.1. Skeleton tracking

The skeleton tracking feature of Kinect is used to get the hand position. This process has two stages: it first computes a depth map (using structured light), then infers the body position (using machine learning). In the first stage, the depth images are computed by the PrimeSense hardware built into Kinect. Pixels in a depth image indicate calibrated depth in the scene rather than color intensity. Depth images offer several benefits, such as working in low light levels, giving a calibrated scale estimate, being color and texture invariant, and resolving silhouette ambiguities in pose. In the second stage, the depth image is transformed into a body part image by a randomized decision forest, learned from 100,000 depth images with known skeletons and their derivations. After the image is classified into body parts, this information is pooled to generate the positions of the 3D skeletal joints. The mean shift algorithm is used to compute the modes of the probability distributions, so that the densest region of each body part is extracted. For more details, see [21].

4.2.2. Marker tracking

Marker detection has three main steps. First, the contour detection algorithm [22] is used to detect all quadrangles. Second, to normalize the marker image, the input image is unwarped with a perspective transformation, which maps the marker to a square from which its data can be extracted. Third, the marker data is decoded. In our implementation we use a simple 5×5-bit marker: each normalized quadrangle is divided into cells, and if a cell contains more white pixels than black pixels its value is 1; otherwise, its value is 0. The marker is recognized by comparing the candidate marker's value with the real marker's value.
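The cell-voting rule of Section 4.2.2 can be illustrated with a small Python sketch. It assumes contour detection and perspective unwarping have already produced a square, binarized image (1 = white, 0 = black) whose side is a multiple of the cell count; `decode_marker` and `matches` are hypothetical helper names. Exact equality against a single reference pattern is the simplest reading of "comparing the candidate marker's value with the real marker's value".

```python
from typing import List, Sequence

Grid = List[List[int]]


def decode_marker(image: Sequence[Sequence[int]], cells: int = 5) -> Grid:
    """Decode an already-unwarped binary marker image into a cells x cells grid.

    ASSUMPTION: pixels are 0 (black) or 1 (white) and the image side is a
    multiple of `cells`. A cell is 1 only if it holds strictly more white
    than black pixels, so ties decode as 0.
    """
    size = len(image)
    if size == 0 or size % cells != 0 or any(len(row) != size for row in image):
        raise ValueError("image must be square with a side divisible by cells")
    step = size // cells
    bits: Grid = []
    for r in range(cells):
        row_bits = []
        for c in range(cells):
            white = sum(
                image[y][x]
                for y in range(r * step, (r + 1) * step)
                for x in range(c * step, (c + 1) * step)
            )
            black = step * step - white
            row_bits.append(1 if white > black else 0)
        bits.append(row_bits)
    return bits


def matches(candidate: Grid, reference: Grid) -> bool:
    """Recognize the marker when every decoded bit equals the reference bit."""
    return candidate == reference
```

For example, a 10×10 image in which each marker cell is a 2×2 block of identical pixels decodes back to the original 5×5 pattern.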
4.3. Navigation estimation

4.3.1. Forward, backward

While playing, the player performs hand actions. When the distance between the wrist and root joints changes, the character moves straight: if the distance increases, the character moves forward; otherwise, it moves backward. The estimation of the straight speed is shown in Algorithm 1. At each frame, the depth distance between Hand and Root, depthDistance, is calculated, and the difference between the current pose and the initial pose, rawVertical, is obtained. If rawVertical is greater than the minimum distance minDeltaStraight, it is converted into the range [0, 1] and assigned to scaledVertical; otherwise, scaledVertical is 0. Finally, the speed vector of the forward movement is calculated.

4.3.2. Left, right

We use the horizontal distance between the root and wrist joints to control character turning (Algorithm 2).

Algorithm 1: Estimate forward/backward
depthDistance ← RootDepth − HandDepth
rawVertical ← depthDistance − InitDistStraight
if rawVertical > minDeltaStraight then
  scaledVertical ← rawVertical / HandRangeStraight
else
  scaledVertical ← 0
StraightSpeed ← StraightVector × playerMaxSpeed × scaledVertical

Algorithm 2: Estimate left/right movement
orthDistance ← HandX − RootX
rawHorizontal ← orthDistance − InitDistOrth
if rawHorizontal > minDeltaOrth then
  scaledHorizontal ← rawHorizontal / HandRangeOrth
else
  scaledHorizontal ← 0
OrthoSpeed ← OrthoVector × playerMaxSpeed × scaledHorizontal

The horizontal distance between Hand and Root, orthDistance, is obtained, and the difference between the current pose and the initial pose, rawHorizontal, is calculated by subtracting InitDistOrth from the current orthDistance. If rawHorizontal is greater than the minimum distance minDeltaOrth, it is converted into the range [0, 1] and assigned to scaledHorizontal; otherwise, scaledHorizontal is 0. Finally, the speed vector of the left movement is calculated.
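Algorithms 1 and 2 share one structure (offset from the calibrated pose, dead zone, normalization by the hand range), so the Python sketch below factors it into a hypothetical helper, `scaled_axis`. Two assumptions go beyond the printed pseudocode and are marked in the code: the dead zone is tested on |raw| so that negative offsets produce backward (or opposite-side) movement, as the prose of Section 4.3.1 describes, and the scaled value is clamped to [−1, 1]. Vectors are plain 3-tuples; the direction vectors and the maximum speed would come from the game engine.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def scaled_axis(distance: float, init_dist: float, min_delta: float,
                hand_range: float) -> float:
    """Offset from the calibrated pose, dead zone, then normalization."""
    raw = distance - init_dist
    # ASSUMPTION: |raw| is tested so negative offsets also move the player;
    # the printed pseudocode only tests raw > minDelta.
    if abs(raw) <= min_delta or hand_range <= 0:
        return 0.0
    # ASSUMPTION: clamp so the result stays within [-1, 1].
    return max(-1.0, min(1.0, raw / hand_range))


def _scale(vector: Vec3, factor: float) -> Vec3:
    x, y, z = vector
    return (x * factor, y * factor, z * factor)


def straight_speed(root_depth: float, hand_depth: float,
                   init_dist_straight: float, min_delta_straight: float,
                   hand_range_straight: float,
                   straight_vector: Vec3, player_max_speed: float) -> Vec3:
    """Algorithm 1: forward (positive) or backward (negative) speed."""
    depth_distance = root_depth - hand_depth
    scaled_vertical = scaled_axis(depth_distance, init_dist_straight,
                                  min_delta_straight, hand_range_straight)
    return _scale(straight_vector, player_max_speed * scaled_vertical)


def ortho_speed(hand_x: float, root_x: float,
                init_dist_orth: float, min_delta_orth: float,
                hand_range_orth: float,
                ortho_vector: Vec3, player_max_speed: float) -> Vec3:
    """Algorithm 2: sideways speed; the sign gives the direction."""
    orth_distance = hand_x - root_x
    scaled_horizontal = scaled_axis(orth_distance, init_dist_orth,
                                    min_delta_orth, hand_range_orth)
    return _scale(ortho_vector, player_max_speed * scaled_horizontal)
```

Keeping the dead zone symmetric means small tremors around the neutral pose are ignored in both directions, which is the behaviour the prose describes.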
4.3.3. Rotation

The player rotates the wrist to control the character's rotation, and the marker rotates with the hand. Using the coplanar POSIT algorithm [20], the estimated pose can be decomposed into three axis angles (i.e. yaw, pitch, roll). The estimated roll angle is used to rotate left or right, and the pitch angle decides whether to rotate the player up or down. Then, at each frame, the angle increment is added to the user's quaternion to change the player's orientation (Algorithm 3).

First, the left/right rotation is measured. By tracking the marker, the marker rotation state markerState is estimated, and the rotation and translation matrix HomoMat is calculated by the coplanar POSIT algorithm. After the yaw, pitch and roll angles are extracted, markerState is checked. If the marker is in the FRONT state and the roll angle is greater than the minimum rotation angle minAngleX, axisX is obtained by normalizing the roll angle into the range [0, 1]. If the marker is in the LEFT or RIGHT state, axisX is 1 or −1, respectively.

The up/down rotation is measured using the pitch angle instead of the yaw angle, because the yaw estimate is unstable. If the pitch angle is too small, the player does not rotate. Because the pitch value is the same whether the marker rotates up or down, the roll and yaw values are checked to infer the intended direction: if both roll and yaw are less than 90°, the player wants to rotate up; otherwise, the player rotates down. After axisX and axisY are normalized, the per-frame rotation amounts ΔangleX and ΔangleY are calculated.

Algorithm 3: Estimate rotation
axisX ← 0, axisY ← 0
markerState ← Extract(markerData)   % detect the marker on the thumb %
HomoMat ← CoPOSIT(source, des)   % extract the homography matrix %
(yaw, pitch, roll) ← Extract(HomoMat)   % determine yaw, pitch and roll %
if markerState == FRONT then
  if Abs(roll) > minAngleX then
    axisX ← roll / maxAngleX
  else
    axisX ← 0
else
  if markerState == LEFT then
    axisX ← 1
  else
    axisX ← −1
pitch ← pitch + 90
if pitch < minAngleY then
  axisY ← 0
else
  if roll < 90 and yaw < 90 then
    axisY ← Abs(pitch) / maxAngleY
  else
    axisY ← −Abs(pitch) / maxAngleY
ΔangleX ← axisX × damping × TimeFrame
ΔangleY ← axisY × damping × TimeFrame

Fig. 3: A test map.

Fig. 4: A test.
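The decision logic of Algorithm 3 can be sketched in Python as follows. Marker detection and the coplanar POSIT pose estimate are not implemented: the marker state and the (yaw, pitch, roll) angles, in degrees, are taken as inputs, and all function and parameter names are hypothetical. Applying ΔangleX and ΔangleY to the player's quaternion is engine-specific and omitted.

```python
from enum import Enum
from typing import Tuple


class MarkerState(Enum):
    FRONT = "front"
    LEFT = "left"
    RIGHT = "right"


def estimate_rotation(state: MarkerState, yaw: float, pitch: float, roll: float,
                      min_angle_x: float, max_angle_x: float,
                      min_angle_y: float, max_angle_y: float,
                      damping: float, time_frame: float) -> Tuple[float, float]:
    """Return (delta_angle_x, delta_angle_y) for one frame; angles in degrees.

    ASSUMPTION: `state` and the three angles come from an external marker
    detector and coplanar pose estimator, which are not shown here.
    """
    # Left/right: use roll when the marker faces the camera; otherwise turn
    # at full rate towards the side the marker shows (LEFT -> 1, else -> -1).
    if state is MarkerState.FRONT:
        axis_x = roll / max_angle_x if abs(roll) > min_angle_x else 0.0
    elif state is MarkerState.LEFT:
        axis_x = 1.0
    else:
        axis_x = -1.0

    # Up/down: shift pitch by 90 degrees, ignore small values, and pick the
    # sign from roll and yaw because pitch alone cannot tell up from down.
    pitch += 90.0
    if pitch < min_angle_y:
        axis_y = 0.0
    elif roll < 90.0 and yaw < 90.0:
        axis_y = abs(pitch) / max_angle_y
    else:
        axis_y = -abs(pitch) / max_angle_y

    return (axis_x * damping * time_frame, axis_y * damping * time_frame)
```

The `else` branch for axis_x mirrors the pseudocode: any non-FRONT, non-LEFT state is treated as RIGHT.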
5. Evaluation

To demonstrate the effectiveness of the proposed method, we experimented on several routes (Fig. 3). These routes have the shapes of lines, triangles, rectangles and hexagons. For each route, we estimated the minimum number of movement actions (i.e. forward, backward, left, right) and rotation actions (i.e. left/right, up/down) needed to complete the test (Fig. 4). We compare the time to complete each test using this method with the keyboard/mouse method (Table 1). Although our method has not yet matched the completion times of the keyboard/mouse, its runtime is acceptable in small games that mainly involve character movement. The proposed method works well with simple movement actions such as forward, backward, left and right; however, the accuracy decreases slightly with compound movement actions such as forward-left and backward-left. According to Table 2, the accuracy of up/down rotation is the lowest, because of shadows and errors of the estimation algorithm.

Each test is defined by a sequence of movements and rotations. An error is defined as follows: "when the user sends a control signal and the response is incorrect, or the response time exceeds a time threshold, an error occurs". To calculate the accuracy, we ran each test several times (around 20). The experimenters perform the sequence of actions defined by the test in order to reach the final destination. For each signal sent, if the resulting movement/rotation is incorrect or the response time is too long (the time threshold is 1 second), it is counted as an error. The accuracy is the percentage of correct actions among all actions, where the number of correct actions equals the number of actions minus the number of errors.
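The error rule and accuracy formula of Section 5 amount to the computation below, sketched in Python with hypothetical names. Each record is assumed to hold whether the response was correct and the response time in seconds; a response slower than the 1-second threshold counts as an error even if it was correct.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class SignalResult:
    correct: bool          # did the character perform the requested action?
    response_time: float   # seconds from sending the signal to the response


def is_error(result: SignalResult, threshold: float = 1.0) -> bool:
    """An action is an error if the response is wrong or slower than the threshold."""
    return (not result.correct) or result.response_time > threshold


def accuracy(results: Iterable[SignalResult], threshold: float = 1.0) -> float:
    """Percentage of correct actions: (actions - errors) / actions * 100."""
    items = list(results)
    if not items:
        raise ValueError("no actions recorded")
    errors = sum(is_error(r, threshold) for r in items)
    return 100.0 * (len(items) - errors) / len(items)
```

For example, 20 actions with one wrong response and one correct but late response give (20 − 2) / 20 = 90% accuracy.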
We applied Kinect based character navigation in a Maze Game and a Haunted House Game. In the Maze Game, we navigate the character to find its way to the destination. In the Haunted House Game, we navigate the character to escape from a ghost's chase.

Table 1: Comparison of detection time reductions

| Route name | Movement / Rotation actions | Time (keyboard / our method) |
|---|---|---|
| Movement Test | 12 / 0 | 55.3 s / 38.2 s |
| Rotate Left/Right | 0 / 2 | 6.2 s / 4.6 s |
| Rotate Up/Down | 0 / 2 | 20.1 s / 4.7 s |
| Triangle | 2 / 2 | 44.8 s / 21.1 s |
| Rectangular | 4 / 4 | 25.6 s / 16.5 s |
| Hexagons | 8 / 8 | 34.0 s / 20.1 s |

Table 2: Accuracy of character navigation

| Route name | Movement | Rotation | Error |
|---|---|---|---|
| Movement Test | 12 | 0 | 3% |
| Rotate Left/Right | 0 | 2 | 2% |
| Rotate Up/Down | 0 | 2 | 30% |
| Triangle | 2 | 2 | 4% |
| Rectangular | 4 | 4 | 2% |
| Hexagons | 8 | 8 | 3% |

6. Conclusions

In this paper, we proposed a technique to navigate characters in VR games by hand actions. A Kinect is used to capture the hand actions, and we also suggested attaching a marker to the player's hand to increase the accuracy of hand action recognition. Thus, we not only take advantage of a low-cost device but also enhance flexibility for players. The accuracy of straight movement is up to 98%. The rotate left/right test also gives high accuracy, but rotate up/down has low accuracy. We deployed our solution in some real-time games, such as finding a short route in mazes and finding a way to escape a haunted house. In the near future, we will study recognition techniques in more depth to improve the accuracy of recognizing rotation, especially up/down rotation. We will also consider how to improve the running time so that the method can be applied to many kinds of VR games. Besides that, more gestures using the fingers could be defined to control not only navigation but also game actions (e.g. jumping, shooting, interacting). Moreover, with Kinect, in-game actions can be defined by body actions, which makes games more interactive and interesting.

Acknowledgments

This work has been supported by VNU University of Engineering and Technology, under Project No. CN.15.02.

References

[1] nam-hien-nay-la-thi-truong-game-lon-nhat-khu-vuc-dong-nam-a.html.
[2] D. C. (series), MTV Games, Microsoft Game Studios, 2011.
[3] Kinect Adventures, Microsoft Game Studios, 2010.
[4] birds-trilogy.
[5] A. M. L. D. C. V. L. M. Khademi, H. Mousavi Hondori, S. C. Cramer, Comparing direct and indirect interaction in stroke rehabilitation, in: Proceedings of the Extended Abstracts of the 32nd Annual ACM Conference on Human Factors in Computing Systems, 2014, pp. 1639–1644.
[6] L. D. S. C. M. Khademi, H. M. Hondori, C. V. Lopes, Comparing 'pick and place' task in spatial augmented reality versus non-immersive virtual reality for rehabilitation setting, in: Annual International Conference of the IEEE Engineering in Medicine and Biology Society, 2013, pp. 4613–4616.
[7] H. M. Hondori, M. Khademi, A review on technical and clinical impact of Microsoft Kinect on physical therapy and rehabilitation, Journal of Medical Engineering, 2014.
[8] W. L. Hongliang Ren, A. Lim, Marker-based surgical instrument tracking using dual Kinect sensors, IEEE Transactions on Automation Science and Engineering, 2014.
[9]
[10] windows-store-tutorial.
[11] https://code.google.com/p/kinect-ultra/.
[12] T. Sharp, et al., Accurate, robust, and flexible real-time hand tracking, Best of CHI Honorable Mention Award, 2012.
[13] M. Tang, Recognizing hand gestures with Microsoft's Kinect, CS228, 2011.
[14] Y. Li, Hand gesture recognition using Kinect, Master's thesis, 2012.
[15] Z. Zhang, A flexible new technique for camera calibration, IEEE Transactions on Pattern Analysis and Machine Intelligence 22, 2000, pp. 1330–1334.
[16] P. F. Sturm, S. J. Maybank, On plane-based camera calibration: A general algorithm, singularities, applications, International Conference on Computer Vision, 1999.
[17] J. Heikkila, O. Silven, A four-step camera calibration procedure with implicit image correction, CVPR, IEEE Computer Society, 1997, pp. 1106–1112.
[18] U. N. Carolina Raposo, Joao Pedro Barreto, Fast and accurate calibration of a Kinect sensor, 3DV Conference, 2013, pp. 342–349.
[19]
[20] D. F. D. Denis Oberkampf, L. S. Davis, Iterative pose estimation using coplanar feature points, Comput. Vis. Image Underst., 1996, pp. 495–511.
[21] J. Shotton, A. Fitzgibbon, M. Cook, T. Sharp, M. Finocchio, R. Moore, A. Kipman, A. Blake, Real-time human pose recognition in parts from single depth images, in: Proceedings of the 2011 IEEE Conference on Computer Vision and Pattern Recognition, CVPR '11, IEEE Computer Society, Washington, DC, USA, 2011, pp. 1297–1304.
[22] S. Suzuki, K. Abe, Topological structural analysis of digitized binary images by border following, 30 (1), 1985, pp. 32–46.
