Generating test data for software structural testing using particle swarm optimization

Software is amandatory part of today's life,

and has become more and more important in

current information society. However, its

failure may lead to significanteconomic loss or

threat to life safety. As a consequence, software

qualityhas become a top concern today. Among

the methods of software quality assurance,

software testing has been proven as one of the

effective approachesto ensure and improve

software quality over the past threedecades.

However, as most of the software testing is

being done manually, the workforce and cost

required are accordingly high [1]. In general,

about 50 percent of workforce and cost in the

software development process is spent on

software testing [2]. Considering those reasons,

automated software testing has been evaluated

as an efficient and necessary method in order to

reduce those efforts and costs.

Automated structural test data generation is

becoming the research topic attracting much

interest in automated software testingbecause it

enhances the efficiency while reducing

considerably costs of software testing. In our

paper, we will focus on path coverage test data

generation, considering that almost all structural

test data generation problems can be transformed

to the path coverage test datageneration one.

Moreover, Kernighan and Plauger [3] also pointed

out that path coverage test data generation can

find out more than 65 percent of bugs in the given

program under test (PUT).

Trang 1

Trang 2

Trang 3

Trang 4

Trang 5

Trang 6

Trang 7

Trang 8

Trang 9

Trang 10

Tải về để xem bản đầy đủ

Tóm tắt nội dung tài liệu: Generating test data for software structural testing using particle swarm optimization

, 4) + fBchDist(month, ≠, 6) + f4F

month= 9 || month= 11), F] fBchDist (month, ≠, 9) + fBchDist(month, ≠, 11)

o

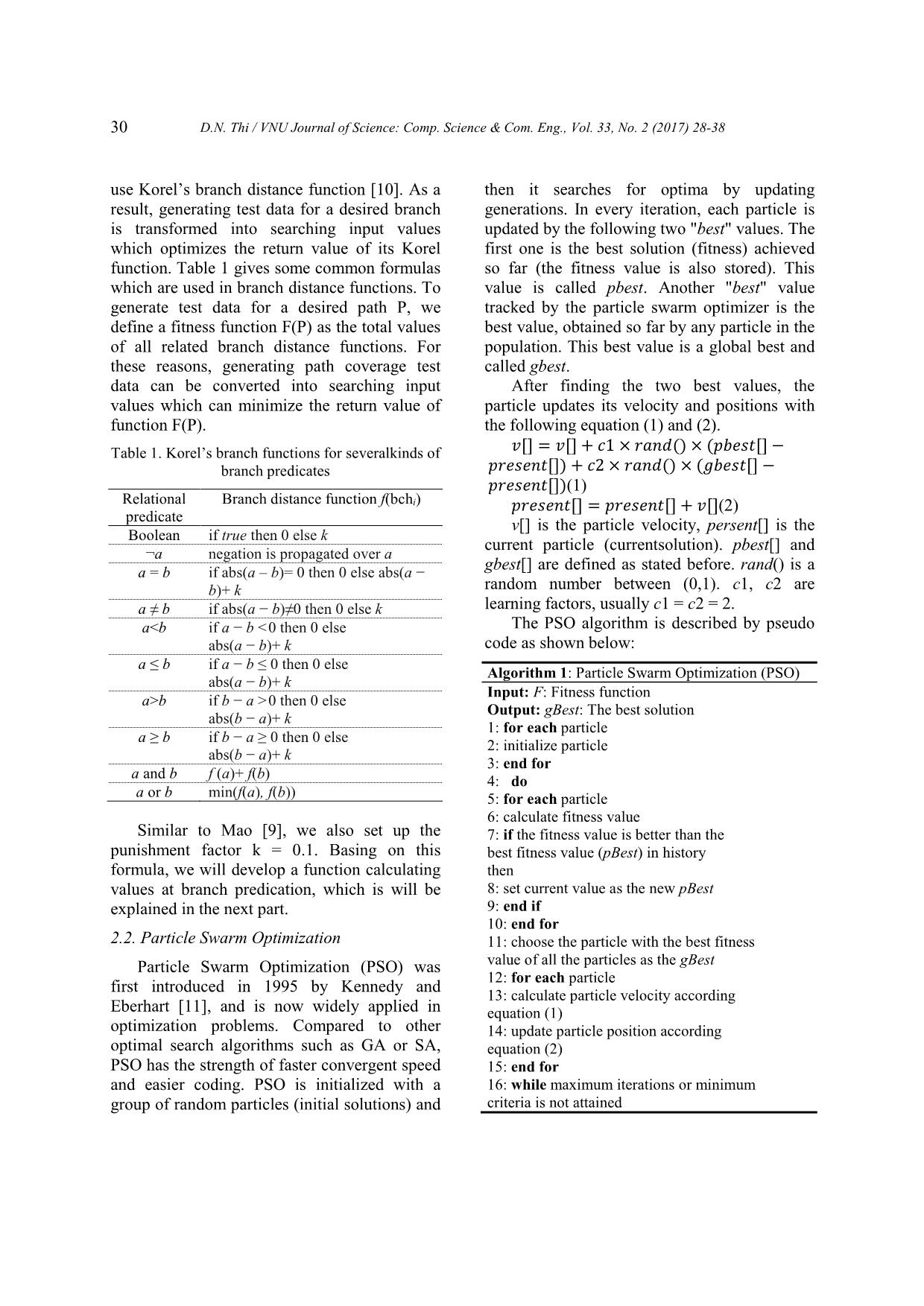

Algorithm 4: Branch distance function (fBchDist) 12: case “≥”:

Input: double a, condition type, double b 13 if b − a ≥ 0 then return 0 else return

Output:branch distance value (abs(b − a) + k)

1: switch (condition type) 14: end switch

2: case “=”:

3: if abs(a − b) = 0 then retrun 0 else

return abs(a − b) + k) Base onthese formulas, forcalculating

4: case “≠”: fitness value for each branch predication, we

5: if abs(a − b)≠0 then return 0 else generate the fitness function for each test path

return k of the PUT getDayNum as below:

6: case “<”: Table 4. Fitness functions for each test path

7: if a − b <0 then return 0 else return of PUT getDayNum

(abs(a − b) + k)

8: case “≤”: PathID Test path fitness functions

9: if a − b ≤ 0 then return 0 else return path1 F1 = f1T + f2T + f3T

(abs(a − b) + k) path2 F2 = f1T + f2T + f3F

10: case “>”: path3 F3 = f1T + f2F + f4T

11: if b − a >0 then return 0 else return path4 F4 = f1T + f2F + f4F

(abs(b − a) + k) path5 F5 = f1F

D.N. Thi / VNU Journal of Science: Comp. Science & Com. Eng., Vol. 33, No. 2 (2017) 28-38 35

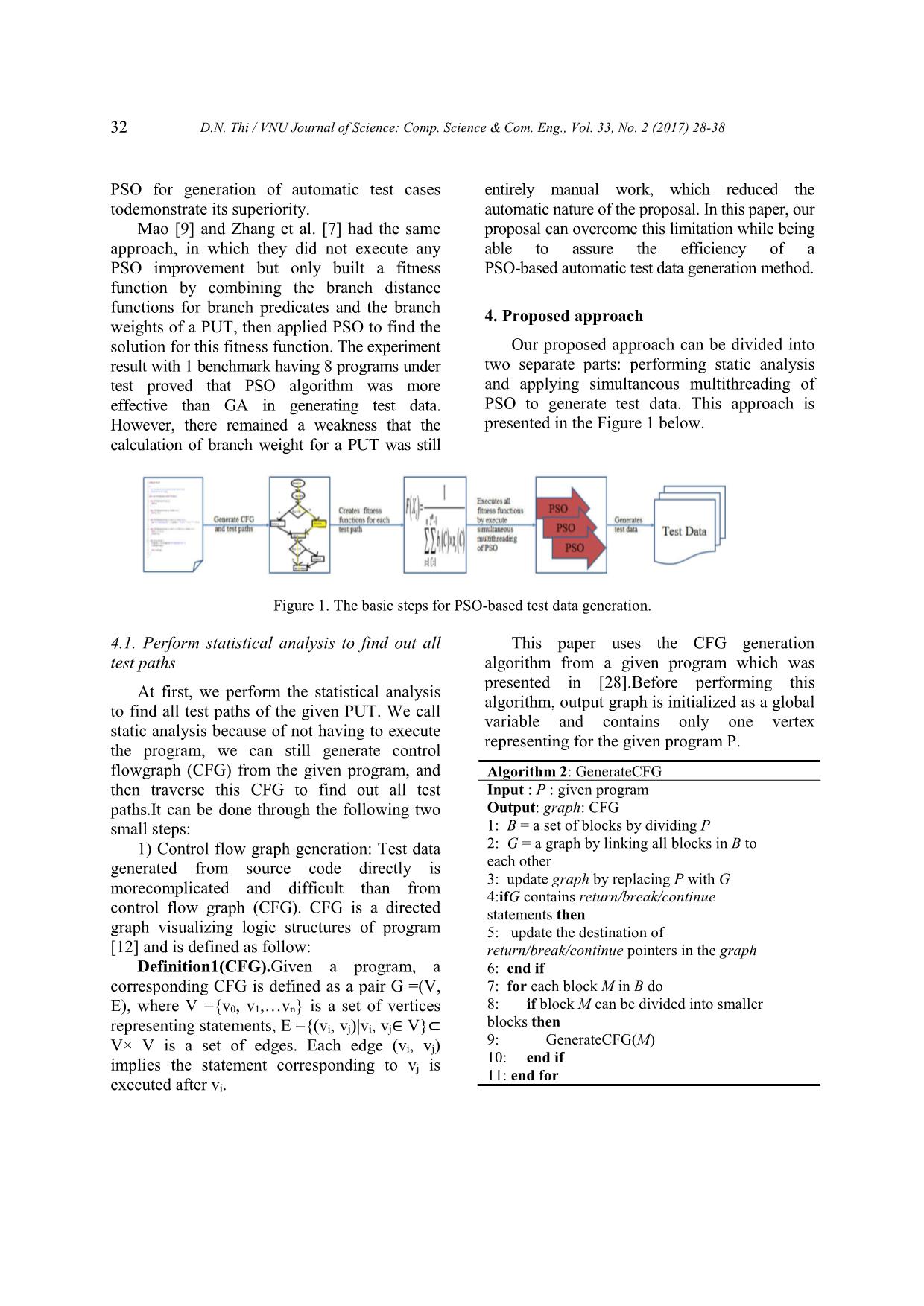

4.3. Apply multithreading of Particle Swarm 5. Experimental analysis

Optimization

We compared our experimental result to

With each fitness function of each test path, Mao’s proposal [9] in 2 criteria: the automatic

we use one PSO to find its solution (in this case ability of test data generation and the coverage

the solution means the test data which can cover capabilities of each proposal for each PUT of

the corresponding test path). In order to find the the given benchmark. Also we show our

solution for all fitness functions at the same approach is better than state-of-the-art

time, we perform simultaneous multithreading constraint-based test data generator Symbolic

of the PSO algorithm by defining PSO it as 1 PathFinder [21].

class extends Thread class of Java as follows:

public class PSOProcess extends Thread 5.1. Automatic ability

The multithreading of PSO can be executed

When referring to an automatic test data

through below algorithm:

generation method, the actual coverage of

"automatic" ability is one of the key criteria to

Algorithm 5: Multithreading of Particle Swarm

Optimization(MPSO) decide the proposal’s effectiveness. Mao [9]

Input: list of fitness functions used only 1 fitness to generate test data for all

Output:the set of test data that is solution to test paths of a PUT, therefore he had to

cover corresponding test path combine branch weight for each test path into

1: for each fitness function Fi the fitness function. The build of a branch

2: initialize an object psoi of class weight function (and also the fitness function)

PSOProcess is purely manual, and for long and complex

3: assign a fitness function Fi to object psoi PUT, sometimes it is even harder than

4: execute object pso: pso.start(); generating test data for the test paths, therefore

5: end for it affected the efficiency of his proposed

approach.

The experimental results of the above steps On the opposite side, taking advantage of

gave the results that our proposal has generated the fast convergence of PSO algorithm, we

test data which covered all test paths of propose the solution of using separate fitness

PUTgetDayNum: function for each test path. This solution has

clear benefits:

1. As there is no need to build the branch

weight function, the automatic feature of this

proposal will be improved.

2. The fitness functions are automatically

built basing on the pair of branch predication

and its decision of each test path, and these

pairs can be entirely generated automatically

from a PUT with above mentioned algorithm 2

and 3. This obviously advances the automatic

ability in our proposal.

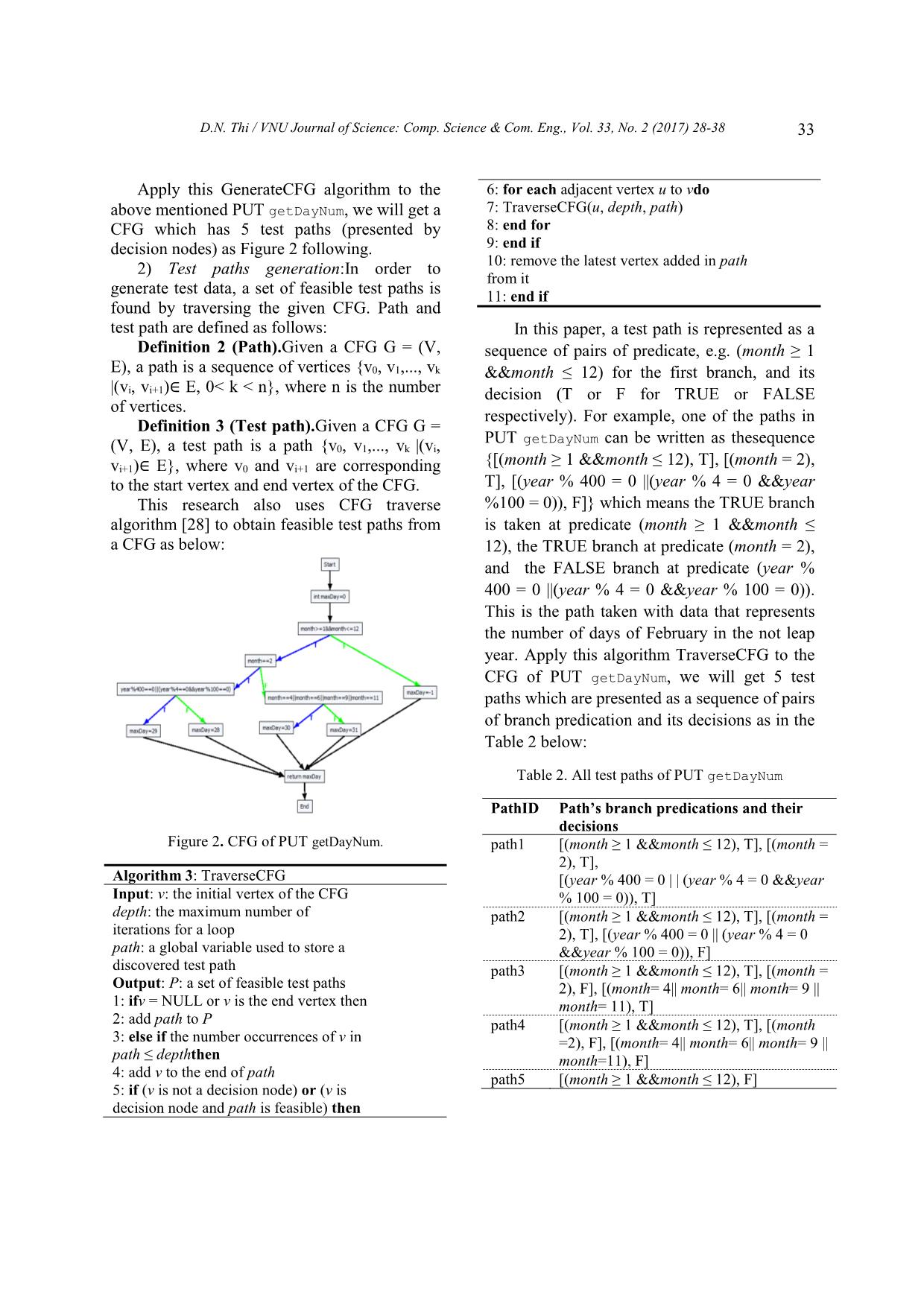

5.2. Path coverage ability

Figure 4. Generated test data for the PUT We also confirmed our proposed approach

getDayNum. on the benchmark which is used in Mao’s paper

[9]. We performed in the environment of MS

Windows 7 Ultimate with 32-bits and ran on

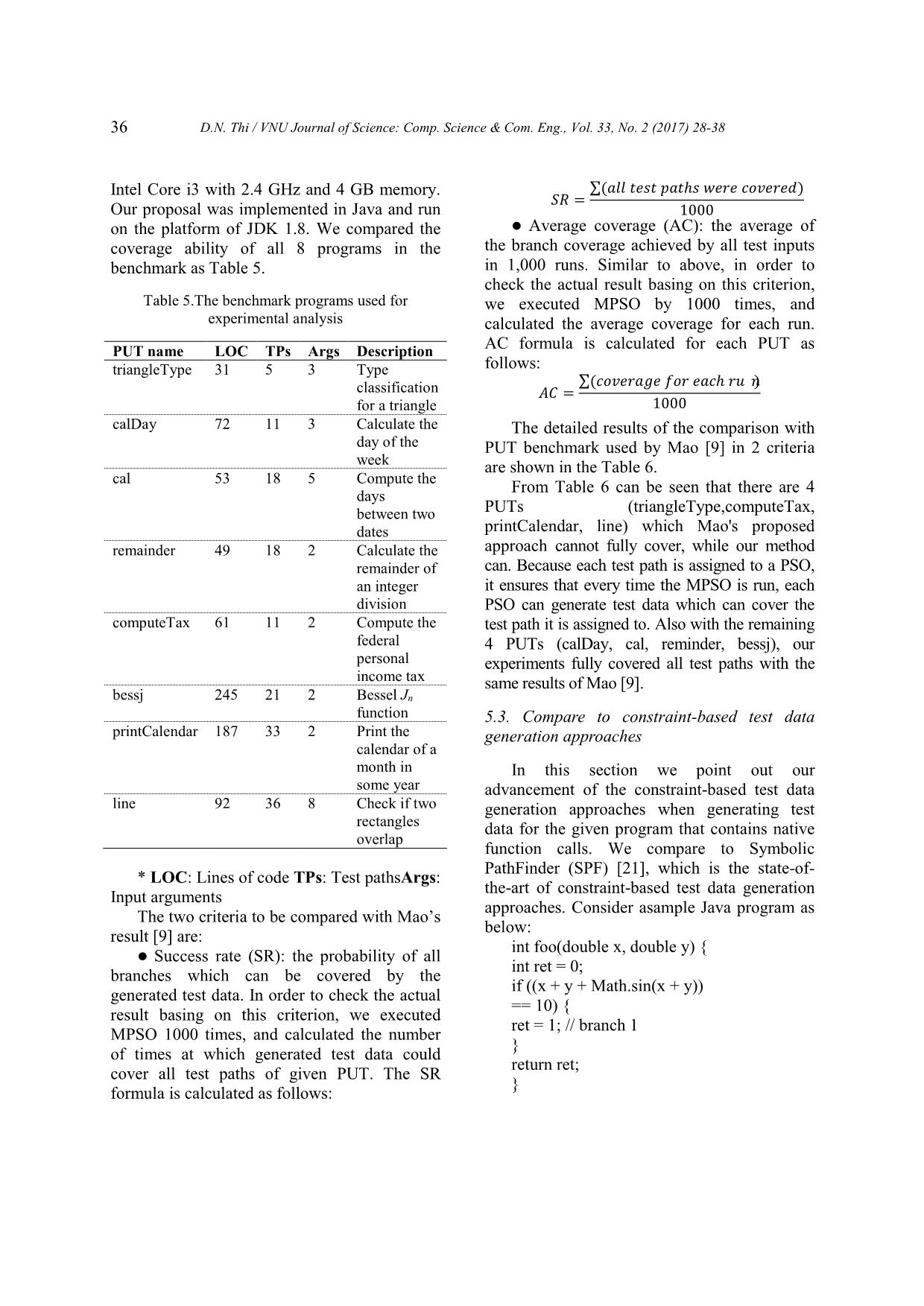

36 D.N. Thi / VNU Journal of Science: Comp. Science & Com. Eng., Vol. 33, No. 2 (2017) 28-38

Intel Core i3 with 2.4 GHz and 4 GB memory. ∑(��� ���� ���ℎ� ���� �������)

�� =

Our proposal was implemented in Java and run 1000

on the platform of JDK 1.8. We compared the Average coverage (AC): the average of

coverage ability of all 8 programs in the the branch coverage achieved by all test inputs

benchmark as Table 5. in 1,000 runs. Similar to above, in order to

check the actual result basing on this criterion,

Table 5.The benchmark programs used for we executed MPSO by 1000 times, and

experimental analysis calculated the average coverage for each run.

PUT name LOC TPs Args Description AC formula is calculated for each PUT as

triangleType 31 5 3 Type follows:

∑(�������� ��� ���ℎ ���)

classification �� =

for a triangle 1000

calDay 72 11 3 Calculate the The detailed results of the comparison with

day of the PUT benchmark used by Mao [9] in 2 criteria

week are shown in the Table 6.

cal 53 18 5 Compute the From Table 6 can be seen that there are 4

days

between two PUTs (triangleType,computeTax,

dates printCalendar, line) which Mao's proposed

remainder 49 18 2 Calculate the approach cannot fully cover, while our method

remainder of can. Because each test path is assigned to a PSO,

an integer it ensures that every time the MPSO is run, each

division PSO can generate test data which can cover the

computeTax 61 11 2 Compute the test path it is assigned to. Also with the remaining

federal 4 PUTs (calDay, cal, reminder, bessj), our

personal experiments fully covered all test paths with the

income tax same results of Mao [9].

bessj 245 21 2 Bessel Jn

function 5.3. Compare to constraint-based test data

printCalendar 187 33 2 Print the generation approaches

calendar of a

month in In this section we point out our

some year advancement of the constraint-based test data

line 92 36 8 Check if two generation approaches when generating test

rectangles data for the given program that contains native

overlap

function calls. We compare to Symbolic

PathFinder (SPF) [21], which is the state-of-

* LOC: Lines of code TPs: Test pathsArgs:

the-art of constraint-based test data generation

Input arguments

approaches. Consider asample Java program as

The two criteria to be compared with Mao’s

below:

result [9] are:

int foo(double x, double y) {

Success rate (SR): the probability of all

int ret = 0;

branches which can be covered by the

if ((x + y + Math.sin(x + y))

generated test data. In order to check the actual

== 10) {

result basing on this criterion, we executed

ret = 1; // branch 1

MPSO 1000 times, and calculated the number

}

of times at which generated test data could

return ret;

cover all test paths of given PUT. The SR

}

formula is calculated as follows:

D.N. Thi / VNU Journal of Science: Comp. Science & Com. Eng., Vol. 33, No. 2 (2017) 28-38 37

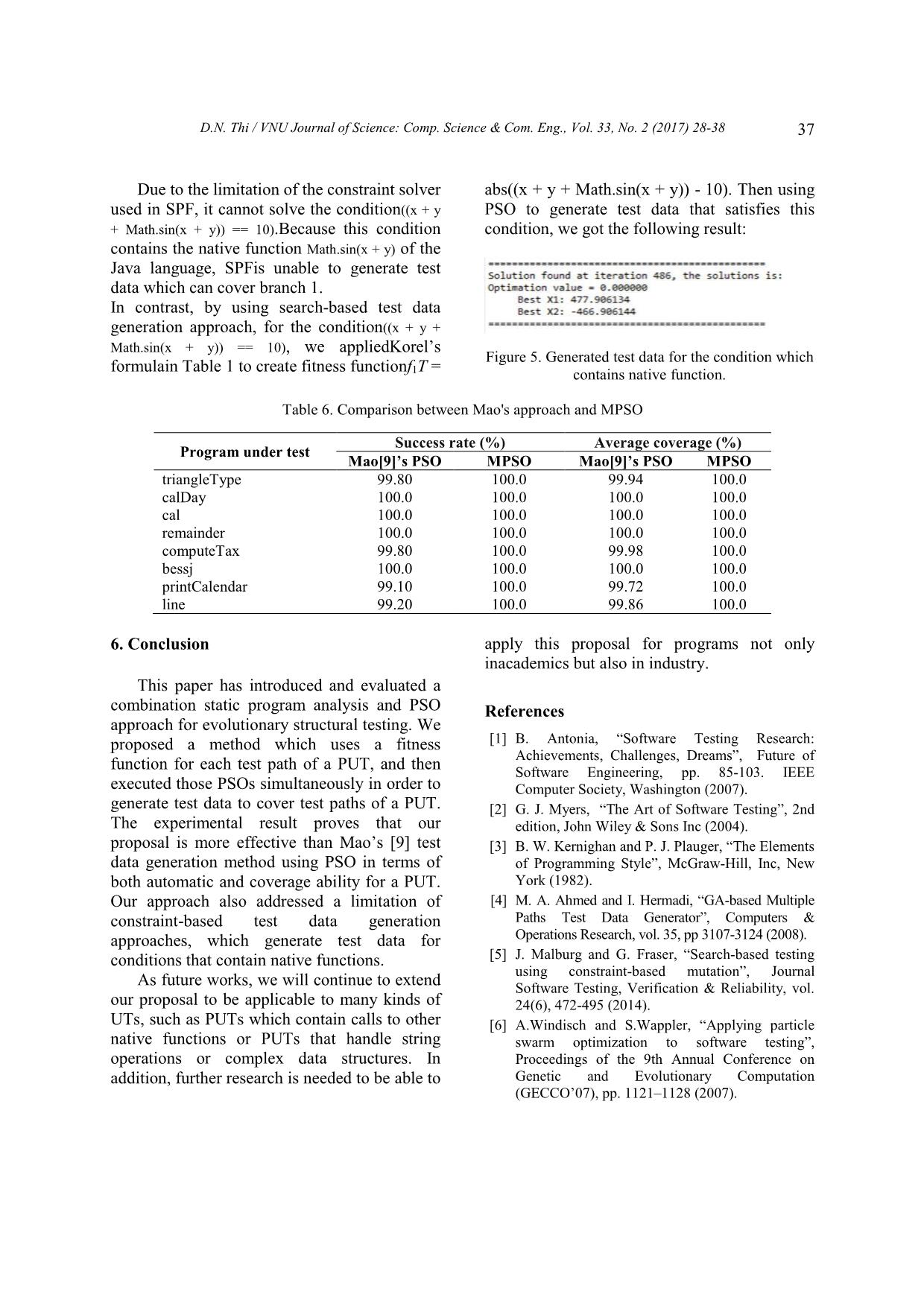

Due to the limitation of the constraint solver abs((x + y + Math.sin(x + y)) - 10). Then using

used in SPF, it cannot solve the condition((x + y PSO to generate test data that satisfies this

+ Math.sin(x + y)) == 10).Because this condition condition, we got the following result:

contains the native function Math.sin(x + y) of the

Java language, SPFis unable to generate test

data which can cover branch 1.

In contrast, by using search-based test data

generation approach, for the condition((x + y +

Math.sin(x + y)) == 10), we appliedKorel’s

Figure 5. Generated test data for the condition which

formulain Table 1 to create fitness functionf1T = contains native function.

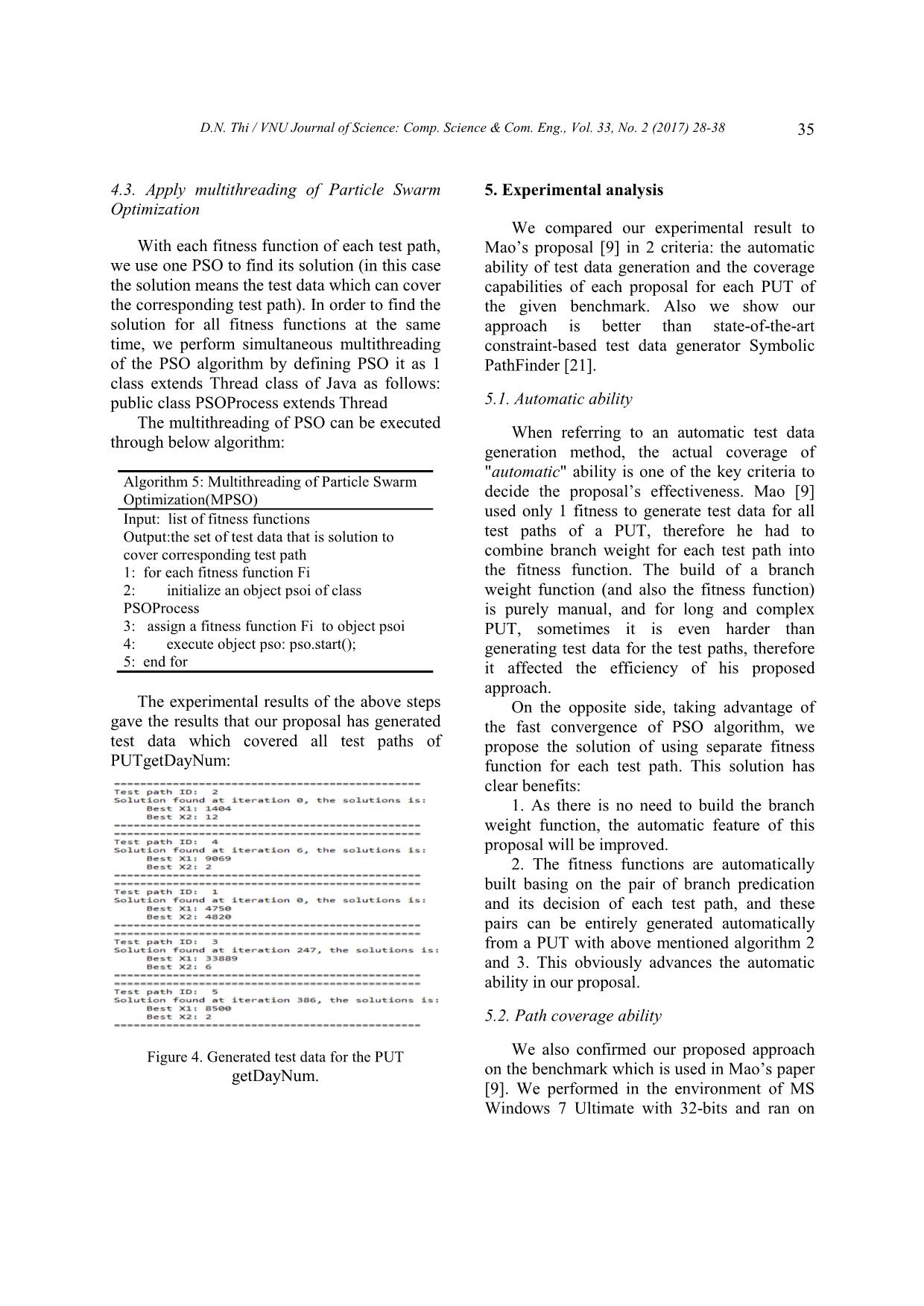

Table 6. Comparison between Mao's approach and MPSO

Success rate (%) Average coverage (%)

Program under test

Mao[9]’s PSO MPSO Mao[9]’s PSO MPSO

triangleType 99.80 100.0 99.94 100.0

calDay 100.0 100.0 100.0 100.0

cal 100.0 100.0 100.0 100.0

remainder 100.0 100.0 100.0 100.0

computeTax 99.80 100.0 99.98 100.0

bessj 100.0 100.0 100.0 100.0

printCalendar 99.10 100.0 99.72 100.0

line 99.20 100.0 99.86 100.0

6. Conclusion apply this proposal for programs not only

inacademics but also in industry.

This paper has introduced and evaluated a

combination static program analysis and PSO References

approach for evolutionary structural testing. We

proposed a method which uses a fitness [1] B. Antonia, “Software Testing Research:

Achievements, Challenges, Dreams”, Future of

function for each test path of a PUT, and then Software Engineering, pp. 85-103. IEEE

executed those PSOs simultaneously in order to Computer Society, Washington (2007).

generate test data to cover test paths of a PUT. [2] G. J. Myers, “The Art of Software Testing”, 2nd

The experimental result proves that our edition, John Wiley & Sons Inc (2004).

proposal is more effective than Mao’s [9] test [3] B. W. Kernighan and P. J. Plauger, “The Elements

data generation method using PSO in terms of of Programming Style”, McGraw-Hill, Inc, New

both automatic and coverage ability for a PUT. York (1982).

Our approach also addressed a limitation of [4] M. A. Ahmed and I. Hermadi, “GA-based Multiple

constraint-based test data generation Paths Test Data Generator”, Computers &

approaches, which generate test data for Operations Research, vol. 35, pp 3107-3124 (2008).

conditions that contain native functions. [5] J. Malburg and G. Fraser, “Search-based testing

using constraint-based mutation”, Journal

As future works, we will continue to extend Software Testing, Verification & Reliability, vol.

our proposal to be applicable to many kinds of 24(6), 472-495 (2014).

UTs, such as PUTs which contain calls to other [6] A.Windisch and S.Wappler, “Applying particle

native functions or PUTs that handle string swarm optimization to software testing”,

operations or complex data structures. In Proceedings of the 9th Annual Conference on

addition, further research is needed to be able to Genetic and Evolutionary Computation

(GECCO’07), pp. 1121–1128 (2007).

38 D.N. Thi / VNU Journal of Science: Comp. Science & Com. Eng., Vol. 33, No. 2 (2017) 28-38

[7] Yanli Zhang, Aiguo Li, "Automatic Generating generation for data flow coverage with dominance

All-Path Test Data of a Program Based on PSO", concepts”, Int. J. Adv. Sci. Technol. 37 (2011).

vol. 04, pp. 189-193, 2009, [20] E. J. Weyuker, “The applicability of program

doi:10.1109/WCSE.2009.98. schema results to programs”, International Journal

[8] Ya-Hui Jia, Wei-Neng Chen, Jun Zhang, Jing-Jing of Parallel Programming, vol. 8, 387-403 (1979).

Li, “Generating Software Test Data by Particle [21] C. S. Pasareanu, W. Visser, D. Bushnell, J.

Swarm Optimization”, Proceedings of 10th Geldenhuys, P. Mehlitz, N. Rungta, “Symbolic

International Conference, SEAL 2014, Dunedin, PathFinder: Integrating Symbolic Execution with

New Zealand, December 15-18, 2014. Model Checking for Java Bytecode Analysis”,

[9] C.Mao, “Generating Test Data for Software”, Arabian Automated Software Engineering Journal,

Journal for Science and Engineering Structural Testing Springer (2013).

Based on Particle Swarm Optimization, vol 39, issue 6, [22] G. M. Michael, M. Schatz, “Generating software

pp 4593–4607 (June 2014). test data by evolution”, IEEE Transactions on

[10] B. Korel, “Automated software test data Software Engineering, vol. 27, 1085-1110 (2001).

generation”, IEEE Transactions on Software [23] J. Wegener, A. Baresel, and H. Sthamer,

Engineering, vol. 16, 870-879 (1990). “Evolutionary test environment for automatic

[11] J.Kennedy, and R.Eberhart, “Particle swam structural testing”, Information and Software

optimization”, Proceedings of IEEE International Technology, vol. 43, 841-854 (2001).

Conference on Neural Networks (ICNN’95), pp. [24] J. Wegener, B. Kerstin, and P. Hartmut,

1942–1948 (1995) “Automatic Test Data Generation For Structural

[12] Robert Gold, “Control flow graph and code Testing Of Embedded Software Systems By

coverage”, Int. J. Appl. Math. Comput. Sci., Vol. Evolutionary Testing”, Genetic and Evolutionary

20, No. 4, 2010, pp. 739-749 Computation Conference. Morgan Kaufmann

[13] Bryan F. Jones, Harmen-Hinrich Sthamer, and Publishers Inc. (2002).

D.E. Eyres, “Automatic structural testing using [25] S. Levin and A. Yehudai, ” Evolutionary Testing:

genetic algorithms”, Software Engineering, A Case Study”, Hardware and Software,

11(5):299–306, September 1996. Verification and Testing, 155-165 (2007).

[14] M.Harman, P.McMinn, “A theoretical and [26] S. Xanthakis, C. Ellis, C. Skourlas, A. Le Gall, S.

empirical study of search-based testing: local, Katsikas, and K. Karapoulios, “Application of

global, and hybrid search”, IEEE Trans. Softw. genetic algorithms to software testing

Eng. 36(2), 226–247 (2010) (Application des algorithmes genetiques au test

[15] Agrawal K., Srivastava G, “Towards software test des logiciels)”, 5th International Conference on

data generation using discrete quantum particle Software Engineering and its Applications, pp.

swarm optimization”, ISEC, Mysore, India 625--636. Toulouse, France (1992).

(February 2010). [27] W. Joachim, Andr, Baresel, and S. Harmen,

[16] S. Tiwari, K.K. Mishra, A.K. Misra, “Test case “Suitability of Evolutionary Algorithms for

generation for modified code using a variant of Evolutionary Testing”, 26th International

particle swarm optimization (PSO) Algorithm Computer Software and Applications Conference

[C]”, Proceedings of the Tenth IEEE International on Prolonging Software Life: Development and

Conference on Information Technology: New Redevelopment. IEEE Computer Society,

Generations (ITNG), 2013, pp. 363–368. Washington (2002).

[17] X.M. Zhu, X.F. Yang, “Software test data [28] Duc-Anh Nguyen, Pham Ngoc Hung, Viet-Ha

generation automatically based on improved Nguyen, "A method for automated unit testing of

adaptive particle swarm optimizer”, Proceedings C programs", Proceedings of 2016 3rd National

of the International Conference on Computational Foundation for Science and Technology

and Information Sciences, 2010, pp. 1300–1303. Development Conference on Information and

[18] S. Dahiya, J. Chhabra, S. Kumar., “PSO based Computer Science (NICS), 2016, pp. 17-22k.

pseudo dynamic method for automated test case

generation using interpreter”, Proceedings of the

Second International Conference on Advances in

Swarm intelligence, 2011, pp. 147–156.

[19] S. Singla, D. Kumar, H.M. Rai, P. Singla, “A

hybrid PSO approach to automate test data

File đính kèm:

generating_test_data_for_software_structural_testing_using_p.pdf

generating_test_data_for_software_structural_testing_using_p.pdf