Educational data clustering in a weighted feature space using kernel K-means and transfer learning algorithms

Educational data clustering on the students’ data collected in a program can find groups of students who share similar characteristics in their behaviors and study performance. For some programs, it is not trivial to prepare enough data for the clustering task. Such a data shortage might reduce the effectiveness of the clustering process, so that the true clusters cannot be discovered appropriately. On the other hand, other programs have been well examined, with much larger data sets available for the task. It is therefore natural to ask whether the larger data sets from these source programs can be exploited to enhance the educational data clustering task on the smaller data sets of the target program. Using transfer learning techniques, our paper defines a transfer-learning-based clustering method with the kernel k-means and spectral feature alignment algorithms as a solution to the educational data clustering task in such a context. Moreover, our method is optimized within a weighted feature space, so that the contribution of the larger source data sets to the clustering process can be determined automatically. This ability is the novelty of our proposed transfer-learning-based clustering solution compared to those in existing works. Experimental results on several real data sets show that our method consistently outperforms other methods based on various approaches, under both external and internal validation.
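The idea of clustering in a weighted feature space can be illustrated with the following minimal Python/NumPy sketch. It runs standard kernel k-means with a Gaussian kernel in which each feature j (an original attribute or, by assumption, an SFA-derived feature) contributes to the squared distance in proportion to a weight w_j. This is a simplified, hypothetical illustration rather than the authors' Weighted kernel k-means (SFA): the weights here are fixed by hand, whereas the paper learns them during clustering. The kernel form exp(-Σ_j w_j (x_j - y_j)² / (2σ²)) and the function names are assumptions.

```python
import numpy as np


def weighted_gaussian_kernel(X, w, sigma):
    """Gaussian kernel on feature-weighted data (hypothetical form, nonnegative weights w):
    k(x, y) = exp(-sum_j w_j (x_j - y_j)^2 / (2 sigma^2))."""
    X = np.asarray(X, dtype=float)
    # Scaling feature j by sqrt(w_j) weights its squared difference by w_j.
    Xw = X * np.sqrt(np.asarray(w, dtype=float))
    sq = (Xw ** 2).sum(axis=1)
    # Pairwise squared distances; clamp tiny negative values caused by rounding.
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * Xw @ Xw.T, 0.0)
    return np.exp(-d2 / (2.0 * sigma ** 2))


def kernel_kmeans(K, labels, k, max_iter=100):
    """Standard kernel k-means on a precomputed kernel matrix, starting from given labels."""
    n = K.shape[0]
    labels = np.asarray(labels).copy()
    diag = np.diag(K)
    for _ in range(max_iter):
        dist = np.full((n, k), np.inf)
        for c in range(k):
            members = labels == c
            m = members.sum()
            if m == 0:
                continue  # an empty cluster attracts no points
            # ||phi(x) - mu_c||^2 = K(x,x) - (2/m) sum_{j in c} K(x,j) + (1/m^2) sum_{i,j in c} K(i,j)
            dist[:, c] = (diag
                          - 2.0 * K[:, members].sum(axis=1) / m
                          + K[np.ix_(members, members)].sum() / m ** 2)
        new_labels = dist.argmin(axis=1)
        if np.array_equal(new_labels, labels):
            break  # assignments are stable
        labels = new_labels
    return labels
```

For example, for the four points [0, 0, 5], [0.1, 0, -5], [3, 3, 5], and [3.1, 3, -5], whose third feature is noise, the weights w = [1, 1, 0] with σ = 1 remove that feature from the distances, and kernel k-means started from labels [0, 0, 0, 1] settles on the grouping [0, 0, 1, 1] given by the first two features.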


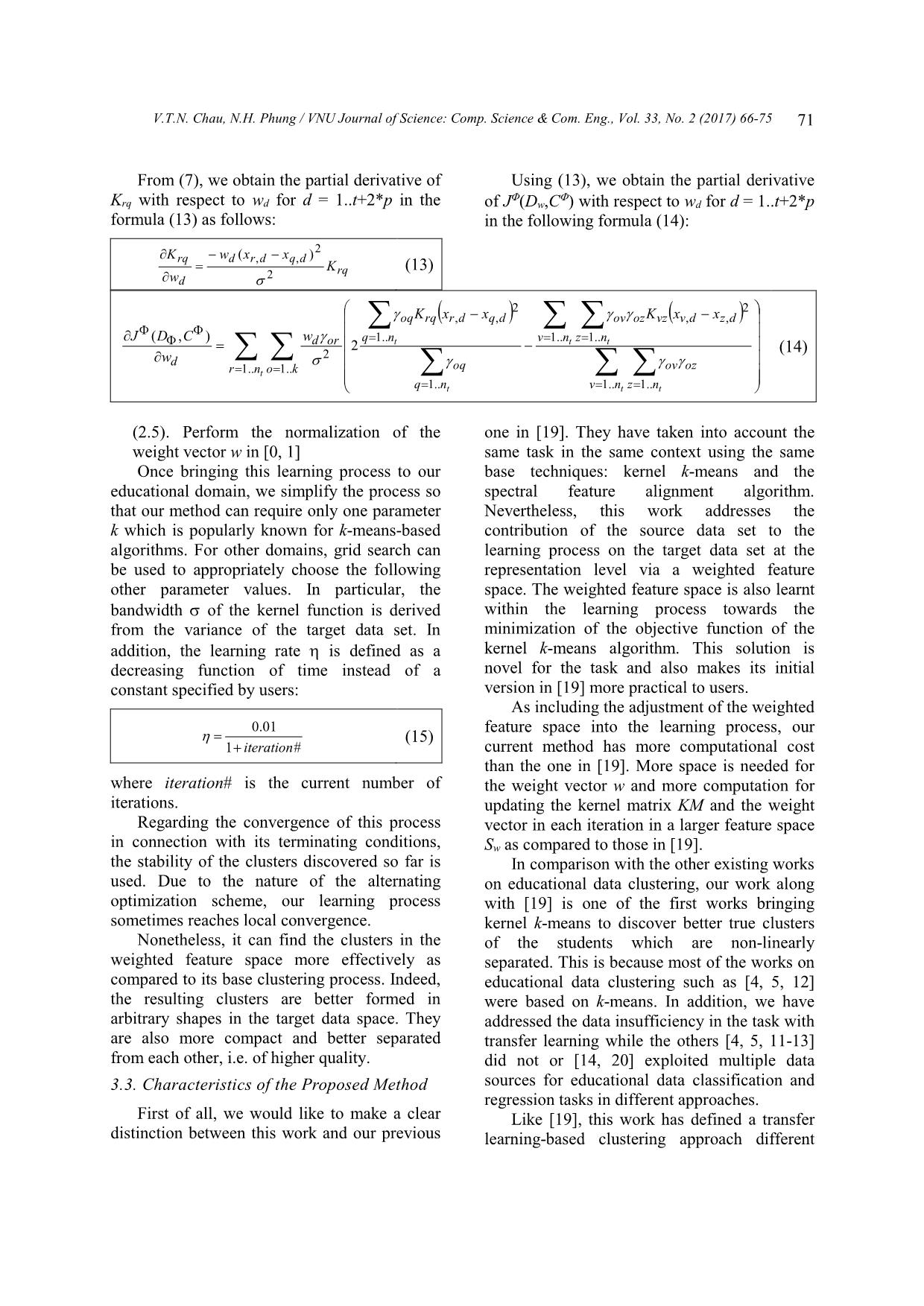

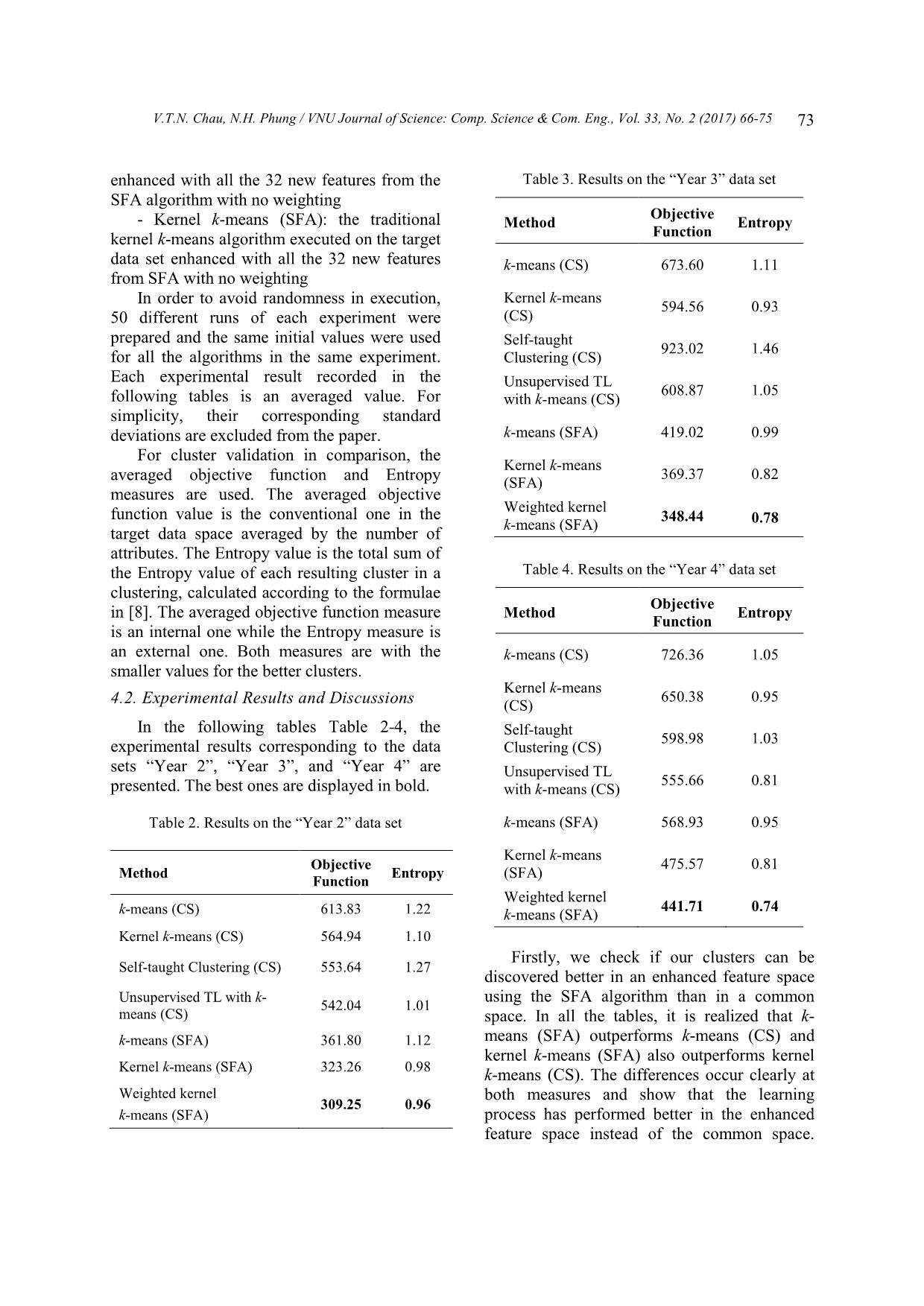

V.T.N. Chau, N.H. Phung / VNU Journal of Science: Comp. Science & Com. Eng., Vol. 33, No. 2 (2017) 66-75

[…] automatically determined in our current work, while this issue was not examined in [8]. More recently, another unsupervised transfer learning algorithm was proposed in [15] for short text clustering. This algorithm also works at the instance level: it is executed on both the target and source data sets and then filters the instances from the source data set to conclude the final clusters in the target data set. Both algorithms in [8, 15] assume that the same data space is used in the source and target domains. In contrast, our work does not require such an assumption.

We believe that our proposed method has its own merit in discovering the inherent clusters of similar students based on study performance. It can be regarded as a novel solution to the educational data clustering task.

4. Empirical evaluation

In subsection 3.3, we discussed the proposed method from a theoretical perspective. In this section, we evaluate it from an empirical perspective.

4.1. Data and experiment settings

The data used in our experiments come from the information on the students at the Faculty of Computer Science and Engineering, Ho Chi Minh City University of Technology, Vietnam [1], where the academic credit system is in use. Two educational programs establish the context of the task: Computer Engineering and Computer Science. Computer Engineering is our target program and Computer Science is our source program. Each […] students from year 2 to year 4, corresponding to the “Year 2”, “Year 3”, and “Year 4” data sets for each program. Their details are given in Table 1.

Table 1. Details of the programs

| Program | Student# | Subject# | Group# |
|---|---|---|---|
| Computer Engineering (Target, A) | 186 | 43 | 3 |
| Computer Science (Source, B) | 1,317 | 43 | 3 |

For the parameter values in our method, we set the number k of desired clusters to 3, and the sigmas of the spectral feature alignment and kernel k-means algorithms to 0.3*variance, where variance is the total sum of the variances of all attributes in the target data. The learning rate is set according to (15). For the parameters of the methods in comparison, the default settings from their works are used.

For comparison with our Weighted kernel k-means (SFA) method, we consider the following methods:
- k-means (CS): the traditional k-means algorithm executed in the common space (CS) of both target and source data sets
- Kernel k-means (CS): the traditional kernel k-means algorithm executed in the common space of both data sets
- Self-taught Clustering (CS): the self-taught clustering algorithm in [8] executed in the common space of both data sets
- Unsupervised TL with k-means (CS): the unsupervised transfer learning algorithm in [15] executed with k-means as the base algorithm in the common space
- k-means (SFA): the traditional k-means algorithm executed on the target data set enhanced with all the 32 new features from the SFA algorithm, with no weighting
- Kernel k-means (SFA): the traditional kernel k-means algorithm executed on the target data set enhanced with all the 32 new features from SFA, with no weighting

To avoid randomness in execution, 50 different runs of each experiment were prepared, and the same initial values were used for all the algorithms in the same experiment. Each experimental result recorded in the following tables is an averaged value. For simplicity, the corresponding standard deviations are omitted from the paper.

For cluster validation, the averaged objective function and Entropy measures are used. The averaged objective function value is the conventional objective function in the target data space, averaged by the number of attributes. The Entropy value is the total sum of the Entropy values of the resulting clusters in a clustering, calculated according to the formulae in [8]. The averaged objective function is an internal measure, while Entropy is an external one. For both measures, smaller values indicate better clusters.

4.2. Experimental Results and Discussions

Tables 2-4 present the experimental results on the “Year 2”, “Year 3”, and “Year 4” data sets, respectively. The best values are displayed in bold.

Table 2. Results on the “Year 2” data set

| Method | Objective Function | Entropy |
|---|---|---|
| k-means (CS) | 613.83 | 1.22 |
| Kernel k-means (CS) | 564.94 | 1.10 |
| Self-taught Clustering (CS) | 553.64 | 1.27 |
| Unsupervised TL with k-means (CS) | 542.04 | 1.01 |
| k-means (SFA) | 361.80 | 1.12 |
| Kernel k-means (SFA) | 323.26 | 0.98 |
| Weighted kernel k-means (SFA) | **309.25** | **0.96** |

Table 3. Results on the “Year 3” data set

| Method | Objective Function | Entropy |
|---|---|---|
| k-means (CS) | 673.60 | 1.11 |
| Kernel k-means (CS) | 594.56 | 0.93 |
| Self-taught Clustering (CS) | 923.02 | 1.46 |
| Unsupervised TL with k-means (CS) | 608.87 | 1.05 |
| k-means (SFA) | 419.02 | 0.99 |
| Kernel k-means (SFA) | 369.37 | 0.82 |
| Weighted kernel k-means (SFA) | **348.44** | **0.78** |

Table 4. Results on the “Year 4” data set

| Method | Objective Function | Entropy |
|---|---|---|
| k-means (CS) | 726.36 | 1.05 |
| Kernel k-means (CS) | 650.38 | 0.95 |
| Self-taught Clustering (CS) | 598.98 | 1.03 |
| Unsupervised TL with k-means (CS) | 555.66 | 0.81 |
| k-means (SFA) | 568.93 | 0.95 |
| Kernel k-means (SFA) | 475.57 | 0.81 |
| Weighted kernel k-means (SFA) | **441.71** | **0.74** |

Firstly, we check whether our clusters can be discovered better in an enhanced feature space built with the SFA algorithm than in a common space. In all the tables, k-means (SFA) outperforms k-means (CS), and kernel k-means (SFA) also outperforms kernel k-means (CS). The differences are clear on both measures and show that the learning process performs better in the enhanced feature space than in the common space.
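The two validation measures and the kernel-width setting from Section 4.1 can be made concrete with a short Python/NumPy sketch. It illustrates those definitions under stated assumptions and is not the authors' Matlab code: the per-cluster Entropy is taken to be the Shannon entropy (natural log) of the reference group labels inside the cluster, summed over clusters as the text describes, although the exact formulae in [8] may differ (for example, by weighting clusters by size); the population variance (ddof=0) and all function names are likewise assumptions.

```python
import numpy as np


def sigma_from_total_variance(X, factor=0.3):
    """Kernel width = factor * (sum of per-attribute population variances of X)."""
    return factor * float(np.var(np.asarray(X, dtype=float), axis=0).sum())


def averaged_objective(X, labels):
    """Sum of squared distances to cluster means, divided by the number of attributes."""
    X = np.asarray(X, dtype=float)
    labels = np.asarray(labels)
    total = 0.0
    for c in np.unique(labels):
        members = X[labels == c]
        total += float(((members - members.mean(axis=0)) ** 2).sum())
    return total / X.shape[1]


def clustering_entropy(labels, groups):
    """Sum over clusters of the Shannon entropy (natural log) of the reference groups in each cluster."""
    labels = np.asarray(labels)
    groups = np.asarray(groups)
    total = 0.0
    for c in np.unique(labels):
        # Proportions of each reference group among the members of cluster c.
        _, counts = np.unique(groups[labels == c], return_counts=True)
        p = counts / counts.sum()
        total += float(-(p * np.log(p)).sum())
    return total
```

For instance, on a toy target set X = [[0, 0], [0, 2], [10, 10], [10, 12]] with labels [0, 0, 1, 1], the averaged objective is (2 + 2) / 2 = 2.0, and a clustering in which every cluster contains a single reference group has an Entropy of 0. As in the paper, smaller values of both measures indicate better clusters.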
The advantage of the enhanced feature space over the common space is understandable: the enhanced space contains more informative details, and thus a transfer learning technique is valuable for the data clustering task on small target data sets like those in the educational domain.

Secondly, we check whether our transfer learning approach using the SFA algorithm is better than the other transfer learning approaches in [8, 15]. On the “Year 2” and “Year 3” data sets, the three methods of our approach, k-means (SFA), kernel k-means (SFA), and Weighted kernel k-means (SFA), generate better clusters than both approaches in [8, 15], with one exception: on “Year 2”, the Entropy of k-means (SFA) (1.12) is higher than that of Unsupervised TL with k-means (CS) (1.01). On the “Year 4” data set, our approach is only better than Self-taught Clustering (CS) in [8], while being comparable to Unsupervised TL with k-means (CS) in [15]. This is because the “Year 4” data set is much denser, so the enhancement is only slightly effective. By contrast, the “Year 2” and “Year 3” data sets are sparser, with more data insufficiency, so the enhancement is more effective. Nevertheless, our method, Weighted kernel k-means (SFA), is always better than the others, with the smallest values on both measures. This shows how appropriately and effectively our method has been designed.

Thirdly, we highlight the weighted feature space in our method, as compared to both the common space and the traditionally fixed enhanced space. In all the cases, our method discovers the clusters in a weighted feature space better than the other methods do in other spaces. A weighted feature space can be adjusted along with the learning process and thus helps the learning process better examine the discrimination of the instances in the space. This is reasonable because each feature, from either the original space or the enhanced space, matters only to the extent that the learning process includes it when computing the distances between instances. The importance of each feature is denoted by a weight learnt in our learning process. This property allows better clusters of arbitrary shapes to be formed in a weighted feature space rather than in a common or a traditionally fixed enhanced feature space.

In short, our proposed method, Weighted kernel k-means (SFA), produces the smallest values for both the objective function and Entropy measures. These values indicate better clusters with more compactness and non-linear separation. Hence, the groups of the most similar students behind these clusters can be derived to support academic affairs.

5. Conclusion

In this paper, a transfer-learning-based kernel k-means method, named Weighted kernel k-means (SFA), is proposed to discover clusters of similar students via their study performance in a weighted feature space. This method is a novel solution to the educational data clustering task in a context where there is a data shortage in the target program while more data exist in other source programs. Our method exploits the source data sets at the representation level to learn a weighted feature space in which the clusters can be discovered more effectively. The weighted feature space is formed automatically as part of the clustering process of our method, reflecting the extent of the contribution of the source data sets to the clustering process on the target one. Analyzed from a theoretical perspective, our method is promising for finding better clusters. Evaluated from an empirical perspective, our method outperforms the others with different approaches on three real educational data sets along the study path of regular students: smaller, and thus better, values for the objective function and Entropy measures have been recorded for our method. These experimental results show that our method is consistently more effective than the other methods.

Making our method parameter-free by automatically deriving the number of desired clusters inherent in a data set is planned as future work. Furthermore, we will make use of the resulting clusters in an educational decision support model based on case-based reasoning. This combination can provide a more practical and effective decision support model for our educational decision support system. Besides, more analysis of the groups of students with similar study performance will be done to create study profiles of our students over time, so that their study trends can be monitored towards graduation.

Acknowledgements

This research is funded by Vietnam National University Ho Chi Minh City, Vietnam, under grant number C2016-20-16. Many sincere thanks also go to Mr. Nguyen Duy Hoang, M.Eng., for his support with the transfer learning algorithms in Matlab.

References

[1] AAO, Academic Affairs Office, www.aao.hcmut.edu.vn, accessed on 01/05/2017.

[2] M. Belkin and P. Niyogi, “Laplacian eigenmaps for dimensionality reduction and data representation,” Neural Computation, vol. 15, no. 6, pp. 1373-1396, 2003.

[3] J. Blitzer, R. McDonald, and F. Pereira, “Domain adaptation with structural correspondence learning,” Proc. The 2006 Conf. on Empirical Methods in Natural Language Processing, pp. 120-128, 2006.

[4] V. P. Bresfelean, M. Bresfelean, and N. Ghisoiu, “Determining students’ academic failure profile founded on data mining methods,” Proc. The ITI 2008 30th Int. Conf. on Information Technology Interfaces, pp. 317-322, 2008.

[5] R. Campagni, D. Merlini, and M. C. Verri, “Finding regularities in courses evaluation with k-means clustering,” Proc. The 6th Int. Conf. on Computer Supported Education, pp. 26-33, 2014.

[6] W-C. Chang, Y. Wu, H. Liu, and Y. Yang, “Cross-domain kernel induction for transfer learning,” AAAI, pp. 1-7, 2017.

[7] F.R.K. Chung, “Spectral graph theory,” CBMS Regional Conf. Series in Mathematics, No. 92, American Mathematical Society, 1997.

[8] W. Dai, Q. Yang, G-R. Xue, and Y. Yu, “Self-taught clustering,” Proc. The 25th Int. Conf. on Machine Learning, pp. 1-8, 2008.

[9] L. Duan, D. Xu, and I. W. Tsang, “Learning with augmented features for heterogeneous domain adaptation,” Proc. The 29th Int. Conf. on Machine Learning, pp. 1-8, 2012.

[10] K. D. Feuz and D. J. Cook, “Transfer learning across feature-rich heterogeneous feature spaces via feature-space remapping (FSR),” ACM Trans. Intell. Syst. Technol., vol. 6, pp. 1-27, March 2015.

[11] Y. Jayabal and C. Ramanathan, “Clustering students based on student’s performance – a Partial Least Squares Path Modeling (PLS-PM) study,” Proc. MLDM, LNAI 8556, pp. 393-407, 2014.

[12] M. Jovanovic, M. Vukicevic, M. Milovanovic, and M. Minovic, “Using data mining on student behavior and cognitive style data for improving e-learning systems: a case study,” Int. Journal of Computational Intelligence Systems, vol. 5, pp. 597-610, 2012.

[13] D. Kerr and G. K.W.K. Chung, “Identifying key features of student performance in educational video games and simulations through cluster analysis,” Journal of Educational Data Mining, vol. 4, no. 1, pp. 144-182, Oct. 2012.

[14] I. Koprinska, J. Stretton, and K. Yacef, “Predicting student performance from multiple data sources,” Proc. AIED, pp. 1-4, 2015.

[15] T. Martín-Wanton, J. Gonzalo, and E. Amigó, “An unsupervised transfer learning approach to discover topics for online reputation management,” Proc. CIKM, pp. 1565-1568, 2013.

[16] A.Y. Ng, M. I. Jordan, and Y. Weiss, “On spectral clustering: analysis and an algorithm,” Advances in Neural Information Processing Systems, vol. 14, pp. 1-8, 2002.

[17] S. J. Pan, X. Ni, J-T. Sun, Q. Yang, and Z. Chen, “Cross-domain sentiment classification via spectral feature alignment,” Proc. WWW 2010, pp. 1-10, 2010.

[18] G. Tzortzis and A. Likas, “The global kernel k-means clustering algorithm,” Proc. The 2008 Int. Joint Conf. on Neural Networks, pp. 1978-1985, 2008.

[19] C. T.N. Vo and P. H. Nguyen, “A two-phase educational data clustering method based on transfer learning and kernel k-means,” Journal of Science and Technology on Information and Communications, pp. 1-14, 2017 (accepted).

[20] L. Vo, C. Schatten, C. Mazziotti, and L. Schmidt-Thieme, “A transfer learning approach for applying matrix factorization to small ITS datasets,” Proc. The 8th Int. Conf. on Educational Data Mining, pp. 372-375, 2015.

[21] G. Zhou, T. He, W. Wu, and X. T. Hu, “Linking heterogeneous input features with pivots for domain adaptation,” Proc. The 24th Int. Joint Conf. on Artificial Intelligence, pp. 1419-1425, 2015.
