Pseudorandom sequences classification algorithm

The number of information leaks caused by insiders is steadily increasing. One channel through which information can leak is the transmission of data in encrypted or compressed form, because modern data leak prevention (DLP) systems cannot detect signatures or content in this type of data. This paper presents an algorithm for classifying sequences produced by encryption and compression algorithms. The feature space is an array of the frequencies of occurrence of binary subsequences of length N bits. File headers and other contextual information are not used to build the feature space. The presented algorithm classifies the sequences with an accuracy of 0.98. It can be applied in DLP systems to prevent information leakage when information is transmitted in encrypted or compressed form.


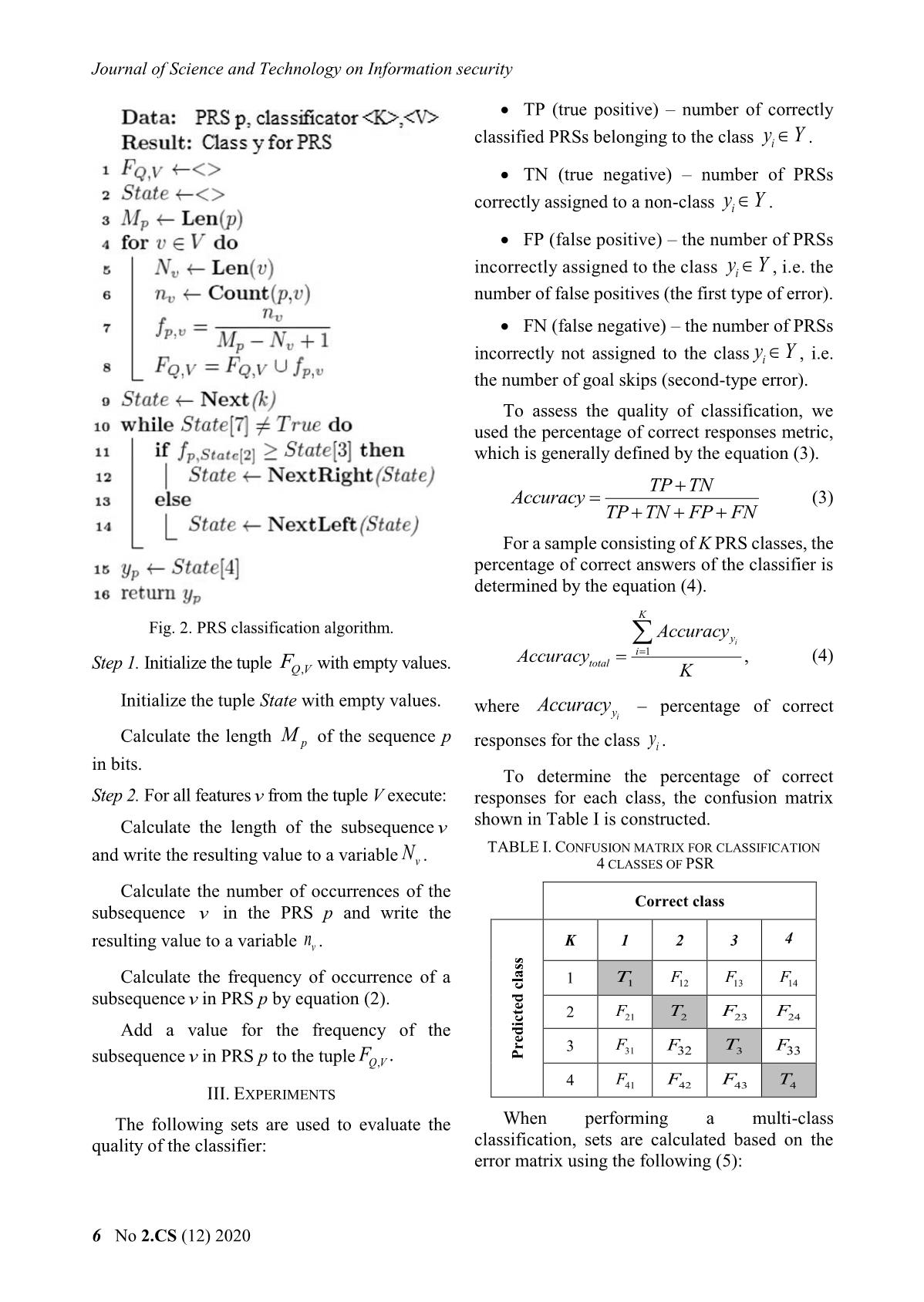

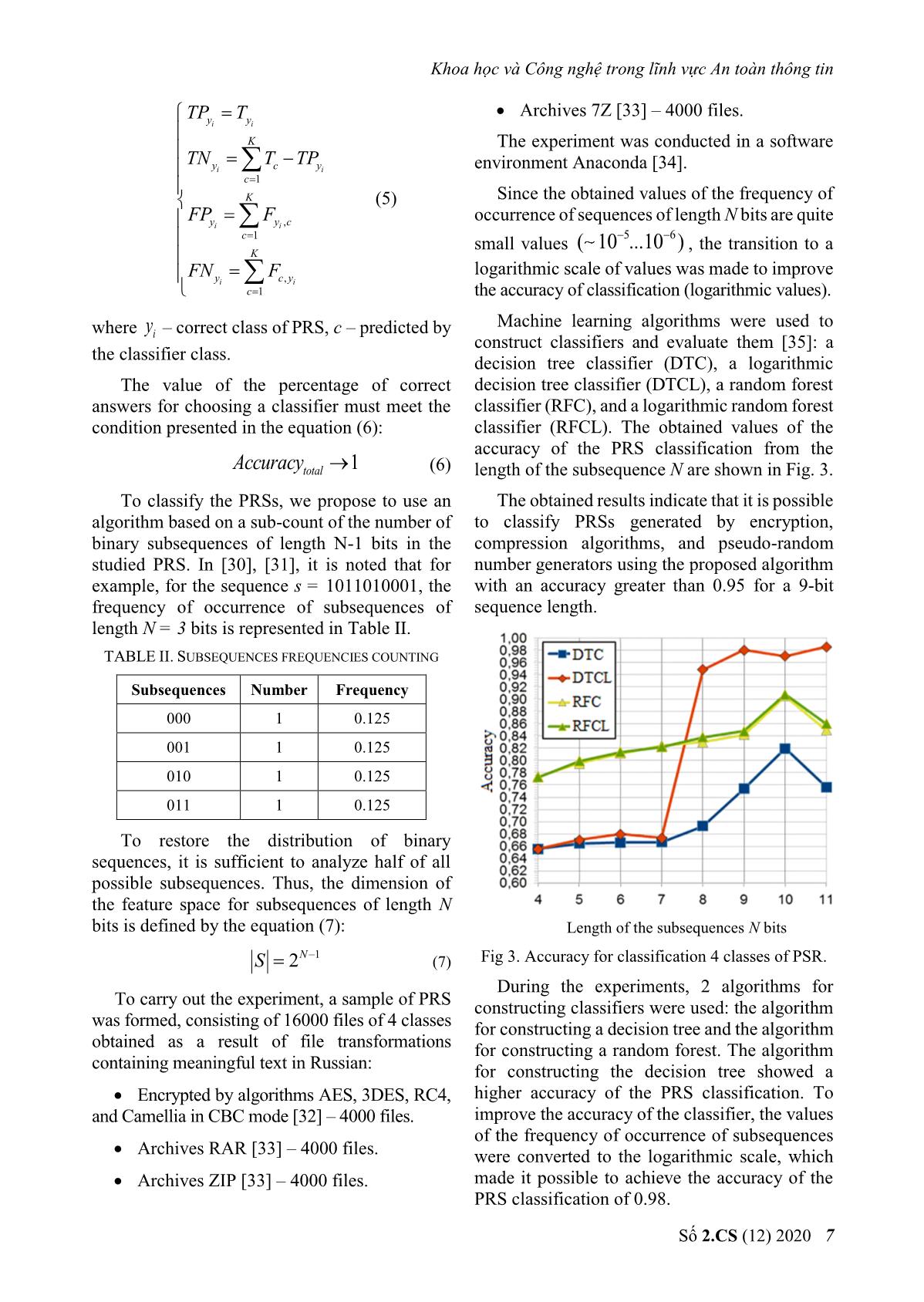

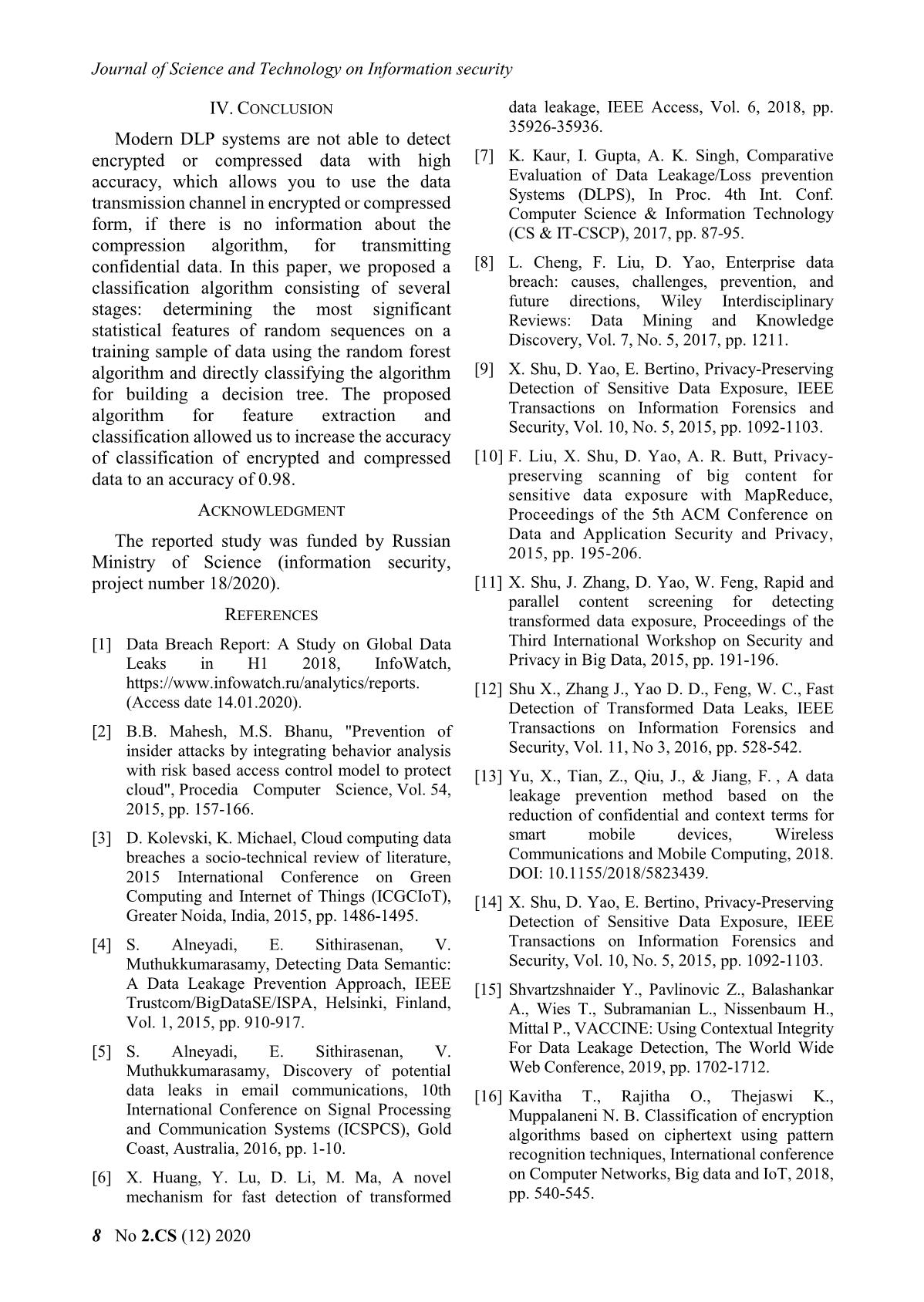

No. 2.CS (12) 2020, Journal of Science and Technology on Information Security

… is formed by constructing all possible binary subsequences of a given bit length. The algorithm for constructing the feature space is shown in Fig. 1. The resulting tuple of the frequencies of occurrence of these subsequences forms the feature space used to train and construct the classifier.

Fig. 1. Feature space building algorithm.

B. PRS Classification

The input data for PRS classification are the PRS $p$, the classifier $K$, and the set of features $V$. The PRS classification algorithm is shown in Fig. 2.

Fig. 2. PRS classification algorithm.

Step 1. Initialize the tuple $FQV_p$ with empty values. Initialize the tuple $State_K$ with empty values. Calculate the length $M_p$ of the sequence $p$ in bits.

Step 2. For every feature $v$ in the tuple $V$:
- calculate the length of the subsequence $v$ and store it in the variable $N_v$;
- count the occurrences of the subsequence $v$ in the PRS $p$ and store the count in the variable $n_v$;
- calculate the frequency of occurrence of the subsequence $v$ in the PRS $p$ by equation (2);
- append the frequency of the subsequence $v$ in the PRS $p$ to the tuple $FQV_p$.

III. EXPERIMENTS

The following quantities are used to evaluate the quality of the classifier:
- TP (true positive): the number of PRSs correctly assigned to the class $y_i \in Y$;
- TN (true negative): the number of PRSs correctly assigned to a class other than $y_i \in Y$;
- FP (false positive): the number of PRSs incorrectly assigned to the class $y_i \in Y$, i.e., false alarms (type I errors);
- FN (false negative): the number of PRSs incorrectly not assigned to the class $y_i \in Y$, i.e., missed detections (type II errors).

To assess classification quality, we use the share of correct answers (accuracy), defined in general by equation (3):

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \qquad (3)$$

For a sample consisting of $K$ PRS classes, the share of correct answers of the classifier is given by equation (4):

$$\mathrm{Accuracy}_{total} = \frac{\sum_{i=1}^{K} \mathrm{Accuracy}_{y_i}}{K}, \qquad (4)$$

where $\mathrm{Accuracy}_{y_i}$ is the share of correct answers for the class $y_i$. To determine the share of correct answers for each class, the confusion matrix shown in Table I is constructed.

TABLE I. CONFUSION MATRIX FOR CLASSIFICATION OF 4 CLASSES OF PRS

| Predicted class / Correct class | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| 1 | $T_1$ | $F_{12}$ | $F_{13}$ | $F_{14}$ |
| 2 | $F_{21}$ | $T_2$ | $F_{23}$ | $F_{24}$ |
| 3 | $F_{31}$ | $F_{32}$ | $T_3$ | $F_{34}$ |
| 4 | $F_{41}$ | $F_{42}$ | $F_{43}$ | $T_4$ |

In multi-class classification, these quantities are calculated from the confusion matrix using equations (5):

$$
\begin{aligned}
TP_{y_i} &= T_{y_i},\\
TN_{y_i} &= \sum_{c=1}^{K} T_c - TP_{y_i},\\
FP_{y_i} &= \sum_{c=1}^{K} F_{y_i,c},\\
FN_{y_i} &= \sum_{c=1}^{K} F_{c,y_i},
\end{aligned}
\qquad (5)
$$

where $y_i$ is the correct class of the PRS and $c$ is the class predicted by the classifier.

When choosing a classifier, the share of correct answers must satisfy condition (6):

$$\mathrm{Accuracy}_{total} \to 1 \qquad (6)$$

To classify PRSs, we propose an algorithm based on counting the binary subsequences of length $N-1$ bits in the PRS under study. As noted in [30], [31], for the sequence s = 1011010001, for example, the frequencies of occurrence of subsequences of length N = 3 bits are as given in Table II.

TABLE II. SUBSEQUENCE FREQUENCY COUNTING

| Subsequence | Count | Frequency |
|---|---|---|
| 000 | 1 | 0.125 |
| 001 | 1 | 0.125 |
| 010 | 1 | 0.125 |
| 011 | 1 | 0.125 |

To restore the distribution of binary sequences, it is sufficient to analyze half of all possible subsequences. Thus, the dimension of the feature space for subsequences of length N bits is given by equation (7):

$$S = 2^{N-1} \qquad (7)$$

To carry out the experiment, a sample of PRSs was formed, consisting of 16000 files of 4 classes obtained by transforming files containing meaningful text in Russian:
- encrypted with the AES, 3DES, RC4, and Camellia algorithms in CBC mode [32]: 4000 files;
- RAR archives [33]: 4000 files;
- ZIP archives [33]: 4000 files;
- 7Z archives [33]: 4000 files.

The experiment was conducted in the Anaconda software environment [34]. Since the obtained frequencies of occurrence of N-bit subsequences are quite small ($10^{-5} \ldots 10^{-6}$), the values were converted to a logarithmic scale to improve classification accuracy (logarithmic values). Machine learning algorithms were used to construct and evaluate the classifiers [35]: a decision tree classifier (DTC), a decision tree classifier on logarithmic values (DTCL), a random forest classifier (RFC), and a random forest classifier on logarithmic values (RFCL). The resulting accuracy of PRS classification as a function of the subsequence length N is shown in Fig. 3.

Fig. 3. Accuracy for classification of 4 classes of PRS (horizontal axis: length of the subsequences N, bits).

The results indicate that the proposed algorithm can classify PRSs generated by encryption algorithms, compression algorithms, and pseudo-random number generators with an accuracy greater than 0.95 for a subsequence length of N = 9 bits.

Two algorithms for constructing classifiers were used in the experiments: decision tree construction and random forest construction. The decision tree algorithm showed the higher PRS classification accuracy. To further improve the accuracy of the classifier, the frequencies of occurrence of subsequences were converted to a logarithmic scale, which raised the PRS classification accuracy to 0.98.
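The feature-space construction (Fig. 1) and Step 2 of the classification algorithm (Fig. 2) can be sketched in Python as below. This is a minimal sketch under stated assumptions, not the authors' implementation. Equation (2) is not reproduced in this section, so the frequency is assumed to be the overlapping-window count $n_v$ divided by the number of windows $M_p - N_v + 1$, which reproduces the values in Table II. The "half" of the patterns is assumed to be those beginning with 0, as listed in Table II. The most-significant-bit-first byte order, the `floor` applied before taking logarithms, and all function names are hypothetical.

```python
import math


def bytes_to_bits(data: bytes) -> str:
    """Expand bytes into a '0'/'1' string, most significant bit first (assumed order)."""
    return "".join(f"{byte:08b}" for byte in data)


def subsequence_frequencies(bits: str, n: int, half: bool = True) -> list[float]:
    """Frequencies of all n-bit patterns in `bits`, in lexicographic order.

    Patterns are counted with an overlapping sliding window, and each count
    n_v is divided by the number of windows M_p - n + 1 (assumed form of
    equation (2), chosen because it reproduces Table II). With half=True only
    the 2**(n - 1) patterns starting with '0' are returned, giving the feature
    dimension S of equation (7).
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    windows = len(bits) - n + 1
    if windows <= 0:
        raise ValueError("sequence is shorter than the subsequence length")
    counts = [0] * (1 << n)
    for i in range(windows):
        counts[int(bits[i:i + n], 2)] += 1  # the pattern's value doubles as its index
    # Patterns with a leading '0' occupy indices 0 .. 2**(n - 1) - 1.
    kept = counts[: 1 << (n - 1)] if half else counts
    return [c / windows for c in kept]


def log_scale(freqs: list[float], floor: float = 1e-12) -> list[float]:
    """Base-10 logarithm of each frequency; zero frequencies are clamped to `floor` (assumed)."""
    return [math.log10(max(f, floor)) for f in freqs]


if __name__ == "__main__":
    # Table II example: s = 1011010001, N = 3.
    print(subsequence_frequencies("1011010001", 3))  # [0.125, 0.125, 0.125, 0.125]
    # Dimension check for N = 9: S = 2**8 features.
    print(len(subsequence_frequencies("01" * 600, 9)))  # 256
```

For a file, `subsequence_frequencies(bytes_to_bits(data), 9)` would yield the 256 features for N = 9. Passing the result through `log_scale` would give the "logarithmic values" used by DTCL and RFCL. Filling a full $2^N$ count array in a single pass keeps the work linear in $M_p$ instead of rescanning the sequence once per feature. The string representation is chosen for readability, not memory efficiency.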
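The per-class quantities (5) and the accuracies (3) and (4) can be computed from a confusion matrix laid out as in Table I, with rows for the predicted class and columns for the correct class. The sketch follows equation (5) as written. Note that TN in (5) counts only the correctly classified PRSs of the other classes, which is narrower than the usual one-vs-rest definition. The function name and the list-of-lists input format are assumptions for illustration.

```python
def per_class_accuracy(matrix: list[list[int]]) -> tuple[list[float], float]:
    """Accuracy_{y_i} for each class and Accuracy_total, following (3)-(5).

    matrix[r][c] holds the number of PRSs predicted as class r whose correct
    class is c, so the diagonal holds T_i and off-diagonal cells hold F_{r,c}.
    """
    k = len(matrix)
    if k == 0 or any(len(row) != k for row in matrix):
        raise ValueError("confusion matrix must be square and non-empty")
    diagonal_sum = sum(matrix[c][c] for c in range(k))
    accuracies = []
    for i in range(k):
        tp = matrix[i][i]                                    # TP = T_i
        tn = diagonal_sum - tp                               # TN = sum_c T_c - TP
        fp = sum(matrix[i][c] for c in range(k) if c != i)   # FP = sum_c F_{i,c} (row i)
        fn = sum(matrix[c][i] for c in range(k) if c != i)   # FN = sum_c F_{c,i} (column i)
        total = tp + tn + fp + fn
        accuracies.append((tp + tn) / total if total else 0.0)  # equation (3)
    return accuracies, sum(accuracies) / k                   # equation (4)


if __name__ == "__main__":
    # Toy 2-class matrix: rows = predicted class, columns = correct class.
    per_class, overall = per_class_accuracy([[10, 2], [0, 8]])
    print(per_class, overall)  # [0.9, 0.9] 0.9
```

Under condition (6), the classifier whose `overall` value is closest to 1 would be preferred.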
IV. CONCLUSION

Modern DLP systems cannot detect encrypted or compressed data with high accuracy. As a result, a channel that carries data in encrypted or compressed form can be used to transmit confidential data when no information about the compression algorithm is available. In this paper, we proposed a classification algorithm consisting of several stages. First, the most significant statistical features of random sequences are determined on a training data sample using the random forest algorithm. Second, the classification itself is performed using the decision tree algorithm. The proposed feature extraction and classification algorithm increased the accuracy of classifying encrypted and compressed data to 0.98.

ACKNOWLEDGMENT

The reported study was funded by the Russian Ministry of Science (information security, project number 18/2020).

REFERENCES

[1] Data Breach Report: A Study on Global Data Leaks in H1 2018, InfoWatch, https://www.infowatch.ru/analytics/reports (Access date: 14.01.2020).

[2] Mahesh B. B., Bhanu M. S., Prevention of insider attacks by integrating behavior analysis with risk based access control model to protect cloud, Procedia Computer Science, Vol. 54, 2015, pp. 157-166.

[3] Kolevski D., Michael K., Cloud computing data breaches: a socio-technical review of literature, 2015 International Conference on Green Computing and Internet of Things (ICGCIoT), Greater Noida, India, 2015, pp. 1486-1495.

[4] Alneyadi S., Sithirasenan E., Muthukkumarasamy V., Detecting Data Semantic: A Data Leakage Prevention Approach, IEEE Trustcom/BigDataSE/ISPA, Helsinki, Finland, Vol. 1, 2015, pp. 910-917.

[5] Alneyadi S., Sithirasenan E., Muthukkumarasamy V., Discovery of potential data leaks in email communications, 10th International Conference on Signal Processing and Communication Systems (ICSPCS), Gold Coast, Australia, 2016, pp. 1-10.

[6] Huang X., Lu Y., Li D., Ma M., A novel mechanism for fast detection of transformed data leakage, IEEE Access, Vol. 6, 2018, pp. 35926-35936.

[7] Kaur K., Gupta I., Singh A. K., Comparative Evaluation of Data Leakage/Loss Prevention Systems (DLPS), Proc. 4th Int. Conf. Computer Science & Information Technology (CS & IT-CSCP), 2017, pp. 87-95.

[8] Cheng L., Liu F., Yao D., Enterprise data breach: causes, challenges, prevention, and future directions, Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery, Vol. 7, No. 5, 2017, pp. 1211.

[9] Shu X., Yao D., Bertino E., Privacy-Preserving Detection of Sensitive Data Exposure, IEEE Transactions on Information Forensics and Security, Vol. 10, No. 5, 2015, pp. 1092-1103.

[10] Liu F., Shu X., Yao D., Butt A. R., Privacy-preserving scanning of big content for sensitive data exposure with MapReduce, Proceedings of the 5th ACM Conference on Data and Application Security and Privacy, 2015, pp. 195-206.

[11] Shu X., Zhang J., Yao D., Feng W., Rapid and parallel content screening for detecting transformed data exposure, Proceedings of the Third International Workshop on Security and Privacy in Big Data, 2015, pp. 191-196.

[12] Shu X., Zhang J., Yao D. D., Feng W. C., Fast Detection of Transformed Data Leaks, IEEE Transactions on Information Forensics and Security, Vol. 11, No. 3, 2016, pp. 528-542.

[13] Yu X., Tian Z., Qiu J., Jiang F., A data leakage prevention method based on the reduction of confidential and context terms for smart mobile devices, Wireless Communications and Mobile Computing, 2018, DOI: 10.1155/2018/5823439.

[14] Shu X., Yao D., Bertino E., Privacy-Preserving Detection of Sensitive Data Exposure, IEEE Transactions on Information Forensics and Security, Vol. 10, No. 5, 2015, pp. 1092-1103.

[15] Shvartzshnaider Y., Pavlinovic Z., Balashankar A., Wies T., Subramanian L., Nissenbaum H., Mittal P., VACCINE: Using Contextual Integrity for Data Leakage Detection, The World Wide Web Conference, 2019, pp. 1702-1712.

[16] Kavitha T., Rajitha O., Thejaswi K., Muppalaneni N. B., Classification of encryption algorithms based on ciphertext using pattern recognition techniques, International Conference on Computer Networks, Big Data and IoT, 2018, pp. 540-545.

[17] Tan C., Ji Q., An approach to identifying cryptographic algorithm from ciphertext, 8th IEEE International Conference on Communication Software and Networks, 2016, pp. 19-23.

[18] Tan C., Li Y., Yao S., A Novel Identification Approach to Encryption Mode of Block Cipher, 4th International Conference on Sensors, Mechatronics and Automation, Zhuhai, China, 2016, DOI: 10.2991/icsma-16.2016.101.

[19] Tan C., Deng X., Zhang L., Identification of Block Ciphers under CBC Mode, Procedia Computer Science, Vol. 131, 2018, pp. 65-71.

[20] Ray P. K., Ojha S., Roy B. K., Basu A., Classification of Encryption Algorithms using Fisher's Discriminant Analysis, Defence Science Journal, Vol. 67, No. 1, 2017, pp. 59-65.

[21] Pan J., Encryption scheme classification: a deep learning approach, International Journal of Electronic Security and Digital Forensics, Vol. 9, No. 4, 2017, pp. 381-395.

[22] Wang W., Zhu M., Zeng X., Ye X., Sheng Y., Malware traffic classification using convolutional neural network for representation learning, International Conference on Information Networking (ICOIN), 2017, pp. 712-717.

[23] Wang W., Zhu M., Wang J., Zeng X., Yang Z., End-to-end encrypted traffic classification with one-dimensional convolution neural networks, IEEE International Conference on Intelligence and Security Informatics (ISI), 2017, pp. 43-48.

[24] Lotfollahi M., Siavoshani M. J., Zade R. S. H., Saberian M., Deep packet: A novel approach for encrypted traffic classification using deep learning, Soft Computing, 2017, pp. 1-14.

[25] Zhang J., Chen X., Xiang Y., Zhou W., Wu J., Robust network traffic classification, IEEE/ACM Transactions on Networking, Vol. 23, No. 4, 2015, pp. 1257-1270.

[26] Pacheco F., Exposito E., Gineste M., Baudoin C., Aguilar J., Towards the deployment of machine learning solutions in network traffic classification: a systematic survey, IEEE Communications Surveys & Tutorials, Vol. 21, No. 2, 2018, pp. 1988-2014.

[27] Hahn D., Apthorpe N., Feamster N., Detecting compressed cleartext traffic from consumer internet of things devices, arXiv preprint arXiv:1805.02722, 2018.

[28] Casino F., Choo K. K. R., Patsakis C., HEDGE: efficient traffic classification of encrypted and compressed packets, IEEE Transactions on Information Forensics and Security, Vol. 14, No. 11, 2019, pp. 2916-2926.

[29] Tang Z., Zeng X., Sheng Y., Entropy-based feature extraction algorithm for encrypted and non-encrypted compressed traffic classification, International Journal of ICIC, Vol. 15, No. 3, 2019.

[30] Khakpour A. R., Liu A. X., An information-theoretical approach to high-speed flow nature identification, IEEE/ACM Transactions on Networking, Vol. 21, No. 4, 2012, pp. 1076-1089.

[31] Konyshev M. U., Dvilyansky A. A., Barabashov A. Y., Petrov K. Y., Formation of probability distributions of binary vectors of the error source of a Markov discrete memory link using the method of "grouping probabilities" of error vectors, Industrial ACS and controllers, No. 3, 2018, p. 42.

[32] Konyshev M. U., Dvilyansky A. A., Petrov K. Y., Ermishin G. A., Algorithm for compression of a distribution series of binary and multidimensional random variables, Industrial ACS and controllers, No. 8, 2016, pp. 47-50.

[33] Toolkit for the transport layer security and secure sockets layer protocols (Access date: 14.01.2020).

[34] Archive manager WinRAR (Access date: 14.01.2020).

[35] Program environment Anaconda, https://www.anaconda.com/distribution/ (Access date: 14.01.2020).

[36] Breiman L., Classification and regression trees, Routledge, 2017, p. 358.
ABOUT THE AUTHORS

Alexander Kozachok
- Workplace: Academy of the Federal Guard Service of the Russian Federation.
- Email: alex.totrin@gmail.com
- Education: received his PhD degree in Engineering Sciences from the Academy of the Federal Guard Service of the Russian Federation in December 2012; received his doctorate in Engineering Sciences in 2019.
- Recent research directions: information security, protection against unauthorized access, mathematical cryptography, theoretical problems of computer science.

Andrey Spirin
- Workplace: Academy of the Federal Guard Service of the Russian Federation.
- Email: spirin_aa@bk.ru
- Education: postgraduate student at the Academy of the Federal Guard Service of the Russian Federation.
- Recent research directions: information security, DLP systems, machine learning algorithms, classification of binary sequences.
