Dynamic texture map based artifact reduction for compressed videos

Video traffic is increasing rapidly. According to a study from Cisco [1], video traffic will account for over 81% of global traffic by 2021. Compressing videos to reduce storage space and channel bandwidth is therefore inevitable, and many block-based compression standards, such as JPEG, MPEG, and H.26x, have been developed to meet this requirement. However, these lossy compression methods suffer from spatial artifacts (blocking and ringing) and temporal artifacts (mosquito and flickering) [2, 3], especially at low bit rates.

Blocking artifacts occur when neighboring blocks are compressed independently. In addition, coarse quantization and truncation of high-frequency Discrete Cosine Transform (DCT) coefficients cause ringing artifacts. In interframe coding, at the borders of moving objects, an interframe-predicted block may contain only part of the predicted moving object. The prediction error is then sometimes large and can cause mosquito artifacts. The authors in [4] and [5] introduce methods for flicker detection and reduction; however, these methods require the original frames, which are not available at the decoder.
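The two spatial mechanisms described above can be reproduced with a toy 1-D experiment. The Python sketch below works under simplifying assumptions: an orthonormal floating-point DCT-II on 8-sample blocks, with "quantization" modeled as zeroing every coefficient after the first `keep` (real codecs use 2-D integer transforms and quantization tables). Each block is coded independently. A smooth ramp turns into a staircase with a jump at the block border (blocking), and a sharp edge develops undershoot and overshoot (ringing). The helper names `dct`, `idct`, and `code_blocks` are illustrative only.

```python
import math


def dct(x):
    """Orthonormal 1-D DCT-II."""
    n = len(x)
    coeffs = []
    for k in range(n):
        scale = math.sqrt((1.0 if k == 0 else 2.0) / n)
        coeffs.append(scale * sum(x[i] * math.cos(math.pi * (i + 0.5) * k / n) for i in range(n)))
    return coeffs


def idct(c):
    """Inverse of dct() (orthonormal DCT-III)."""
    n = len(c)
    return [
        sum(
            math.sqrt((1.0 if k == 0 else 2.0) / n) * c[k] * math.cos(math.pi * (i + 0.5) * k / n)
            for k in range(n)
        )
        for i in range(n)
    ]


def code_blocks(signal, block=8, keep=1):
    """Code each block independently, keeping only its first `keep` DCT coefficients.

    Zeroing the high-frequency coefficients is a crude stand-in for coarse
    quantization; no information is shared across block borders.
    """
    out = []
    for start in range(0, len(signal), block):
        c = dct(signal[start:start + block])
        c = [v if k < keep else 0.0 for k, v in enumerate(c)]
        out.extend(idct(c))
    return out


if __name__ == "__main__":
    ramp = [float(i) for i in range(16)]  # smooth ramp across two blocks
    print([round(v, 2) for v in code_blocks(ramp, keep=1)])
    # each block collapses to its mean (3.5, then 11.5): a visible step at the border

    edge = [0.0] * 4 + [1.0] * 4  # sharp edge inside one block
    print([round(v, 2) for v in code_blocks(edge, keep=4)])
    # values dip below 0 and rise above 1 next to the edge: ringing
```

Keeping all eight coefficients reconstructs each block exactly, so the artifacts come entirely from discarding coefficients block by block.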

Artifacts cause discomfort to human visual perception, so artifact removal is an essential task. In general, image and video quality enhancement techniques can be implemented at either the encoding side or the decoding side. Enhancement methods at the encoding side ([6] and [7]) are not compatible with existing video or image compression standards. Therefore, postprocessing techniques at the decoding side have received much more attention because of their compatibility with existing compression standards.


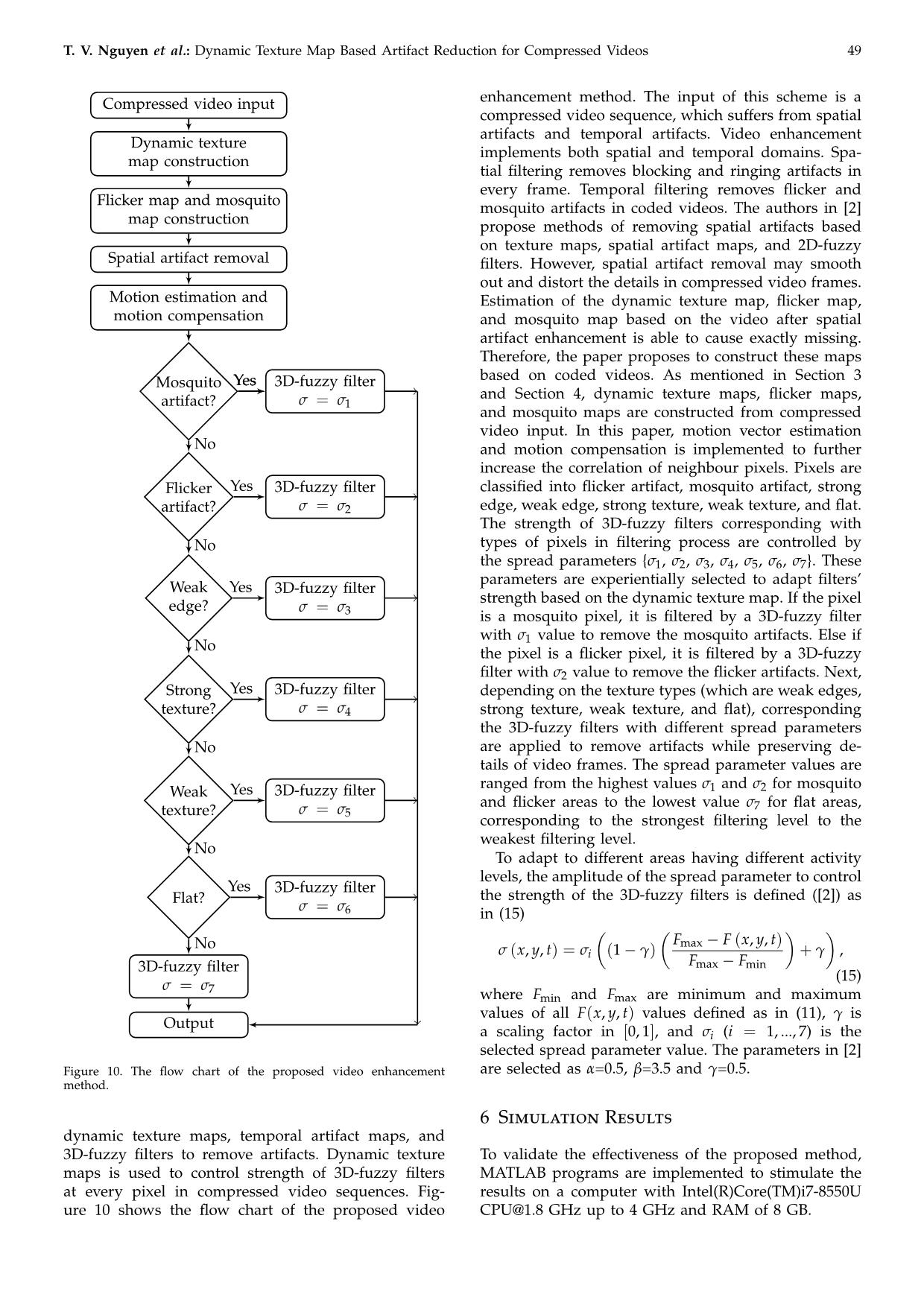

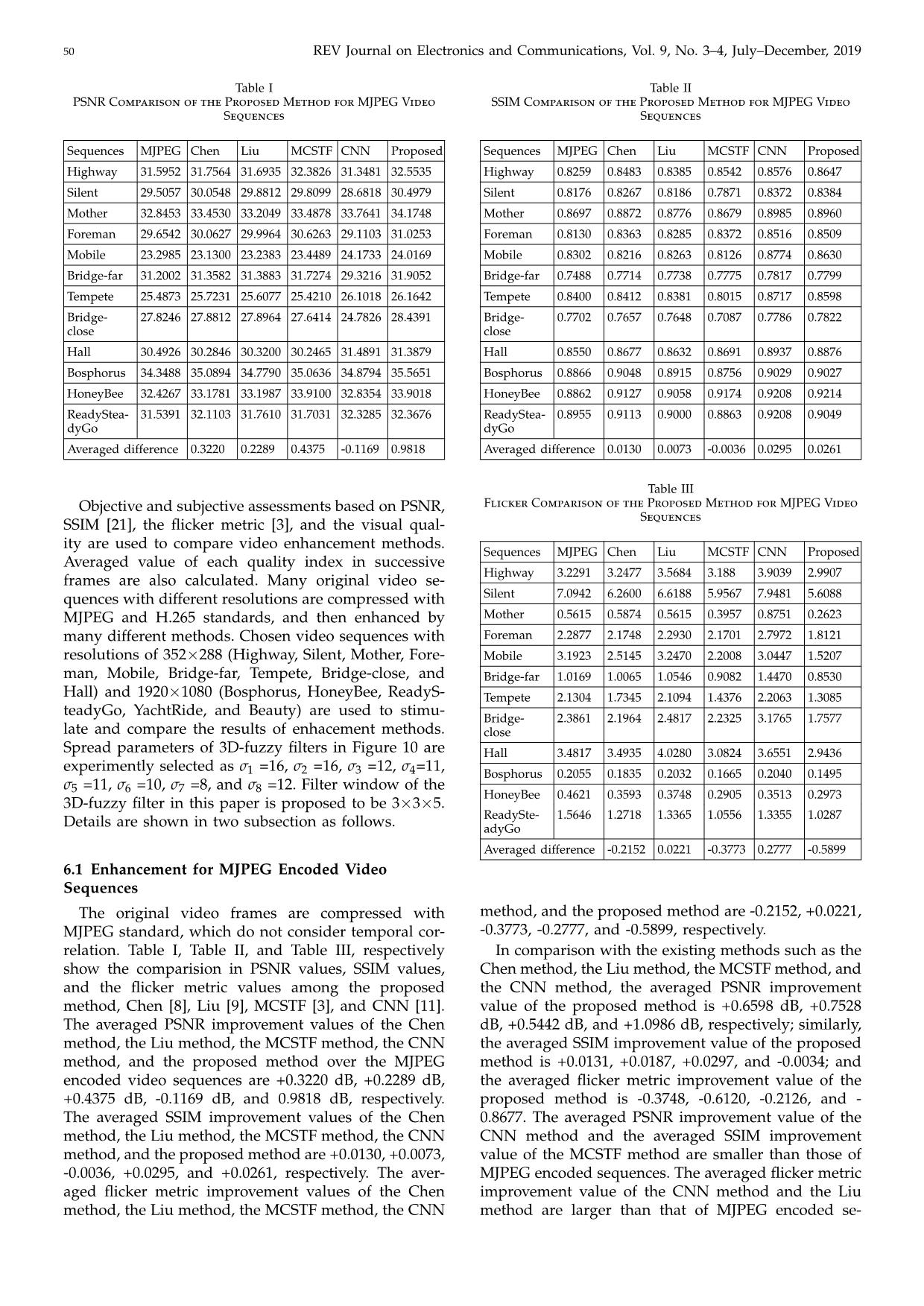

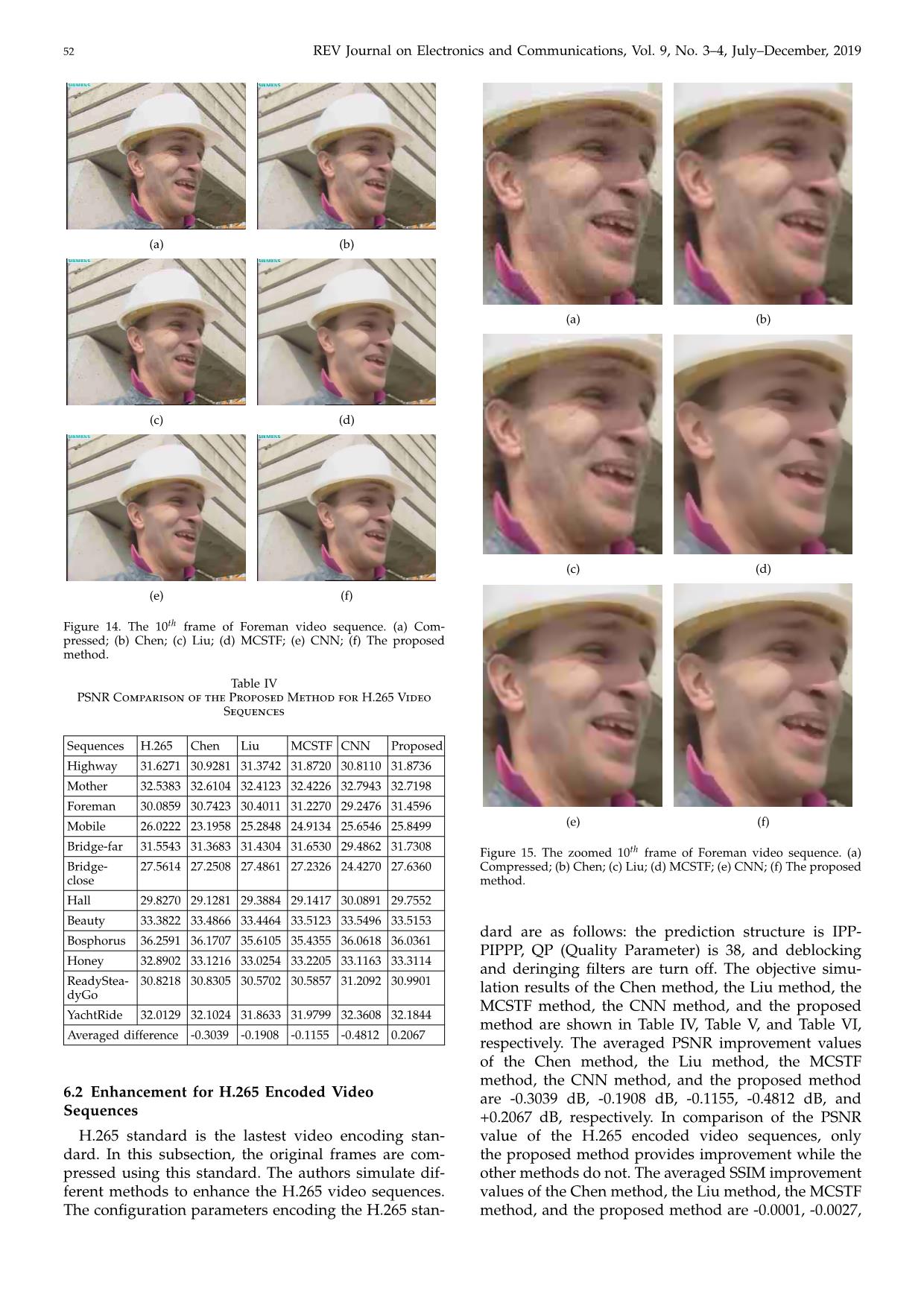

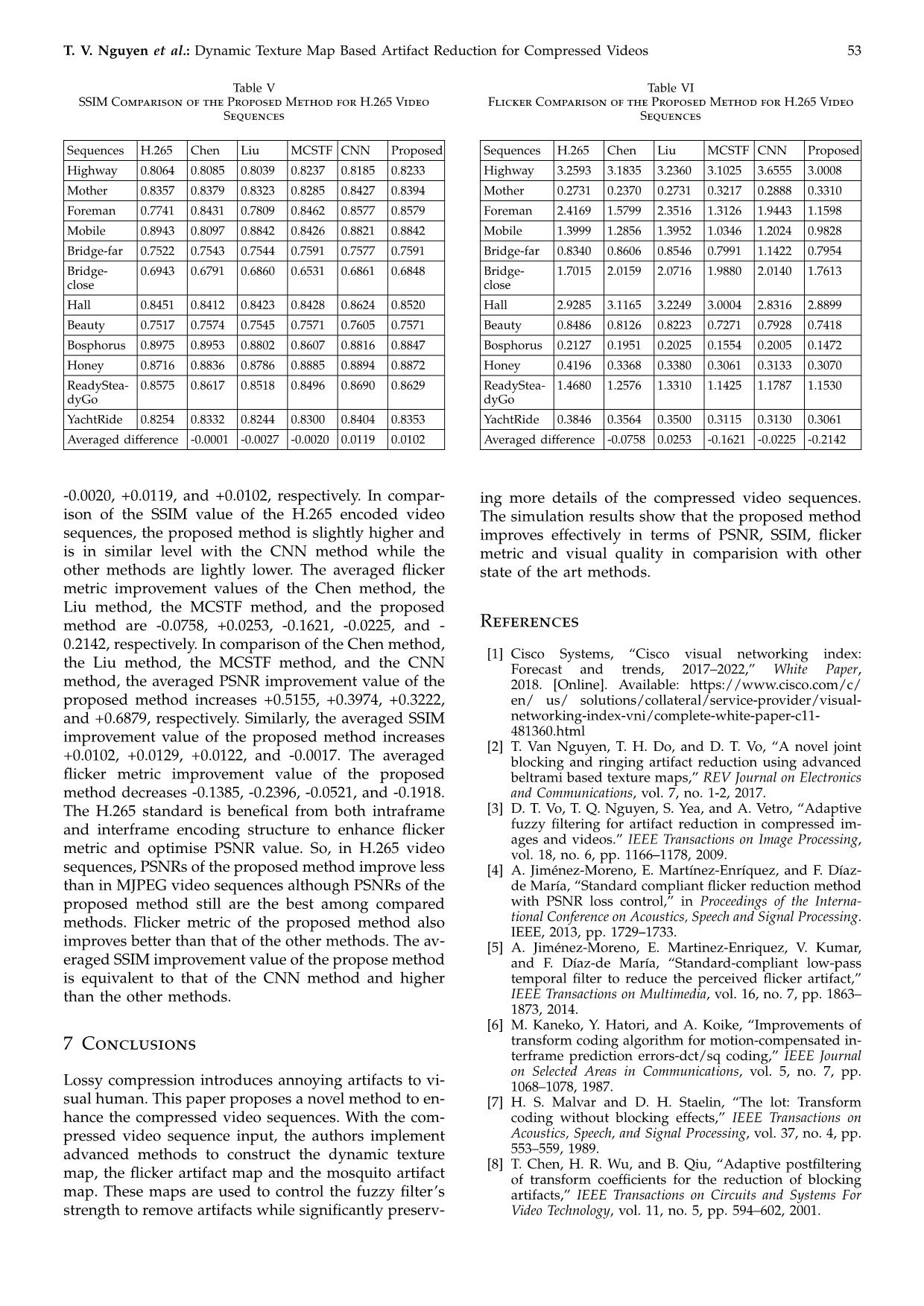

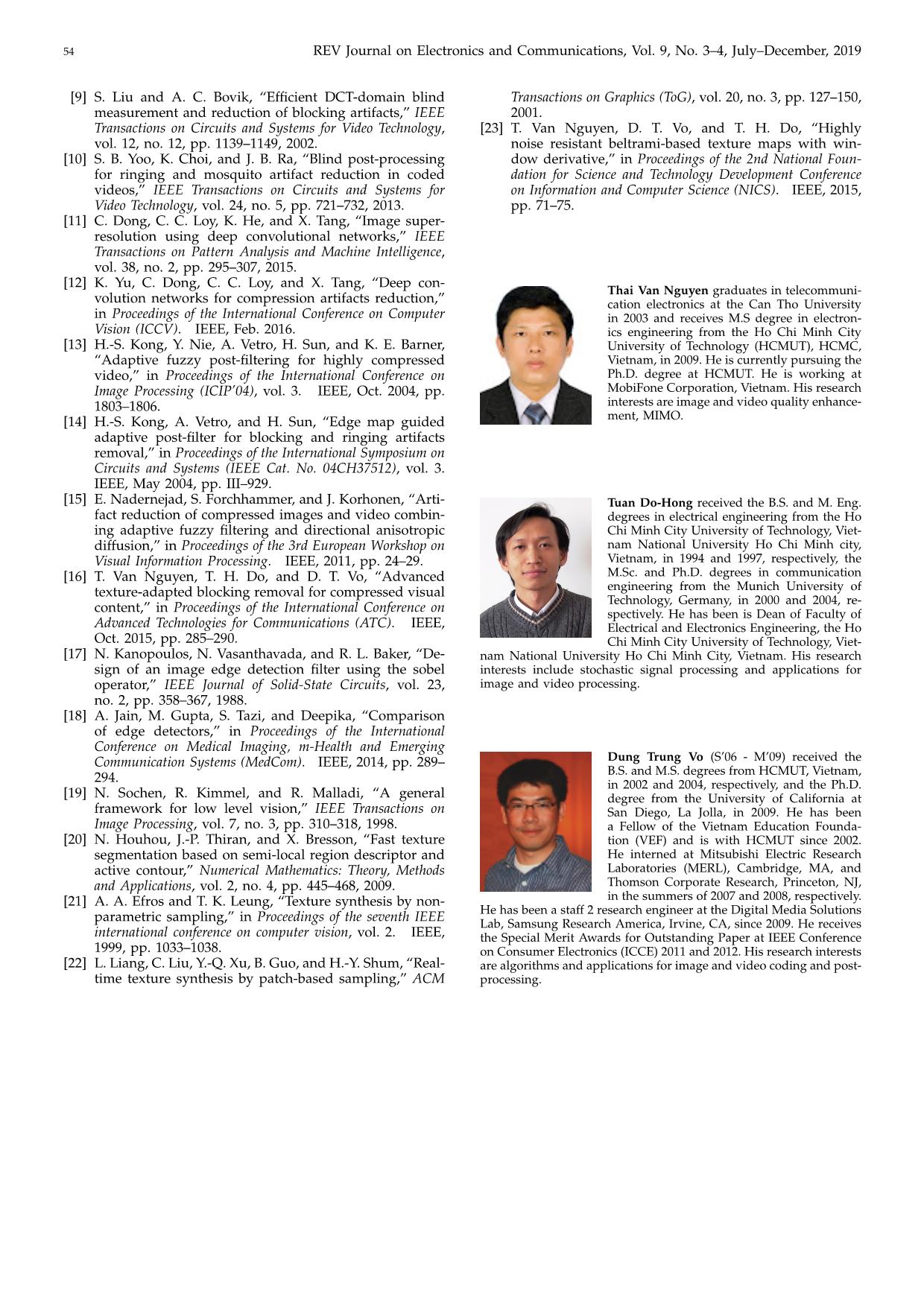

… in Figure 11 and Figure 12. As these results show, the Chen method result (Figure 12(b)) is blurry; the Liu method result (Figure 12(c)) still contains many ringing artifacts; the MCSTF method gives a good result but loses many details (Figure 12(d)); and the CNN method result (Figure 12(e)) and the proposed method result (Figure 12(f)) improve quality more than the other methods. However, according to Figure 13, the flicker metric of the CNN method is not improved.

Figure 12. The zoomed 10th frame of the Mobile video sequence. (a) Compressed; (b) Chen; (c) Liu; (d) MCSTF; (e) CNN; (f) The proposed method.

Figure 13. Flicker metric comparison in the Mobile video sequence.

Similarly, the results for the 10th frame of the Foreman video sequence are shown in Figure 14 and Figure 15, where the proposed method, the MCSTF method, and the CNN method give the best quality. However, the CNN method result shows color bleeding, and the MCSTF method result loses many details.

For the enhancement of MJPEG-encoded video sequences, the above results show that the proposed method outperforms the other methods in terms of PSNR, SSIM (except for the CNN method), flicker metric, and visual quality. The SSIM values of the CNN method and the proposed method are equivalent.

52 REV Journal on Electronics and Communications, Vol. 9, No. 3–4, July–December, 2019

Figure 14. The 10th frame of the Foreman video sequence. (a) Compressed; (b) Chen; (c) Liu; (d) MCSTF; (e) CNN; (f) The proposed method.
Figure 15. The zoomed 10th frame of the Foreman video sequence. (a) Compressed; (b) Chen; (c) Liu; (d) MCSTF; (e) CNN; (f) The proposed method.

6.2 Enhancement for H.265 Encoded Video Sequences

H.265 is the latest video coding standard. In this subsection, the original frames are compressed using this standard, and the authors apply the different methods to enhance the resulting H.265 video sequences. The H.265 encoding parameters are as follows: the prediction structure is IPPPIPPP, the QP (quantization parameter) is 38, and the deblocking and deringing filters are turned off. The objective simulation results of the Chen method, the Liu method, the MCSTF method, the CNN method, and the proposed method are shown in Table IV (PSNR), Table V (SSIM), and Table VI (flicker metric). The averaged PSNR improvement values of the Chen method, the Liu method, the MCSTF method, the CNN method, and the proposed method are -0.3039 dB, -0.1908 dB, -0.1155 dB, -0.4812 dB, and +0.2067 dB, respectively.

Table IV. PSNR (dB) Comparison of the Proposed Method for H.265 Video Sequences

| Sequence | H.265 | Chen | Liu | MCSTF | CNN | Proposed |
|---|---|---|---|---|---|---|
| Highway | 31.6271 | 30.9281 | 31.3742 | 31.8720 | 30.8110 | 31.8736 |
| Mother | 32.5383 | 32.6104 | 32.4123 | 32.4226 | 32.7943 | 32.7198 |
| Foreman | 30.0859 | 30.7423 | 30.4011 | 31.2270 | 29.2476 | 31.4596 |
| Mobile | 26.0222 | 23.1958 | 25.2848 | 24.9134 | 25.6546 | 25.8499 |
| Bridge-far | 31.5543 | 31.3683 | 31.4304 | 31.6530 | 29.4862 | 31.7308 |
| Bridge-close | 27.5614 | 27.2508 | 27.4861 | 27.2326 | 24.4270 | 27.6360 |
| Hall | 29.8270 | 29.1281 | 29.3884 | 29.1417 | 30.0891 | 29.7552 |
| Beauty | 33.3822 | 33.4866 | 33.4464 | 33.5123 | 33.5496 | 33.5153 |
| Bosphorus | 36.2591 | 36.1707 | 35.6105 | 35.4355 | 36.0618 | 36.0361 |
| Honey | 32.8902 | 33.1216 | 33.0254 | 33.2205 | 33.1163 | 33.3114 |
| ReadySteadyGo | 30.8218 | 30.8305 | 30.5702 | 30.5857 | 31.2092 | 30.9901 |
| YachtRide | 32.0129 | 32.1024 | 31.8633 | 31.9799 | 32.3608 | 32.1844 |
| Averaged difference | – | -0.3039 | -0.1908 | -0.1155 | -0.4812 | 0.2067 |
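The "Averaged difference" row of Table IV is each method's PSNR minus the H.265 input's PSNR, averaged over the twelve sequences. As a sanity check, the Python sketch below recomputes that row from the transcribed table values; it reproduces the reported figures up to rounding in the last digit. The data layout and the function name `averaged_difference` are illustrative choices, not taken from the paper, and the same function applies unchanged to Tables V and VI.

```python
# PSNR values (dB) transcribed from Table IV.
# Each entry: sequence -> (H.265 input, [Chen, Liu, MCSTF, CNN, Proposed]).
METHODS = ["Chen", "Liu", "MCSTF", "CNN", "Proposed"]
TABLE_IV = {
    "Highway":       (31.6271, [30.9281, 31.3742, 31.8720, 30.8110, 31.8736]),
    "Mother":        (32.5383, [32.6104, 32.4123, 32.4226, 32.7943, 32.7198]),
    "Foreman":       (30.0859, [30.7423, 30.4011, 31.2270, 29.2476, 31.4596]),
    "Mobile":        (26.0222, [23.1958, 25.2848, 24.9134, 25.6546, 25.8499]),
    "Bridge-far":    (31.5543, [31.3683, 31.4304, 31.6530, 29.4862, 31.7308]),
    "Bridge-close":  (27.5614, [27.2508, 27.4861, 27.2326, 24.4270, 27.6360]),
    "Hall":          (29.8270, [29.1281, 29.3884, 29.1417, 30.0891, 29.7552]),
    "Beauty":        (33.3822, [33.4866, 33.4464, 33.5123, 33.5496, 33.5153]),
    "Bosphorus":     (36.2591, [36.1707, 35.6105, 35.4355, 36.0618, 36.0361]),
    "Honey":         (32.8902, [33.1216, 33.0254, 33.2205, 33.1163, 33.3114]),
    "ReadySteadyGo": (30.8218, [30.8305, 30.5702, 30.5857, 31.2092, 30.9901]),
    "YachtRide":     (32.0129, [32.1024, 31.8633, 31.9799, 32.3608, 32.1844]),
}


def averaged_difference(table):
    """Mean over all sequences of (method score - compressed-input score), per method."""
    n_methods = len(next(iter(table.values()))[1])
    totals = [0.0] * n_methods
    for baseline, scores in table.values():
        for j, score in enumerate(scores):
            totals[j] += score - baseline
    return [t / len(table) for t in totals]


if __name__ == "__main__":
    for name, diff in zip(METHODS, averaged_difference(TABLE_IV)):
        print(f"{name:9s} {diff:+.4f} dB")
```

Because the mean of per-sequence differences equals the difference of the column means, the same row could also be obtained by averaging each column first.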
For the PSNR of the H.265-encoded video sequences, only the proposed method provides an improvement; the other methods do not. The averaged SSIM improvement values of the Chen method, the Liu method, the MCSTF method, the CNN method, and the proposed method are -0.0001, -0.0027, -0.0020, +0.0119, and +0.0102, respectively. In terms of SSIM for the H.265-encoded video sequences, the proposed method is slightly higher than the compressed input and on a similar level to the CNN method, while the other methods are slightly lower. The averaged flicker metric improvement values of the Chen method, the Liu method, the MCSTF method, the CNN method, and the proposed method are -0.0758, +0.0253, -0.1621, -0.0225, and -0.2142, respectively (a negative value means less flicker). Compared with the Chen method, the Liu method, the MCSTF method, and the CNN method, the averaged PSNR improvement of the proposed method is higher by 0.5105 dB, 0.3974 dB, 0.3222 dB, and 0.6879 dB, respectively. Similarly, the differences in averaged SSIM improvement are +0.0102, +0.0129, +0.0122, and -0.0017.

T. V. Nguyen et al.: Dynamic Texture Map Based Artifact Reduction for Compressed Videos 53

Table V. SSIM Comparison of the Proposed Method for H.265 Video Sequences

| Sequence | H.265 | Chen | Liu | MCSTF | CNN | Proposed |
|---|---|---|---|---|---|---|
| Highway | 0.8064 | 0.8085 | 0.8039 | 0.8237 | 0.8185 | 0.8233 |
| Mother | 0.8357 | 0.8379 | 0.8323 | 0.8285 | 0.8427 | 0.8394 |
| Foreman | 0.7741 | 0.8431 | 0.7809 | 0.8462 | 0.8577 | 0.8579 |
| Mobile | 0.8943 | 0.8097 | 0.8842 | 0.8426 | 0.8821 | 0.8842 |
| Bridge-far | 0.7522 | 0.7543 | 0.7544 | 0.7591 | 0.7577 | 0.7591 |
| Bridge-close | 0.6943 | 0.6791 | 0.6860 | 0.6531 | 0.6861 | 0.6848 |
| Hall | 0.8451 | 0.8412 | 0.8423 | 0.8428 | 0.8624 | 0.8520 |
| Beauty | 0.7517 | 0.7574 | 0.7545 | 0.7571 | 0.7605 | 0.7571 |
| Bosphorus | 0.8975 | 0.8953 | 0.8802 | 0.8607 | 0.8816 | 0.8847 |
| Honey | 0.8716 | 0.8836 | 0.8786 | 0.8885 | 0.8894 | 0.8872 |
| ReadySteadyGo | 0.8575 | 0.8617 | 0.8518 | 0.8496 | 0.8690 | 0.8629 |
| YachtRide | 0.8254 | 0.8332 | 0.8244 | 0.8300 | 0.8404 | 0.8353 |
| Averaged difference | – | -0.0001 | -0.0027 | -0.0020 | 0.0119 | 0.0102 |
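The pairwise comparisons in this discussion are differences between entries of the "Averaged difference" rows: the proposed method's value minus each baseline's. The Python sketch below recomputes them for all three metrics from Tables IV, V, and VI. Because those rows are already rounded to four decimals, a result can differ from the reported figure in the last digit. For PSNR and SSIM a positive gap favors the proposed method, while for the flicker metric (lower is better) a negative gap does. The names `AVG_DIFF` and `gaps` are illustrative.

```python
# "Averaged difference" rows of Tables IV (PSNR, dB), V (SSIM) and VI (flicker),
# in the column order Chen, Liu, MCSTF, CNN, Proposed.
AVG_DIFF = {
    "PSNR": (-0.3039, -0.1908, -0.1155, -0.4812, 0.2067),
    "SSIM": (-0.0001, -0.0027, -0.0020, 0.0119, 0.0102),
    "Flicker": (-0.0758, 0.0253, -0.1621, -0.0225, -0.2142),
}
BASELINES = ("Chen", "Liu", "MCSTF", "CNN")


def gaps(row):
    """Return the proposed method's averaged difference minus each baseline's."""
    *baselines, proposed = row
    return [proposed - b for b in baselines]


if __name__ == "__main__":
    for metric, row in AVG_DIFF.items():
        pairs = ", ".join(f"{b}: {g:+.4f}" for b, g in zip(BASELINES, gaps(row)))
        print(f"{metric:7s} {pairs}")
```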
The averaged flicker metric improvement of the proposed method is lower than those of the Chen, Liu, MCSTF, and CNN methods by 0.1385, 0.2396, 0.0521, and 0.1918, respectively. The H.265 standard benefits from both its intraframe and interframe coding structures, which improve the flicker metric and optimize the PSNR value. Therefore, for H.265 video sequences, the PSNR improvement of the proposed method is smaller than for MJPEG video sequences, although its PSNR is still the best among the compared methods. The flicker metric of the proposed method also improves more than those of the other methods. The averaged SSIM improvement of the proposed method is equivalent to that of the CNN method and higher than those of the other methods.

Table VI. Flicker Comparison of the Proposed Method for H.265 Video Sequences

| Sequence | H.265 | Chen | Liu | MCSTF | CNN | Proposed |
|---|---|---|---|---|---|---|
| Highway | 3.2593 | 3.1835 | 3.2360 | 3.1025 | 3.6555 | 3.0008 |
| Mother | 0.2731 | 0.2370 | 0.2731 | 0.3217 | 0.2888 | 0.3310 |
| Foreman | 2.4169 | 1.5799 | 2.3516 | 1.3126 | 1.9443 | 1.1598 |
| Mobile | 1.3999 | 1.2856 | 1.3952 | 1.0346 | 1.2024 | 0.9828 |
| Bridge-far | 0.8340 | 0.8606 | 0.8546 | 0.7991 | 1.1422 | 0.7954 |
| Bridge-close | 1.7015 | 2.0159 | 2.0716 | 1.9880 | 2.0140 | 1.7613 |
| Hall | 2.9285 | 3.1165 | 3.2249 | 3.0004 | 2.8316 | 2.8899 |
| Beauty | 0.8486 | 0.8126 | 0.8223 | 0.7271 | 0.7928 | 0.7418 |
| Bosphorus | 0.2127 | 0.1951 | 0.2025 | 0.1554 | 0.2005 | 0.1472 |
| Honey | 0.4196 | 0.3368 | 0.3380 | 0.3061 | 0.3133 | 0.3070 |
| ReadySteadyGo | 1.4680 | 1.2576 | 1.3310 | 1.1425 | 1.1787 | 1.1530 |
| YachtRide | 0.3846 | 0.3564 | 0.3500 | 0.3115 | 0.3130 | 0.3061 |
| Averaged difference | – | -0.0758 | 0.0253 | -0.1621 | -0.0225 | -0.2142 |

7 Conclusions

Lossy compression introduces artifacts that are annoying to human viewers. This paper proposes a novel method to enhance compressed video sequences. Taking the compressed video sequence as input, the authors implement advanced methods to construct the dynamic texture map, the flicker artifact map, and the mosquito artifact map. These maps are used to control the strength of the fuzzy filter so that artifacts are removed while significantly more details of the compressed video sequences are preserved.
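The paper's exact map construction and filter parameters are not reproduced in this section, so the Python sketch below only illustrates how a map can steer a fuzzy filter's strength, under stated assumptions. It uses a simplified fuzzy filter in the spirit of [3]: each pixel is replaced by a membership-weighted average of its 3×3 neighborhood, with a Gaussian membership function of the intensity difference to the centre pixel. A hypothetical per-pixel `strength` value in [0, 1], standing in for whatever the dynamic texture, flicker, and mosquito maps produce, scales the membership spread linearly. The function name, `max_spread`, and the linear mapping are all assumptions.

```python
import math


def fuzzy_filter(img, strength, radius=1, max_spread=20.0, min_spread=1e-3):
    """Simplified fuzzy filter steered by a per-pixel strength map (illustrative).

    img:      grayscale image as a list of rows of floats.
    strength: same-sized list of rows with values in [0, 1] (hypothetical control map).
    Each output pixel is the average of its (2*radius+1)^2 neighbourhood, where each
    neighbour is weighted by a Gaussian membership of its difference to the centre.
    """
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for y in range(h):
        for x in range(w):
            # Assumed linear mapping from strength to the membership spread.
            spread = max(min_spread, strength[y][x] * max_spread)
            centre = img[y][x]
            num = den = 0.0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:  # skip out-of-image neighbours
                        d = img[yy][xx] - centre
                        mu = math.exp(-(d * d) / (2.0 * spread * spread))
                        num += mu * img[yy][xx]
                        den += mu
            out[y][x] = num / den  # den >= 1 because the centre has membership 1
    return out


if __name__ == "__main__":
    ripple = [[10.0, 12.0, 10.0], [12.0, 10.0, 12.0], [10.0, 12.0, 10.0]]
    full = [[1.0] * 3 for _ in range(3)]  # e.g. a flat, artifact-prone area
    none = [[0.0] * 3 for _ in range(3)]  # e.g. detailed texture to protect
    print(round(fuzzy_filter(ripple, full)[1][1], 2))  # pulled toward the local mean
    print(fuzzy_filter(ripple, none)[1][1])            # unchanged
```

With `strength` near 1 the filter smooths small ripples, such as ringing in flat areas, while large intensity jumps receive almost no weight, so strong edges survive. With `strength` near 0 the pixel is left essentially unchanged, which is how a texture-protecting map would preserve detail.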
The simulation results show that the proposed method effectively improves PSNR, SSIM, flicker metric, and visual quality in comparison with other state-of-the-art methods.

References

[1] Cisco Systems, “Cisco visual networking index: Forecast and trends, 2017–2022,” White Paper, 2018. [Online]. Available: https://www.cisco.com/c/en/us/solutions/collateral/service-provider/visual-networking-index-vni/complete-white-paper-c11-481360.html

[2] T. Van Nguyen, T. H. Do, and D. T. Vo, “A novel joint blocking and ringing artifact reduction using advanced Beltrami based texture maps,” REV Journal on Electronics and Communications, vol. 7, no. 1–2, 2017.

[3] D. T. Vo, T. Q. Nguyen, S. Yea, and A. Vetro, “Adaptive fuzzy filtering for artifact reduction in compressed images and videos,” IEEE Transactions on Image Processing, vol. 18, no. 6, pp. 1166–1178, 2009.

[4] A. Jiménez-Moreno, E. Martínez-Enríquez, and F. Díaz-de María, “Standard compliant flicker reduction method with PSNR loss control,” in Proceedings of the International Conference on Acoustics, Speech and Signal Processing. IEEE, 2013, pp. 1729–1733.

[5] A. Jiménez-Moreno, E. Martinez-Enriquez, V. Kumar, and F. Díaz-de María, “Standard-compliant low-pass temporal filter to reduce the perceived flicker artifact,” IEEE Transactions on Multimedia, vol. 16, no. 7, pp. 1863–1873, 2014.

[6] M. Kaneko, Y. Hatori, and A. Koike, “Improvements of transform coding algorithm for motion-compensated interframe prediction errors-DCT/SQ coding,” IEEE Journal on Selected Areas in Communications, vol. 5, no. 7, pp. 1068–1078, 1987.

[7] H. S. Malvar and D. H. Staelin, “The LOT: Transform coding without blocking effects,” IEEE Transactions on Acoustics, Speech, and Signal Processing, vol. 37, no. 4, pp. 553–559, 1989.

[8] T. Chen, H. R. Wu, and B. Qiu, “Adaptive postfiltering of transform coefficients for the reduction of blocking artifacts,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 11, no. 5, pp. 594–602, 2001.

[9] S. Liu and A. C. Bovik, “Efficient DCT-domain blind measurement and reduction of blocking artifacts,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 12, no. 12, pp. 1139–1149, 2002.

[10] S. B. Yoo, K. Choi, and J. B. Ra, “Blind post-processing for ringing and mosquito artifact reduction in coded videos,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 24, no. 5, pp. 721–732, 2013.

[11] C. Dong, C. C. Loy, K. He, and X. Tang, “Image super-resolution using deep convolutional networks,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 38, no. 2, pp. 295–307, 2015.

[12] K. Yu, C. Dong, C. C. Loy, and X. Tang, “Deep convolution networks for compression artifacts reduction,” in Proceedings of the International Conference on Computer Vision (ICCV). IEEE, Feb. 2016.

[13] H.-S. Kong, Y. Nie, A. Vetro, H. Sun, and K. E. Barner, “Adaptive fuzzy post-filtering for highly compressed video,” in Proceedings of the International Conference on Image Processing (ICIP’04), vol. 3. IEEE, Oct. 2004, pp. 1803–1806.

[14] H.-S. Kong, A. Vetro, and H. Sun, “Edge map guided adaptive post-filter for blocking and ringing artifacts removal,” in Proceedings of the International Symposium on Circuits and Systems (IEEE Cat. No. 04CH37512), vol. 3. IEEE, May 2004, pp. III–929.

[15] E. Nadernejad, S. Forchhammer, and J. Korhonen, “Artifact reduction of compressed images and video combining adaptive fuzzy filtering and directional anisotropic diffusion,” in Proceedings of the 3rd European Workshop on Visual Information Processing. IEEE, 2011, pp. 24–29.

[16] T. Van Nguyen, T. H. Do, and D. T. Vo, “Advanced texture-adapted blocking removal for compressed visual content,” in Proceedings of the International Conference on Advanced Technologies for Communications (ATC). IEEE, Oct. 2015, pp. 285–290.

[17] N. Kanopoulos, N. Vasanthavada, and R. L. Baker, “Design of an image edge detection filter using the Sobel operator,” IEEE Journal of Solid-State Circuits, vol. 23, no. 2, pp. 358–367, 1988.

[18] A. Jain, M. Gupta, S. Tazi, and Deepika, “Comparison of edge detectors,” in Proceedings of the International Conference on Medical Imaging, m-Health and Emerging Communication Systems (MedCom). IEEE, 2014, pp. 289–294.

[19] N. Sochen, R. Kimmel, and R. Malladi, “A general framework for low level vision,” IEEE Transactions on Image Processing, vol. 7, no. 3, pp. 310–318, 1998.

[20] N. Houhou, J.-P. Thiran, and X. Bresson, “Fast texture segmentation based on semi-local region descriptor and active contour,” Numerical Mathematics: Theory, Methods and Applications, vol. 2, no. 4, pp. 445–468, 2009.

[21] A. A. Efros and T. K. Leung, “Texture synthesis by non-parametric sampling,” in Proceedings of the Seventh IEEE International Conference on Computer Vision, vol. 2. IEEE, 1999, pp. 1033–1038.

[22] L. Liang, C. Liu, Y.-Q. Xu, B. Guo, and H.-Y. Shum, “Real-time texture synthesis by patch-based sampling,” ACM Transactions on Graphics (ToG), vol. 20, no. 3, pp. 127–150, 2001.

[23] T. Van Nguyen, D. T. Vo, and T. H. Do, “Highly noise resistant Beltrami-based texture maps with window derivative,” in Proceedings of the 2nd National Foundation for Science and Technology Development Conference on Information and Computer Science (NICS). IEEE, 2015, pp. 71–75.

Thai Van Nguyen graduated in telecommunication electronics from Can Tho University in 2003 and received the M.S. degree in electronics engineering from the Ho Chi Minh City University of Technology (HCMUT), HCMC, Vietnam, in 2009. He is currently pursuing the Ph.D. degree at HCMUT and works at MobiFone Corporation, Vietnam. His research interests include image and video quality enhancement and MIMO.

Tuan Do-Hong received the B.S. and M.Eng. degrees in electrical engineering from the Ho Chi Minh City University of Technology, Vietnam National University Ho Chi Minh City, Vietnam, in 1994 and 1997, respectively, and the M.Sc. and Ph.D. degrees in communication engineering from the Munich University of Technology, Germany, in 2000 and 2004, respectively. He has been the Dean of the Faculty of Electrical and Electronics Engineering, Ho Chi Minh City University of Technology, Vietnam National University Ho Chi Minh City, Vietnam. His research interests include stochastic signal processing and its applications to image and video processing.

Dung Trung Vo (S’06–M’09) received the B.S. and M.S. degrees from HCMUT, Vietnam, in 2002 and 2004, respectively, and the Ph.D. degree from the University of California at San Diego, La Jolla, in 2009. He has been a Fellow of the Vietnam Education Foundation (VEF) and has been with HCMUT since 2002. He interned at Mitsubishi Electric Research Laboratories (MERL), Cambridge, MA, and Thomson Corporate Research, Princeton, NJ, in the summers of 2007 and 2008, respectively. He has been a staff 2 research engineer at the Digital Media Solutions Lab, Samsung Research America, Irvine, CA, since 2009. He received the Special Merit Awards for Outstanding Paper at the IEEE Conference on Consumer Electronics (ICCE) in 2011 and 2012. His research interests are algorithms and applications for image and video coding and postprocessing.
