Application of parameters of voice signal autoregressive models to solve speaker recognition problems

These methods are widely studied for Slavic and Germanic languages, and automatic speaker recognition systems have been developed on their basis. However, the recognition accuracy of such systems is not yet sufficient for industrial deployment. The main reasons for this are the following:

- the absence of formalized criteria for selecting the length of the window used to decompose the original voice signal (VS);
- ambiguity in choosing the basis functions of the VS transformation;
- instability of informative speech features in the presence of noise;
- transformation of the original VS, which increases resource requirements and introduces significant errors into the calculated informative speech features;
- significant variability of informative feature values for the same speaker.

Trang 1

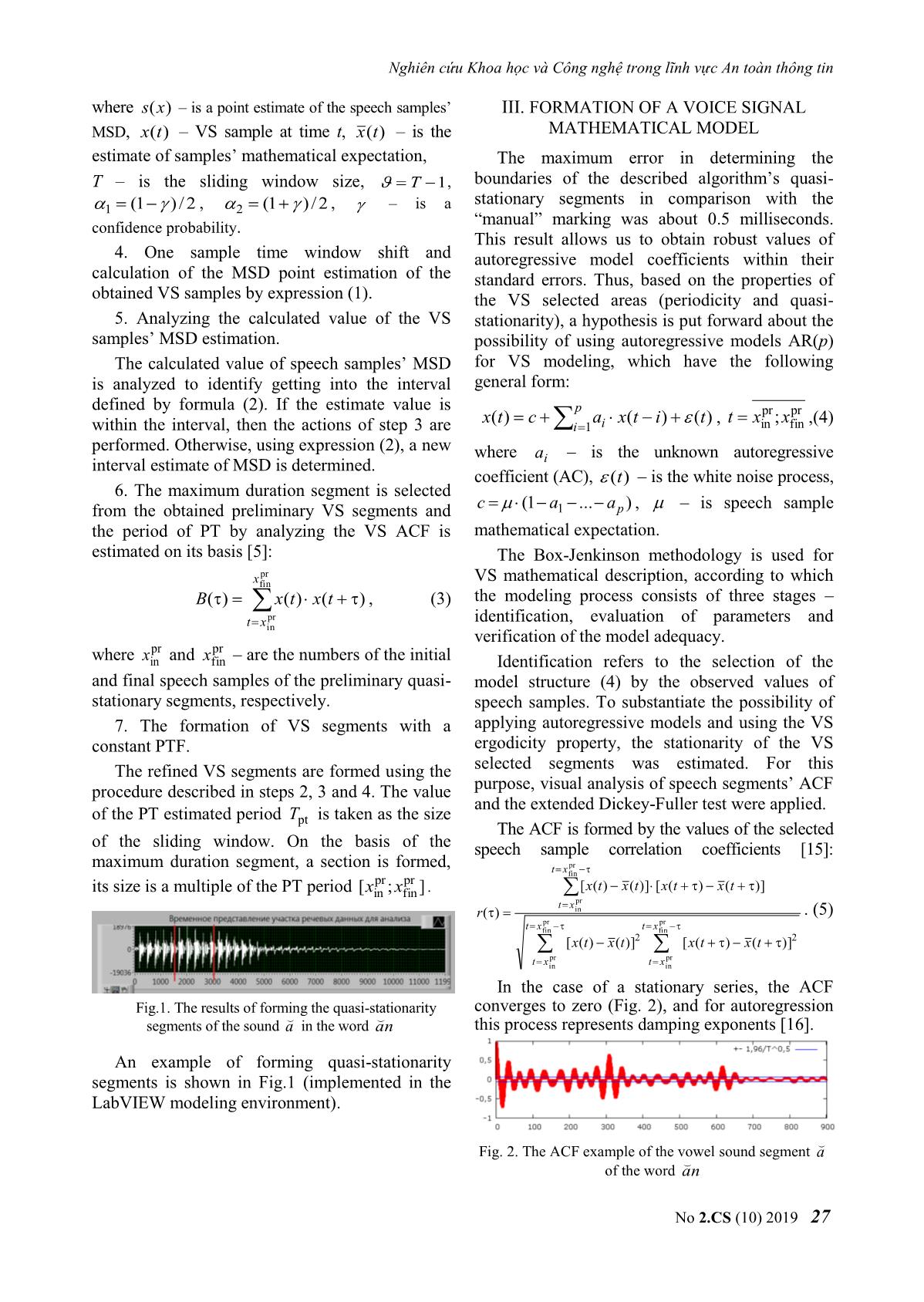

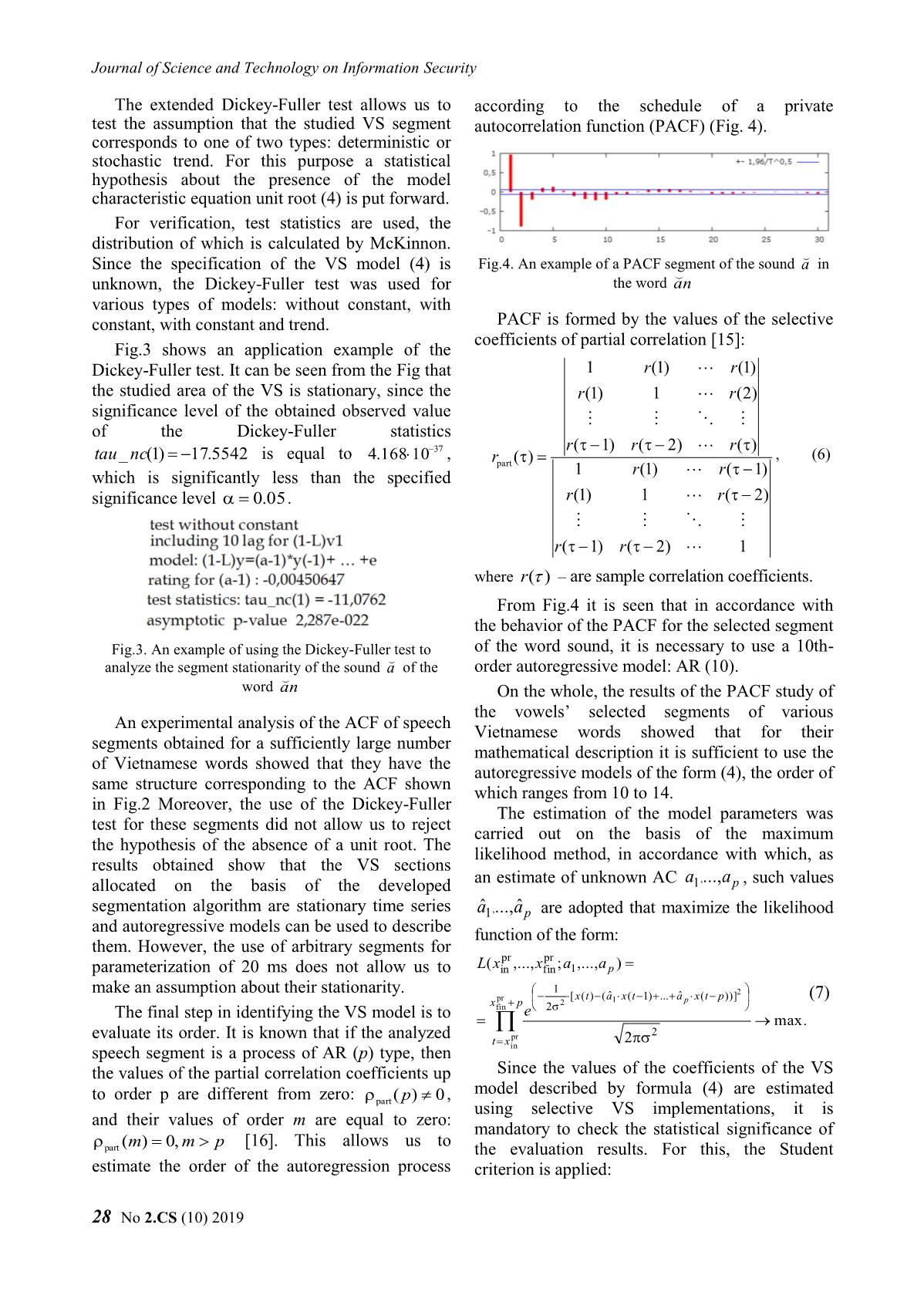

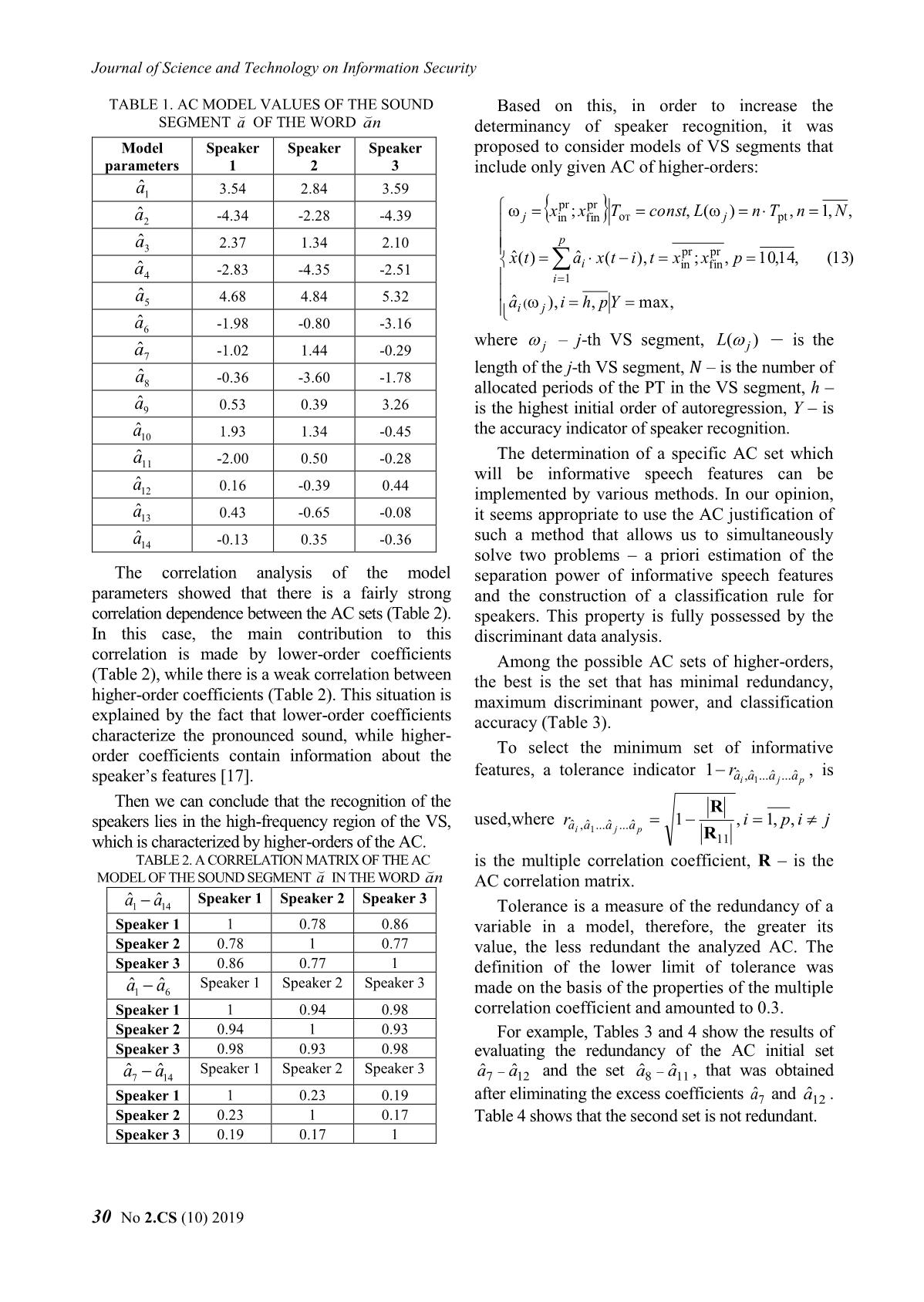

Trang 2

Trang 3

Trang 4

Trang 5

Trang 6

Trang 7

Trang 8

Trang 9

Trang 10

Tải về để xem bản đầy đủ

Tóm tắt nội dung tài liệu: Application of parameters of voice singal autoregressive models to solve speaker recognition problems

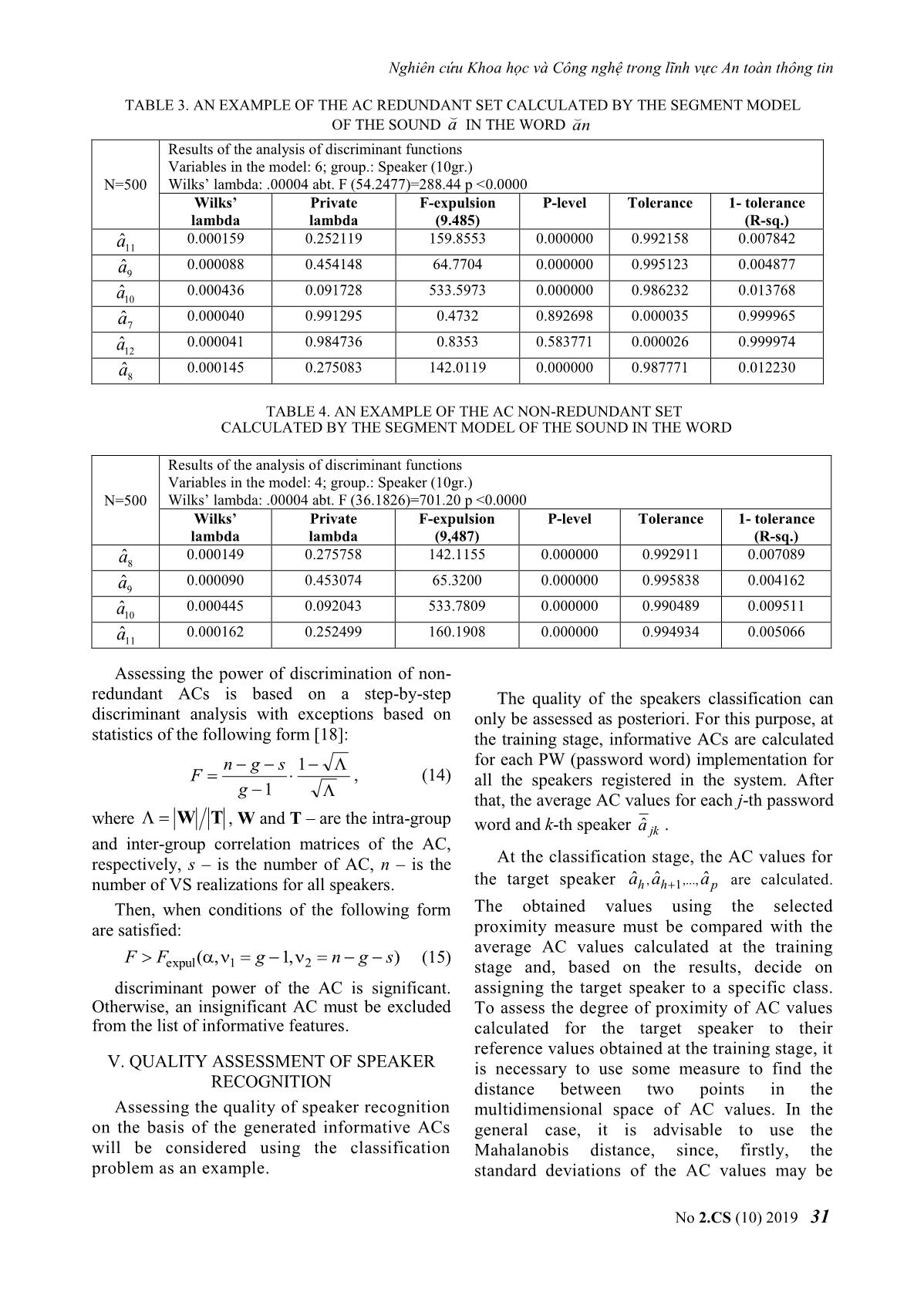

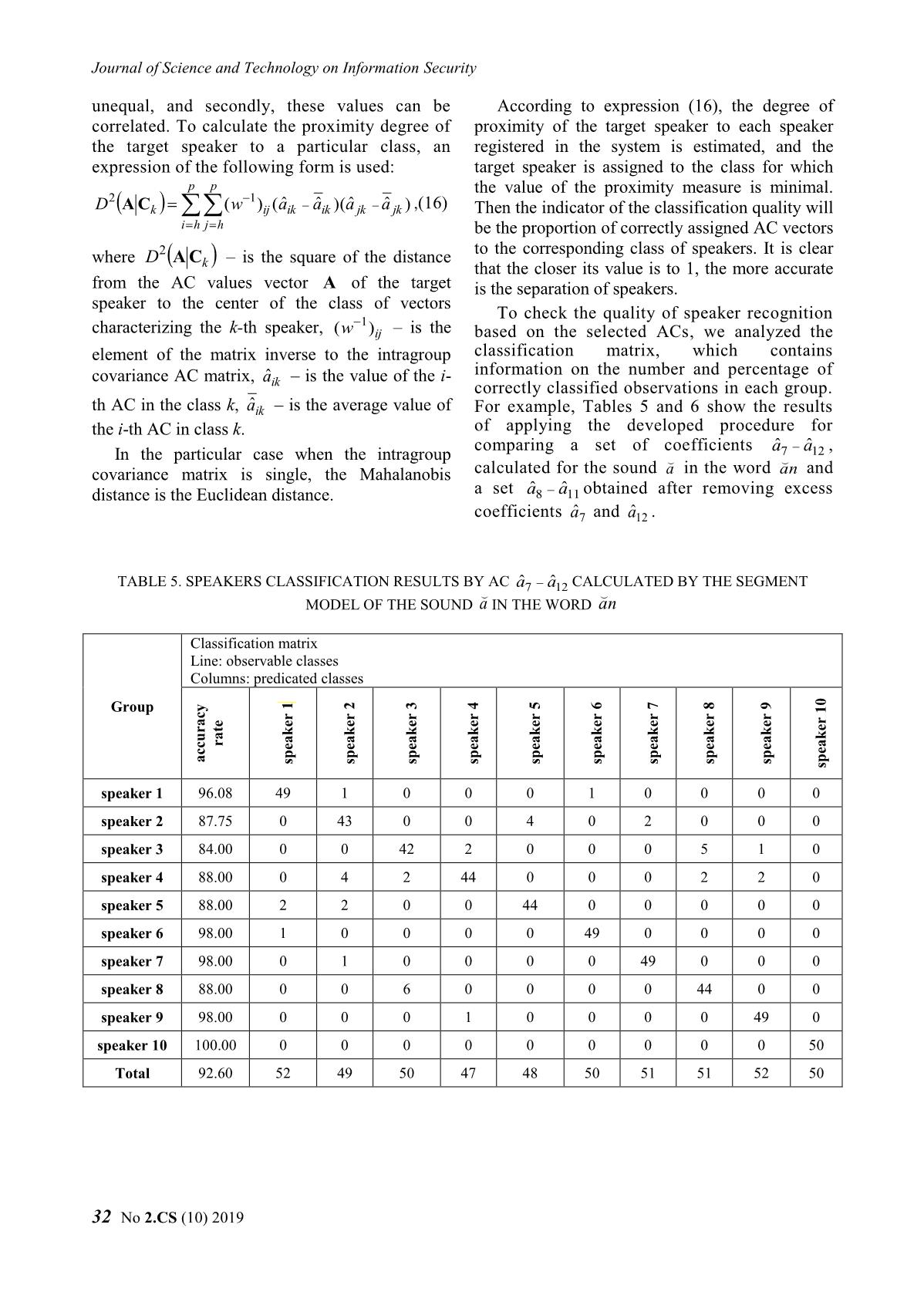

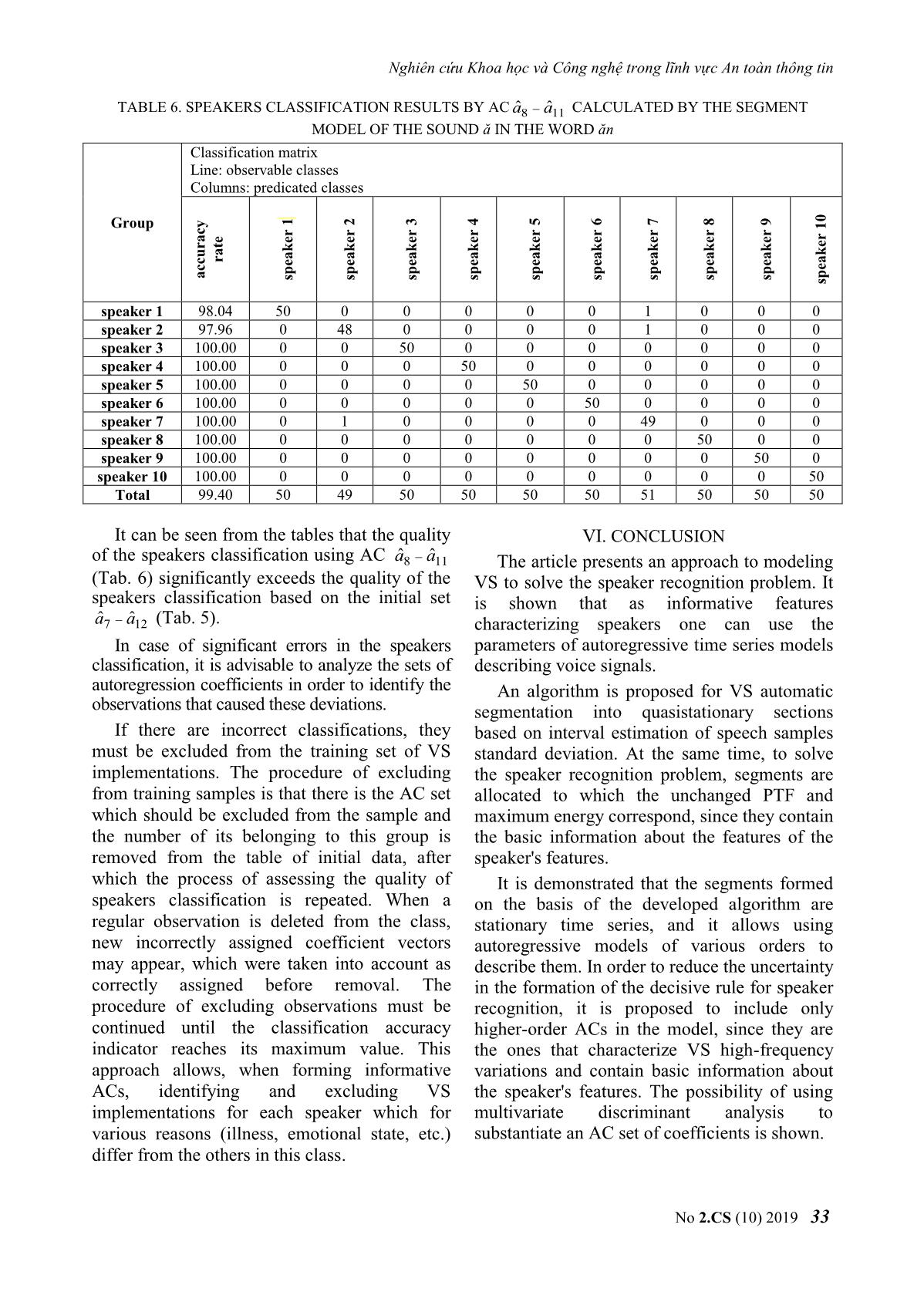

Results of the analysis of discriminant functions

Variables in the model: 6; grouping: Speaker (10 groups); N = 500; Wilks' lambda: 0.00004; approx. F(54, 2477) = 288.44; p < 0.0000

| AC | Wilks' lambda | Partial lambda | F-to-remove (9, 485) | p-level | Tolerance | 1 − tolerance (R²) |
|---|---|---|---|---|---|---|
| $\hat a_{11}$ | 0.000159 | 0.252119 | 159.8553 | 0.000000 | 0.992158 | 0.007842 |
| $\hat a_{9}$ | 0.000088 | 0.454148 | 64.7704 | 0.000000 | 0.995123 | 0.004877 |
| $\hat a_{10}$ | 0.000436 | 0.091728 | 533.5973 | 0.000000 | 0.986232 | 0.013768 |
| $\hat a_{7}$ | 0.000040 | 0.991295 | 0.4732 | 0.892698 | 0.000035 | 0.999965 |
| $\hat a_{12}$ | 0.000041 | 0.984736 | 0.8353 | 0.583771 | 0.000026 | 0.999974 |
| $\hat a_{8}$ | 0.000145 | 0.275083 | 142.0119 | 0.000000 | 0.987771 | 0.012230 |

TABLE 4. AN EXAMPLE OF A NON-REDUNDANT AC SET CALCULATED BY THE SEGMENT MODEL OF THE SOUND IN THE WORD

Results of the analysis of discriminant functions

Variables in the model: 4; grouping: Speaker (10 groups); N = 500; Wilks' lambda: 0.00004; approx. F(36, 1826) = 701.20; p < 0.0000

| Wilks' lambda | Partial lambda | F-to-remove (9, 487) | p-level | Tolerance | 1 − tolerance (R²) |
|---|---|---|---|---|---|
| 0.000149 | 0.275758 | 142.1155 | 0.000000 | 0.992911 | 0.007089 |
| 0.000090 | 0.453074 | 65.3200 | 0.000000 | 0.995838 | 0.004162 |
| 0.000445 | 0.092043 | 533.7809 | 0.000000 | 0.990489 | 0.009511 |
| 0.000162 | 0.252499 | 160.1908 | 0.000000 | 0.994934 | 0.005066 |

The discriminating power of the non-redundant ACs is assessed by stepwise discriminant analysis with exclusion of variables. This analysis uses a statistic of the following form [18]:

$$F_{\text{rem}} = \frac{n-g-s+1}{g-1}\cdot\frac{1-\Lambda}{\Lambda}, \qquad (14)$$

The symbols in (14) are as follows:

- $\Lambda$ is the partial Wilks' lambda of the AC being tested: the value of $|W|/|T|$ computed with this AC divided by the value computed without it;
- $W$ and $T$ are the intra-group and total covariance matrices of the ACs, respectively;
- $g$ is the number of groups (speakers);
- $s$ is the number of ACs in the model;
- $n$ is the number of VS realizations for all speakers.

The discriminating power of an AC is significant if the following condition is satisfied:

$$F_{\text{rem}} > F_{\alpha}(\nu_1 = g-1,\ \nu_2 = n-g-s+1), \qquad (15)$$

Here $F_{\alpha}(\nu_1, \nu_2)$ is the critical value of the F-distribution with $\nu_1$ and $\nu_2$ degrees of freedom at significance level $\alpha$. Otherwise, the insignificant AC must be excluded from the list of informative features.

V. QUALITY ASSESSMENT OF SPEAKER RECOGNITION

The quality of speaker recognition based on the generated informative ACs is considered below, using the classification problem as an example.

The quality of speaker classification can only be assessed a posteriori. For this purpose, at the training stage, informative ACs are calculated for each realization of the password word (PW) for all speakers registered in the system. The average AC values $\bar{\hat a}_{jk}$ are then calculated for each $j$-th password word and $k$-th speaker.

At the classification stage, the AC values $\hat a_h, \hat a_{h+1}, \ldots, \hat a_p$ are calculated for the target speaker. These values are compared with the average AC values from the training stage using the selected proximity measure. Based on the results, a decision is made to assign the target speaker to a specific class.

No 2.CS (10) 2019 31 Journal of Science and Technology on Information Security

Comparing the target speaker's AC values with their reference values from the training stage requires a measure of the distance between two points in the multidimensional space of AC values. In the general case, the Mahalanobis distance is advisable, for two reasons: the standard deviations of the AC values may be unequal, and these values may be correlated. The degree of proximity of the target speaker to a particular class is calculated with an expression of the following form:

$$D^2(A, C_k) = \sum_{i=h}^{p}\sum_{j=h}^{p} (w^{-1})_{ij}\,(\hat a_{ik} - \bar{\hat a}_{ik})(\hat a_{jk} - \bar{\hat a}_{jk}), \qquad (16)$$

The symbols in (16) are as follows:

- $D^2(A, C_k)$ is the squared distance from the AC vector $A$ of the target speaker to the center of the class $C_k$ of vectors characterizing the $k$-th speaker;
- $(w^{-1})_{ij}$ is an element of the inverse of the intra-group covariance matrix of the ACs;
- $\hat a_{ik}$ is the value of the $i$-th AC compared with class $k$;
- $\bar{\hat a}_{ik}$ is the mean value of the $i$-th AC in class $k$.

In the particular case when the intra-group covariance matrix is the identity matrix, the Mahalanobis distance reduces to the Euclidean distance.

Expression (16) is used to estimate the proximity of the target speaker to each speaker registered in the system. The target speaker is then assigned to the class with the minimal value of the proximity measure. The classification quality indicator is the proportion of AC vectors correctly assigned to their speaker class. The closer this value is to 1, the more accurately the speakers are separated.

To check the quality of speaker recognition with the selected ACs, we analyzed the classification matrix. It gives the number and percentage of correctly classified observations in each group. Tables 5 and 6 show the results of applying the developed procedure to two sets of coefficients:

- Table 5: the set $\hat a_7$–$\hat a_{12}$, calculated for the sound a in the word an;
- Table 6: the set $\hat a_8$–$\hat a_{11}$, obtained after removing the redundant coefficients $\hat a_7$ and $\hat a_{12}$.

TABLE 5. SPEAKER CLASSIFICATION RESULTS BY ACs CALCULATED BY THE SEGMENT MODEL OF THE SOUND a IN THE WORD an

Classification matrix (rows: observed classes; columns: predicted classes)

| Observed class | Accuracy, % | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Speaker 1 | 96.08 | 49 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 |
| Speaker 2 | 87.75 | 0 | 43 | 0 | 0 | 4 | 0 | 2 | 0 | 0 | 0 |
| Speaker 3 | 84.00 | 0 | 0 | 42 | 2 | 0 | 0 | 0 | 5 | 1 | 0 |
| Speaker 4 | 88.00 | 0 | 4 | 2 | 44 | 0 | 0 | 0 | 2 | 2 | 0 |
| Speaker 5 | 88.00 | 2 | 2 | 0 | 0 | 44 | 0 | 0 | 0 | 0 | 0 |
| Speaker 6 | 98.00 | 1 | 0 | 0 | 0 | 0 | 49 | 0 | 0 | 0 | 0 |
| Speaker 7 | 98.00 | 0 | 1 | 0 | 0 | 0 | 0 | 49 | 0 | 0 | 0 |
| Speaker 8 | 88.00 | 0 | 0 | 6 | 0 | 0 | 0 | 0 | 44 | 0 | 0 |
| Speaker 9 | 98.00 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 49 | 0 |
| Speaker 10 | 100.00 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 50 |
| Total | 92.60 | 52 | 49 | 50 | 47 | 48 | 50 | 51 | 51 | 52 | 50 |

TABLE 6.
SPEAKER CLASSIFICATION RESULTS BY ACs CALCULATED BY THE SEGMENT MODEL OF THE SOUND ă IN THE WORD ăn

Classification matrix (rows: observed classes; columns: predicted classes)

| Observed class | Accuracy, % | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Speaker 1 | 98.04 | 50 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |
| Speaker 2 | 97.96 | 0 | 48 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |
| Speaker 3 | 100.00 | 0 | 0 | 50 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Speaker 4 | 100.00 | 0 | 0 | 0 | 50 | 0 | 0 | 0 | 0 | 0 | 0 |
| Speaker 5 | 100.00 | 0 | 0 | 0 | 0 | 50 | 0 | 0 | 0 | 0 | 0 |
| Speaker 6 | 100.00 | 0 | 0 | 0 | 0 | 0 | 50 | 0 | 0 | 0 | 0 |
| Speaker 7 | 100.00 | 0 | 1 | 0 | 0 | 0 | 0 | 49 | 0 | 0 | 0 |
| Speaker 8 | 100.00 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 50 | 0 | 0 |
| Speaker 9 | 100.00 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 50 | 0 |
| Speaker 10 | 100.00 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 50 |
| Total | 99.40 | 50 | 49 | 50 | 50 | 50 | 50 | 51 | 50 | 50 | 50 |

The tables show that speaker classification with the ACs $\hat a_8$–$\hat a_{11}$ (Table 6) is significantly better than classification with the initial set $\hat a_7$–$\hat a_{12}$ (Table 5).

If speaker classification shows significant errors, the sets of autoregression coefficients should be analyzed to identify the observations that caused these deviations. Incorrectly classified observations must be excluded from the training set of VS realizations. To exclude an observation, the corresponding AC set is removed, together with its group label, from the table of initial data. The assessment of classification quality is then repeated.

Deleting an observation from a class may produce new incorrectly assigned coefficient vectors that were counted as correct before the removal. The exclusion procedure must therefore continue until the classification accuracy indicator reaches its maximum value. This approach identifies and excludes, for each speaker, VS realizations that differ from the rest of the class for various reasons (illness, emotional state, etc.).

VI. CONCLUSION

The article presents an approach to modeling the VS to solve the speaker recognition problem. It is shown that the parameters of autoregressive time series models describing voice signals can serve as informative features characterizing speakers.

An algorithm is proposed for automatic segmentation of the VS into quasi-stationary sections, based on interval estimation of the standard deviation of speech samples. For speaker recognition, the algorithm selects the segments with an unchanged PTF and maximum energy, since these carry the basic information about the speaker's features.

It is demonstrated that the segments formed by the developed algorithm are stationary time series, so autoregressive models of various orders can be used to describe them. To reduce uncertainty in forming the decision rule for speaker recognition, only higher-order ACs are included in the model. These characterize the high-frequency variations of the VS and contain the basic information about the speaker's features. It is also shown that multivariate discriminant analysis can be used to substantiate the choice of the AC set.

The results of assessing the quality of speaker classification allow us to conclude that the described approach can be used to create automatic speaker recognition systems for the Vietnamese language.

REFERENCES

[1]. Sorokin V. N., Vyugin V. V., Tananykin A. A. Voice recognition: analytical review // Information Processes. – Vol. 12, No. 1. – pp. 1–13, 2012.
[2]. Pervushin E. A. Review of the main methods of speaker recognition // Mathematical Structures and Modeling. – No. 3 (24). – pp. 41–54, 2011.
[3]. Rohmanenko I. Algorithms and software for verifying a speaker using an arbitrary phrase: thesis ... cand. tech. sciences. – Tomsk, 2017. – 111 pp. [Electronic resource]. URL: https://postgraduate.tusur.ru/system/file_copies/files/000/000/262/original/dissertation.pdf
[4]. Ahmad K. S. A unique approach in text independent speaker recognition using MFCC feature sets and probabilistic neural network // Advances in Pattern Recognition (ICAPR), Eighth International Conference on. – pp. 16, 2015.
[5]. Markel J. D., Gray A. H. Linear Prediction of Speech / transl. from English; ed. by Yu. N. Prokhorov and V. S. Zvezdin. – Moscow: Communication, 1980. – 308 pp.
[6]. Lysak A. B. Identification and authentication of a person: a review of the basic biometric methods of user authentication in computer systems // Mathematical Structures and Modeling. – No. 2 (26). – pp. 124–134, 2012.
[7]. Meshcheryakov R. V., Konev A. A. Algorithms for evaluating automatic segmentation of a speech signal // Informatics and Control Systems. – No. 1 (31). – pp. 195–206, 2012.
[8]. Ding J., Yen C. T. Enhancing GMM speaker identification by incorporating SVM speaker verification for intelligent web-based speech applications // Multimedia Tools and Applications. – Vol. 74, No. 14. – pp. 5131–5140, 2015.
[9]. Trubitsyn V. G. Models and algorithms in speech signal analysis systems: thesis ... cand. tech. sciences. – Belgorod, 2013. – 134 pp. [Electronic resource]. URL: algoritmy-v-sistemakh-analiza-rechevykh-signalov.
[10]. Ganapathiraju A., Hamaker J., Picone J., Doddington G. R., Ordowski M. Syllable-based large vocabulary continuous speech recognition // IEEE Transactions on Speech and Audio Processing. – Vol. 9, No. 4. – pp. 358–366, 2001.
[11]. Tomchuk K. K. Segmentation of speech signals for tasks of automatic speech processing: thesis ... cand. tech. sciences. – St. Petersburg, 2017. – 197 pp. [Electronic resource].
[12]. Sorokin V. N., Tsyplikhin A. I. Segmentation and recognition of vowels // Information Processes. – Vol. 4, No. 2. – pp. 202–220, 2004.
[13]. Nguyen An Tuan. Automatic analysis, recognition and synthesis of tonal speech (based on the material of the Vietnamese language): thesis ... Doctor of Technical Sciences. – Moscow, 1984. – 456 pp. [Electronic resource]. URL: https://dissercat.com/content/avtomaticheskii-analiz-raspoznavanie-i-sintez-tonalnoi-rechi-na-materiale-vetnamskogo-yazyka
[14]. Gmurman V. E. Probability theory and mathematical statistics: textbook for universities. – 12th ed., revised. – Moscow: Yurayt, 2010. – 478 pp.
[15]. Box G., Jenkins G. Time Series Analysis / transl. from English; ed. by V. F. Pisarenko. – Moscow: Mir, 1974. – 406 pp.
[16]. Kantorovich G. G. Analysis of time series. – Moscow, 2003. – 129 pp. [Electronic resource]. URL: http://biznesbooks.com/components/com_jshopping/files/demo_products/kantorovich-g-g-analiz-vremennykh-ryadov.pdf
[17]. Novikov E. I., Do Kao Khan. Parameterization of a speech signal based on autoregressive models // XI All-Russian Interdepartmental Scientific Conference "Actual problems of the development of security systems, special communications and information for the needs of public authorities of the Russian Federation": materials and reports (Oryol, February 5–6, 2019). In 10 parts / under the general editorship of P. L. Malyshev. – Oryol: Academy of the Federal Security Service of Russia, 2019. – pp. 127–130.
[18]. Kim J.-O., Mueller C. W., Klecka W. R., et al. Factor, discriminant and cluster analysis / transl. from English; ed. by I. S. Enyukov. – Moscow: Finance and Statistics, 1989. – 215 pp.

ABOUT THE AUTHORS

PhD. Evgeny Novikov
Workplace: The Academy of Federal Guard Service of the Russian Federation.
Email: nei05@rambler.ru
The education process: received his Ph.D. degree at the Research Institute of Radio-Electronic Systems of the Russian Federation in Sep 2010.
Research today: modeling of random processes, statistical data processing and analysis, decision-making.

PhD. Vladimir Trubitsyn
Workplace: The Academy of Federal Guard Service of the Russian Federation.
Email: gremlin.kop@mail.ru
The education process: received his Ph.D. degree at Belgorod Technical University of the Russian Federation in Dec 2014.
Research today: modeling of random processes, information and coding theory, voice signal processing and analysis.

The education process: received his Ph.D. degree in Engineering Sciences at the Academy of Federal Guard Service of the Russian Federation in Dec 2013.
Research today: information security, unauthorized access protection, mathematical cryptography, theoretical problems of computer science.
